Introduction

When building applications powered by large language models (LLMs), one of the most critical early decisions is which model to use. This choice influences not only the accuracy and usefulness of your system but also its scalability, cost structure, and user experience. For many developers, the tension lies in balancing performance (reasoning, coherence, reliability) with cost (token usage, compute time, latency).

As OpenAI’s ecosystem has grown from GPT-3 to GPT-4o and beyond, developers now have access to a spectrum of models — from lightweight “mini” models optimized for cost and speed, to flagship models offering state-of-the-art reasoning and multimodal understanding. Choosing wisely requires an understanding of both technical trade-offs and business economics.

This guide provides an in-depth exploration of OpenAI’s model lineup, cost structures, and performance characteristics. We’ll also cover real-world use cases, implementation strategies, and future outlooks, helping you make informed decisions for your specific application.

1. The Evolution of OpenAI Models

The trajectory of OpenAI’s model development reflects a constant push for greater capability, accessibility, and efficiency.

- GPT-3 (2020) brought the first mainstream wave of large language models. While powerful, it was relatively expensive and limited in context length, and developers often had to work around its quirks.

- GPT-3.5 (2022) offered dramatic improvements in fluency, usability, and cost-efficiency. It became the foundation of many chatbots and consumer applications due to its affordability.

- GPT-4 (2023) marked a leap forward in reasoning, reliability, and problem-solving, particularly in coding, mathematics, and complex reasoning tasks.

- GPT-4o (2024) introduced multimodality at scale. It handles text, vision, and audio seamlessly, optimized for real-world interactive applications. It also achieves lower latency than GPT-4.

- Mini models like GPT-4o-mini emerged to meet demand for real-time, cost-sensitive tasks, providing strong baseline performance at a fraction of the price.

This evolution means developers now face a rich but complex landscape where no single model is “best” for all situations. Instead, the best choice depends on your use case, constraints, and scale.

2. Model Families and Their Capabilities

GPT-4o (Omni Models)

The flagship model family, GPT-4o, supports text, vision, and audio. It excels in:

- Complex reasoning across modalities (e.g., describing images, analyzing charts, handling multimodal inputs).

- Long context windows, enabling ingestion of documents, transcripts, or large datasets.

- Real-time applications, where streaming output and reduced latency matter.

Best suited for: mission-critical applications, research, multimodal apps, advanced coding assistants.

GPT-4o-mini

A smaller, faster, cheaper sibling of GPT-4o. While less powerful in edge-case reasoning, it:

- Provides high-quality results for most conversational and lightweight reasoning tasks.

- Costs ~10x less per token compared to GPT-4o.

- Has reduced latency, making it ideal for interactive apps and chatbots.

Best suited for: large-scale deployment, high-volume customer interactions, real-time workflows.

GPT-4 (Legacy Text-only Models)

These models remain valuable when:

- High reasoning quality is required in text-only domains.

- Long context support (in some variants) is useful for enterprise summarization.

- A stable, proven text-only model is preferred.

Best suited for: coding assistants, legal/research analysis, text-heavy enterprise tasks.

GPT-3.5

While weaker than GPT-4 families, GPT-3.5 offers:

- Extremely low cost per token.

- Fast response times.

- Adequate performance for casual conversation, simple summarization, and low-stakes tasks.

Best suited for: early-stage prototypes, budget-constrained startups, simple assistants.

Embedding Models

Optimized for vector representations of text.

- Power semantic search, clustering, retrieval-augmented generation (RAG).

- Cost only a fraction of generative models.

- Crucial for applications where search and context retrieval precede reasoning.

Best suited for: semantic search engines, recommender systems, hybrid RAG pipelines.

Moderation Models

Specialized lightweight models that classify content as safe/unsafe.

- Extremely cheap and scalable.

- Designed to run inline with every request at production scale.

Best suited for: compliance layers, user-facing chatbots, content platforms.

Quick Comparison Table

To make the differences easier to digest, here’s a quick side-by-side comparison:

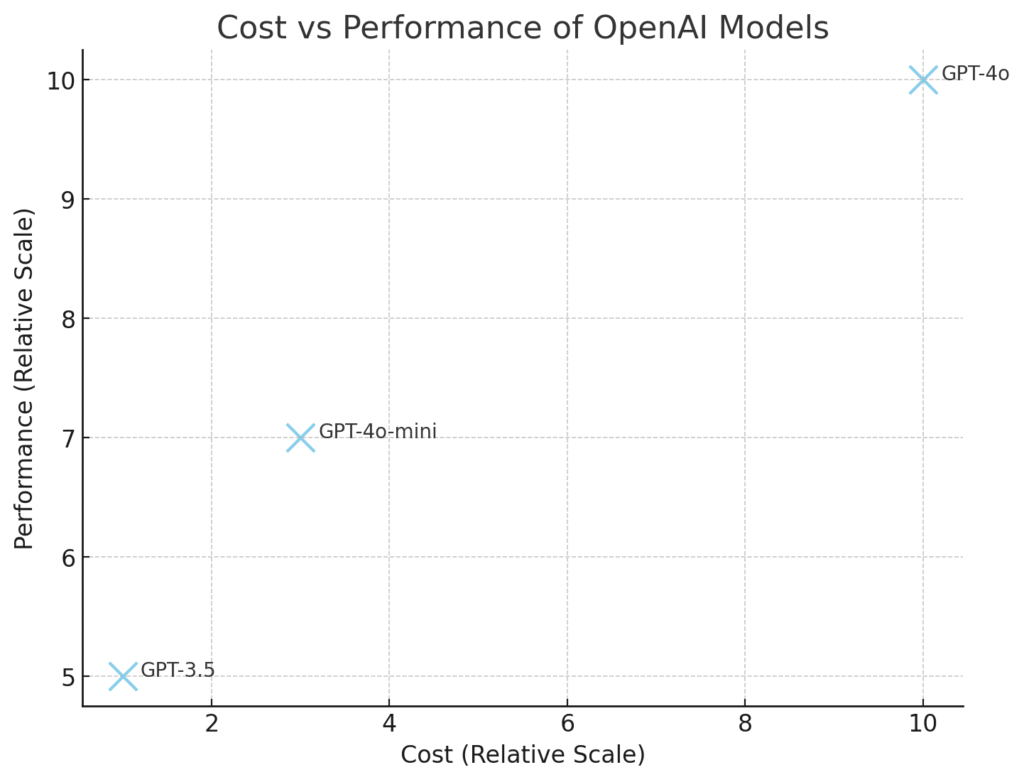

| Model | Cost | Performance | Latency | Context Length | Multimodality |

|---|---|---|---|---|---|

| GPT-3.5 | Lowest | Basic reasoning, good fluency | Low | Shorter | No |

| GPT-4o-mini | Medium (~3x GPT-3.5) | Strong baseline, faster & cheaper | Very Low | Medium | Yes (basic) |

| GPT-4o | Highest (~10x GPT-3.5) | Best reasoning, multimodal, long context | Higher (streaming) | Longest | Yes (advanced) |

3. Pricing Models and Cost Structures

OpenAI charges per token, for both input and output. One token is roughly 4 characters of English text, or about ¾ of a word.

Cost Breakdown (illustrative ranges)

- GPT-4o: Premium cost — best reserved for high-value queries.

- GPT-4o-mini: ~10x cheaper, enabling mass-scale deployments.

- GPT-3.5: One of the cheapest, excellent for prototypes and budget-sensitive tasks.

- Embeddings: Orders of magnitude cheaper than generative models.

- Moderation: Negligible cost.

Example Calculation:

A customer support bot handling 100,000 daily queries averaging 300 tokens per query:

- GPT-4o: Costs could exceed $15,000/month.

- GPT-4o-mini: Roughly $1,500/month.

- GPT-3.5: ~$500/month.

This example illustrates why mini models are critical for scalability, while flagship models are reserved for special cases.

4. Performance Characteristics

Performance is multidimensional. Choosing a model means balancing:

Accuracy & Reasoning

- GPT-4o demonstrates superior performance on benchmarks (e.g., MMLU, code tests).

- GPT-4o-mini often achieves “good enough” performance for non-critical tasks.

- GPT-3.5 lags in multi-step reasoning but shines in cost-efficiency.

Latency & Throughput

- Smaller models respond faster.

- GPT-4o-mini is well suited for real-time UIs.

- Flagship models may have noticeable delay unless streamed.

Context Length

- GPT-4o supports long contexts, critical for summarizing large documents.

- GPT-3.5 often maxes out sooner, limiting complex RAG scenarios.

Multimodality

- GPT-4o is uniquely capable with images and audio.

- GPT-4o-mini supports multimodality but at lower resolution in reasoning.

Energy & Compute Efficiency

- Smaller models reduce infrastructure load.

- For edge deployments, efficiency often outweighs raw performance.

5. Use Case Deep Dive

Conversational AI

- Mini models: Perfect for customer support, FAQs, and virtual assistants.

- Flagship models: Reserved for nuanced, sensitive, or high-stakes conversations (health, law, finance).

Enterprise Document Summarization

- GPT-4o: Handles long contracts, technical reports, research papers.

- GPT-3.5: Works for short, simple documents.

Software Engineering

- GPT-4o: Best for debugging, code synthesis, handling ambiguous tasks.

- GPT-4o-mini: Automates boilerplate, small snippets.

Knowledge Retrieval & Semantic Search

- Embeddings: Provide the backbone of scalable retrieval.

- GPT-4o: Adds reasoning and re-ranking.

Real-Time Applications

- Latency-sensitive apps (games, AR/VR, live assistants) benefit from GPT-4o-mini.

- Critical queries can be escalated to GPT-4o on demand.

Safety & Compliance

- Always layer in moderation models.

- Avoid routing sensitive content to weaker models without filtering.

6. Practical Decision Framework

How should a developer decide?

- Start with the flagship (GPT-4o). Prototype and test user experience.

- Measure costs and latency under realistic load.

- Experiment with mini/3.5 models — determine if they meet baseline requirements.

- Adopt hybrid workflows:

- Route low-risk queries to GPT-4o-mini.

- Escalate complex cases to GPT-4o.

- Use embeddings for context retrieval.

- Layer moderation throughout.

- Forecast at scale: simulate costs for 10k, 100k, 1M queries/month.

7. Developer Implementation Strategies

Prompt Engineering for Efficiency

- Use precise prompts to reduce wasted tokens.

- Apply structured prompting (system + user roles).

Caching & Reuse

- Cache embeddings and model outputs where possible.

- Reuse summaries or generated content for repeated queries.

Hybrid Model Chaining

- Example: embeddings → GPT-4o-mini for summarization → GPT-4o for final verification.

Fine-tuning vs Prompt Optimization

- Fine-tuning can reduce cost by making smaller models behave more consistently.

- Prompt optimization is cheaper initially, but less predictable.

Monitoring & Telemetry

- Track token usage in logs.

- Build dashboards for cost forecasting.

- Monitor model errors and hallucinations.

8. Case Studies

SaaS Chatbot Startup

- Initially launched with GPT-4o for accuracy.

- Costs ballooned as user base grew.

- Switched to GPT-4o-mini for 90% of queries, kept GPT-4o for escalations.

- Reduced monthly spend by 70% without major quality loss.

Enterprise Search

- Used embeddings to vectorize knowledge base.

- GPT-4o used for re-ranking and complex reasoning.

- Achieved balance of cost-efficiency and accuracy.

Coding Assistant

- Daily tasks routed through GPT-4o-mini (faster, cheaper).

- GPT-4o invoked for debugging or multi-file reasoning.

Research Pipeline

- GPT-4o saved researchers hours by summarizing long papers.

- Even with higher cost, efficiency gains justified spend.

9. Future Outlook

The future of OpenAI models will likely bring:

- Mixture-of-Experts Models: Dynamic routing where specialized sub-models handle parts of the workload.

- Cheaper inference: Hardware and algorithmic improvements lowering cost per token.

- Greater multimodal integration: Models seamlessly combining text, vision, audio, video.

- Hybrid ecosystems: Mix of open-source smaller models and API-based premium models.

- Adaptive model routing: Automated systems deciding in real-time which model to use.

Conclusion

Choosing the right OpenAI model is not about finding “the best” model universally — it’s about matching the model to your use case, budget, and performance needs.

- GPT-4o: unmatched reasoning and multimodal abilities, but costly.

- GPT-4o-mini: excellent balance for scale.

- GPT-3.5: budget-friendly for simpler tasks.

- Embeddings: essential for retrieval and search.

- Moderation: critical for safety at scale.

The most effective strategy for developers is often hybrid: use cheaper models for routine tasks, escalate to more powerful models for critical moments, and integrate embeddings for retrieval efficiency.

In short: Prototype with the flagship, scale with the mini, and always monitor costs.

Comments