Introduction

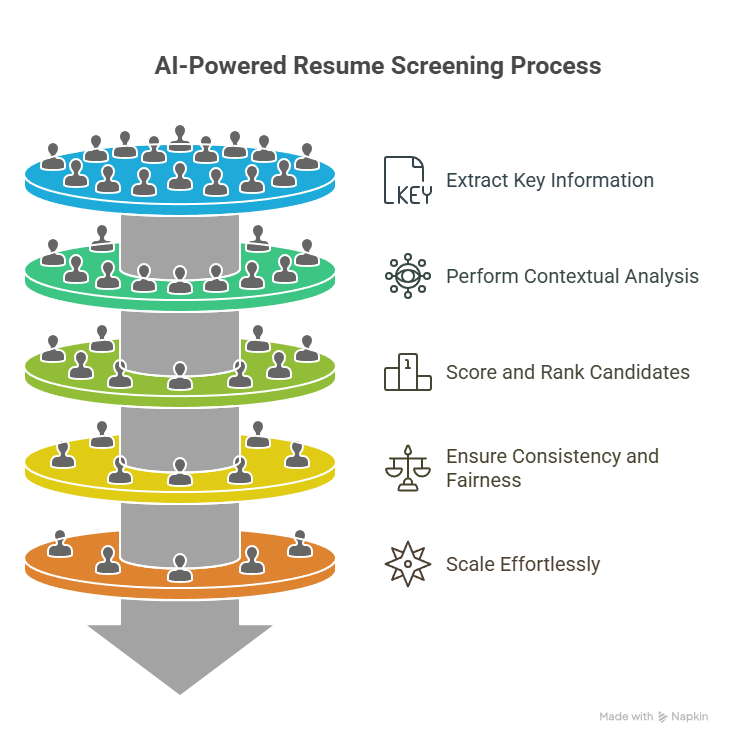

AI job application screener solutions are transforming recruitment by automating the tedious process of resume parsing, candidate evaluation, and ranking. Recruiters often face an overwhelming volume of applications for each job opening, making manual screening slow, inconsistent, and prone to human error. Important candidate details can be missed due to fatigue or unconscious bias, leading to less effective hiring decisions. Automating this screening process helps quickly and accurately analyze large applicant pools, saving valuable time and improving hiring quality.

Artificial intelligence, particularly natural language processing (NLP), enables machines to interpret resumes much as a human recruiter would. An AI-powered screener can parse resumes, evaluate candidates against a job description, and rank applicants by fit.

This blog will guide you through building an MVP AI job application screener using OpenAI API and Flask, helping you streamline your hiring process efficiently.

Overview of Using OpenAI API for AI-Powered Job Screening

The OpenAI API offers powerful language models capable of comprehending complex human language. These models excel in tasks like text classification, summarization, and semantic understanding, making them ideal for resume parsing and candidate evaluation.

By integrating OpenAI’s API with a lightweight web framework like Flask, developers can quickly build a minimum viable product (MVP) that automates resume screening. This approach leverages AI to parse candidate documents, analyze qualifications against job descriptions, and generate ranked lists of applicants—streamlining the recruitment workflow for better outcomes.

In this blog, we will guide you through the process of setting up such an MVP using Flask and OpenAI API, focusing on simplicity and practical functionality.

If you want to familiarize yourself with OpenAI API basics before diving in, check out our detailed tutorial: How to Use OpenAI O1 and O1-Mini.

2. Understanding the Problem

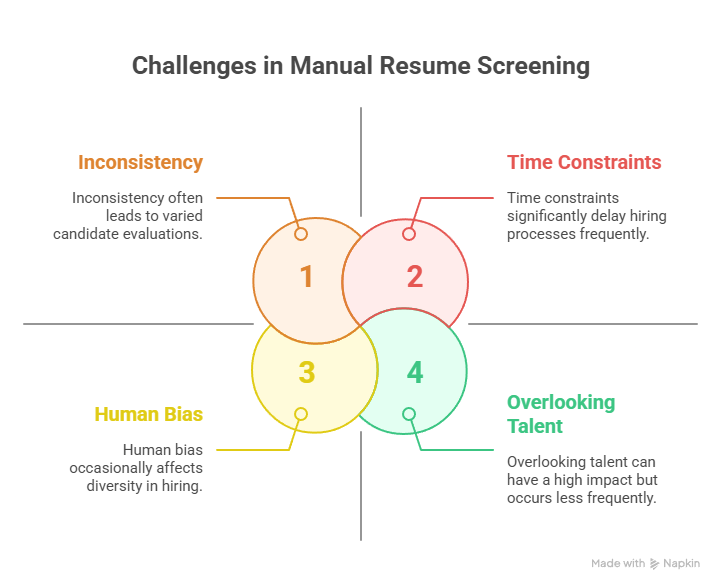

Challenges in Manual Resume Screening

Recruiters and hiring managers often struggle with the tedious and time-consuming task of manually reviewing large volumes of resumes. The process is slow, applies criteria inconsistently, and can miss important candidate details through fatigue or unconscious bias.

Common Pain Points in Recruitment Workflows

Beyond resume screening, recruiters face workflow challenges such as:

- Lack of Standardization: Without clear, consistent criteria, screening becomes subjective and error-prone.

- Delayed Feedback: Candidates often wait long periods for updates, harming the employer brand.

- Scalability Issues: As organizations grow, existing manual processes fail to handle increasing applicant numbers efficiently.

- Difficulty in Prioritizing: It’s hard to quickly identify top candidates amidst a large pool.

- Integration Gaps: Recruitment tools and applicant tracking systems (ATS) may lack seamless automation for screening and ranking.

What AI Can Solve

Artificial intelligence, especially through natural language processing (NLP), addresses these challenges by:

- Automating Resume Parsing: AI quickly extracts relevant information, reducing manual workload.

- Standardizing Evaluations: Applying the same criteria to every candidate reduces subjectivity.

- Reducing Bias: Evaluating candidates against explicit qualification and experience criteria can reduce some sources of bias, although model outputs still need to be audited.

- Scaling Efficiently: AI handles large applicant volumes without fatigue.

- Ranking Candidates: AI assigns scores to candidates based on how well they match job requirements, making prioritization straightforward.

- Improving Candidate Experience: Faster, automated responses and transparent screening build trust.

By leveraging AI, organizations can transform recruitment from a manual bottleneck into a streamlined, data-driven process that identifies the best talent quickly and fairly.

3. Architecture Overview

High-Level System Design for the AI-Powered Screener

The AI-powered job application screener is structured as a clear, modular pipeline that automates the journey from receiving raw resumes to delivering ranked candidate lists. This design ensures easy scalability and integration with existing recruitment workflows.

Core Components

- Input: Candidates upload resumes and cover letters in formats such as PDF, DOCX, or plain text through a simple web interface built with HTML, CSS, and JavaScript.

- Preprocessing: The backend extracts clean text from uploaded documents using Python libraries like pdfplumber and python-docx, preparing the data for AI analysis.

- AI Analysis: The extracted text is sent to the OpenAI API, which interprets the candidate’s skills and experience in relation to the job description using advanced natural language understanding.

- Scoring: The system generates a suitability score reflecting how closely each candidate matches the job requirements, considering factors like skill relevance and experience.

- Output: Candidates are stored and ranked in a MySQL database. Recruiters access a simple, responsive dashboard to view candidate rankings and detailed analysis, powered by basic HTML, CSS, and JavaScript.

Technology Stack

- Backend: Flask (Python) for handling API requests, document processing, AI calls, and database interactions.

- AI Service: OpenAI API for semantic resume analysis and scoring.

- Document Parsing: pdfplumber and python-docx libraries for extracting resume content.

- Database: MySQL for persistent storage of candidate data, scores, and related metadata.

- Frontend: Basic HTML, CSS, and JavaScript to build a lightweight recruiter interface.

- Deployment: Local or cloud-based hosting (Heroku, AWS, etc.) to run the MVP.

This stack strikes a balance between simplicity and functionality, ideal for developing a robust MVP that efficiently automates job application screening.

4. Step 1: Extracting Text from Resumes

The first critical step in building an AI-powered job application screener is reliably extracting text content from resumes. Candidates submit their resumes in various formats, predominantly PDFs and Word documents, which often contain complex layouts, multiple pages, tables, and images. Accurate text extraction ensures the AI model receives clean, structured input to analyze candidates effectively.

Why Text Extraction Matters

Raw resumes are often formatted for human reading, not machine processing. For example, a PDF might include multiple columns, headers, footers, or embedded images, which can confuse simple text extractors. Misinterpreted text can lead to inaccurate candidate assessments downstream. Hence, robust preprocessing using specialized libraries is essential.

Supported Resume Formats

- PDF: The most common format but can have complex layouts. Requires careful extraction to preserve text order.

- DOCX: Microsoft Word documents, more structured but still require parsing to handle styles and formatting.

- Plain Text (.txt): Simplest to handle but rarely used for resumes.

Choosing the Right Libraries for Extraction

For the Flask backend in Python, the following libraries are recommended:

pdfplumber:

- Built specifically to handle PDF files with complex layouts.

- Extracts text page by page, preserving reading order better than many alternatives.

- Supports extraction of tables and metadata if needed for future enhancements.

python-docx:

- Parses DOCX files to extract text from paragraphs and runs.

- Preserves document structure, allowing extraction of headings, bullet points, and lists.

Optional: Libraries like textract or pdfminer.six can be used but often require more configuration.

Detailed Example: Extracting Text from PDFs Using pdfplumber

    import pdfplumber

    def extract_text_from_pdf(file_path):
        extracted_text = ''
        with pdfplumber.open(file_path) as pdf:
            for page in pdf.pages:
                # Extract text, ignore pages with no extractable content
                page_text = page.extract_text()
                if page_text:
                    extracted_text += page_text + '\n'
        return extracted_text.strip()

This function opens the PDF file, iterates through all pages, and concatenates the extracted text. The strip() call removes leading and trailing whitespace from the result.

Detailed Example: Extracting Text from DOCX Using python-docx

    from docx import Document

    def extract_text_from_docx(file_path):
        doc = Document(file_path)
        full_text = []
        for para in doc.paragraphs:
            # Append each non-empty paragraph's text to the list
            if para.text:
                full_text.append(para.text)
        return '\n'.join(full_text)

This method reads the DOCX document paragraph by paragraph and joins them into a single string.
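The two extractors can sit behind a single entry point that picks one by file extension. The sketch below is one possible arrangement, not part of the original code: the names extract_text, EXTRACTORS, and the _read_* helpers are illustrative, and the PDF and DOCX libraries are imported lazily so plain-text handling works even when they are not installed.

```python
from pathlib import Path


def _read_pdf(path):
    import pdfplumber  # imported lazily; only needed for PDF input

    with pdfplumber.open(path) as pdf:
        # extract_text() may return nothing for image-only pages
        return "\n".join(page.extract_text() or "" for page in pdf.pages).strip()


def _read_docx(path):
    from docx import Document  # imported lazily; only needed for DOCX input

    return "\n".join(p.text for p in Document(path).paragraphs if p.text)


def _read_txt(path):
    # Replace undecodable bytes instead of failing on odd encodings
    return Path(path).read_text(encoding="utf-8", errors="replace").strip()


# Hypothetical mapping from lowercase extension to extractor
EXTRACTORS = {".pdf": _read_pdf, ".docx": _read_docx, ".txt": _read_txt}


def extract_text(file_path):
    """Route a resume file to the extractor that matches its extension."""
    suffix = Path(file_path).suffix.lower()
    extractor = EXTRACTORS.get(suffix)
    if extractor is None:
        raise ValueError(f"Unsupported resume format: {suffix or 'none'}")
    return extractor(file_path)
```

Keeping the mapping in one place means a new format only needs one extra entry rather than another if/else branch in the upload route.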

Handling Resume Uploads in Flask

Set up a secure and efficient file upload endpoint in your Flask app:

    import os

    from flask import Flask, request, jsonify
    from werkzeug.utils import secure_filename

    app = Flask(__name__)

    UPLOAD_FOLDER = './uploads'
    ALLOWED_EXTENSIONS = {'pdf', 'docx'}
    app.config['UPLOAD_FOLDER'] = UPLOAD_FOLDER
    os.makedirs(UPLOAD_FOLDER, exist_ok=True)  # file.save() fails if the folder is missing

    def allowed_file(filename):
        return '.' in filename and filename.rsplit('.', 1)[1].lower() in ALLOWED_EXTENSIONS

    @app.route('/upload_resume', methods=['POST'])
    def upload_resume():
        if 'resume' not in request.files:
            return jsonify({'error': 'No file part in the request'}), 400

        file = request.files['resume']
        if file.filename == '':
            return jsonify({'error': 'No file selected'}), 400

        if file and allowed_file(file.filename):
            filename = secure_filename(file.filename)
            save_path = os.path.join(app.config['UPLOAD_FOLDER'], filename)
            file.save(save_path)

            # Compare case-insensitively so 'CV.PDF' is routed to the PDF extractor
            if filename.lower().endswith('.pdf'):
                text = extract_text_from_pdf(save_path)
            else:
                text = extract_text_from_docx(save_path)

            # Proceed with AI analysis using 'text'
            return jsonify({'extracted_text': text}), 200
        else:
            return jsonify({'error': 'Unsupported file type'}), 400

Key Points:

- allowed_file() ensures only PDFs and DOCX files are accepted.

- secure_filename() sanitizes filenames to prevent security issues.

- Uploaded files are saved temporarily before text extraction.

- Proper error handling provides clear feedback to users.

Additional Considerations for Production-Ready MVP

- File Size Limits: Enforce limits (e.g., max 5 MB) to prevent abuse and slow processing.

- Storage Cleanup: Delete uploaded files after processing to save disk space and maintain privacy.

- Security: Protect upload endpoints from malicious files with scanning or sandboxing.

- Multi-language Support: Consider libraries that can handle resumes in multiple languages if applicable.

- Text Normalization: Remove non-textual elements, fix encoding issues, and normalize whitespace before AI input.

- Asynchronous Processing: For large files or batch uploads, consider asynchronous tasks (Celery, RQ) to avoid blocking requests.
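As an illustration of the text normalization point, here is a minimal sketch using only the standard library. The function name normalize_resume_text and the max_chars default are assumptions chosen for the example, not values from this guide; tune the limit to your model's context budget.

```python
import re
import unicodedata


def normalize_resume_text(text, max_chars=12000):
    """Clean extracted resume text before it is placed in a prompt."""
    # Fold ligatures, non-breaking spaces and similar variants into plain forms
    text = unicodedata.normalize("NFKC", text)
    # Drop control characters (category Cc) but keep newlines and tabs
    text = "".join(
        ch for ch in text if ch in "\n\t" or unicodedata.category(ch) != "Cc"
    )
    # Collapse runs of spaces and tabs into a single space
    text = re.sub(r"[ \t]+", " ", text)
    # Remove spaces that hug a line break
    text = re.sub(r" ?\n ?", "\n", text)
    # Allow at most one blank line between blocks
    text = re.sub(r"\n{3,}", "\n\n", text)
    # Truncate as a crude guard on prompt size
    return text.strip()[:max_chars]
```

Character-based truncation is only a rough proxy for token count, but it keeps an unusually long upload from inflating the prompt.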

5. Step 2: Preparing the Job Description

The job description is the foundational reference that the AI-powered screener uses to evaluate candidate resumes. For the AI model to accurately assess how well a candidate fits a position, the job description must be clearly articulated and structured to provide precise, relevant context.

Breaking Down the Job Description

To ensure comprehensive evaluation, the job description should be segmented into clear, well-defined sections, including:

1. Role Overview

Provide a brief but specific summary of the role’s purpose and impact within the organization. This helps the AI understand the broader context of the position.

Example:

“Seeking a Software Developer responsible for designing scalable backend services and integrating cloud infrastructure.”

2. Technical Requirements

List all necessary technical skills and tools, specifying proficiency levels when possible. Distinguish between mandatory and desirable technologies.

Example:

- Required: Python (advanced), RESTful APIs, Docker, AWS

- Preferred: Kubernetes, Terraform, Jenkins, Agile methodologies

Specifying proficiency or years of experience helps the AI weigh candidates’ skills accurately.

3. Soft Skills and Competencies

Highlight interpersonal skills and attributes critical for success in the role, such as communication, problem-solving, or teamwork. While harder to quantify, including these guides the AI to look for relevant cues.

Example:

“Strong collaboration skills with experience working in cross-functional teams.”

4. Educational Background and Certifications

Detail degree requirements or professional certifications that may influence candidate ranking.

Example:

“Bachelor’s degree in Computer Science or related field preferred; AWS Certified Developer a plus.”

5. Responsibilities and Deliverables

Clarify the core duties and expected outcomes for the role, allowing the AI to match experience and achievements in resumes to job expectations.

Example:

“Develop and maintain microservices, optimize cloud infrastructure, and participate in code reviews.”

Structuring the Job Description for AI

Natural language models perform best when given clear, concise, and structured prompts. When preparing the job description for AI analysis:

- Use bullet points or numbered lists for clarity.

- Avoid vague language or overly complex sentences.

- Separate required and preferred qualifications explicitly.

- Provide examples or metrics where applicable (e.g., “At least 2 years of experience managing CI/CD pipelines”).

Example Structured Job Description

Job Title: Backend Software Developer

Role Summary:

Design and implement scalable backend APIs and manage cloud deployments.

Technical Skills Required:

- Python (3+ years experience)

- RESTful API development

- Docker and container orchestration

- AWS cloud services

Preferred Skills:

- Kubernetes

- Infrastructure as Code (Terraform)

- CI/CD pipelines (Jenkins, GitHub Actions)

- Agile/Scrum methodologies

Soft Skills:

- Strong problem-solving skills

- Effective communication

- Team collaboration

Education & Certifications:

- Bachelor’s degree in Computer Science or related field

- AWS Certified Developer (preferred)

Responsibilities:

- Develop backend services and APIs

- Maintain cloud infrastructure and deployments

- Collaborate with frontend and DevOps teams

- Participate in code reviews and mentoring
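If job descriptions are stored as structured data, the layout above can be generated rather than typed by hand. The following is a sketch under that assumption: the dictionary keys (title, summary, required_skills, and so on) and the function name format_job_description are hypothetical, chosen to mirror the section headings in the example.

```python
# Hypothetical (heading, dictionary key) pairs mirroring the example layout
SECTIONS = [
    ("Technical Skills Required", "required_skills"),
    ("Preferred Skills", "preferred_skills"),
    ("Soft Skills", "soft_skills"),
    ("Education & Certifications", "education"),
    ("Responsibilities", "responsibilities"),
]


def format_job_description(job):
    """Render a job dictionary as the sectioned plain text shown above."""
    lines = [f"Job Title: {job['title']}", "", "Role Summary:", job["summary"]]
    for heading, key in SECTIONS:
        items = job.get(key) or []
        if items:  # skip sections that have no entries
            lines.append("")
            lines.append(f"{heading}:")
            lines.extend(f"- {item}" for item in items)
    return "\n".join(lines)
```

Because required and preferred skills live under separate keys, the distinction recommended earlier is preserved automatically in every generated description.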

Incorporating the Job Description into AI Prompts

The structured job description becomes part of the prompt sent to OpenAI’s API, providing context for each candidate evaluation. This allows the AI to perform semantic matching—understanding the meaning behind resume content and assessing suitability holistically.

Prompt template example:

    prompt = f"""
    You are an expert hiring manager. Evaluate the candidate's resume below against the job description.
    Provide a summary of matched skills, relevant experience, and a suitability score from 0 to 100.

    Job Description:
    {job_description}

    Candidate Resume:
    {resume_text}
    """

By framing the prompt this way, the AI focuses on relevant criteria, reducing noise and improving consistency in scoring.

Why Proper Job Description Preparation Matters

- Improves AI Accuracy: Clear, detailed descriptions help the AI differentiate between qualified and unqualified candidates more effectively.

- Ensures Consistency: Structured input reduces variability in AI responses across candidates.

- Facilitates Fair Evaluation: Explicit criteria help avoid implicit bias and ensure objective candidate ranking.

- Makes Results Interpretable: AI-generated summaries tied to job requirements help recruiters understand scoring rationales.

6. Step 3: Integrating OpenAI API for Resume Analysis

Integrating the OpenAI API into your Flask application allows you to leverage advanced natural language processing capabilities to analyze and evaluate candidate resumes against your prepared job description. This step transforms raw text data into actionable insights by assessing candidate suitability, matching skills, and generating scores that help prioritize applicants.

Setting Up OpenAI API Access

Before making API calls, ensure you have:

- An OpenAI API key from your OpenAI account dashboard.

- The openai Python package, installed via pip (pip install openai).

- Environment variables or secure methods to store your API key without hardcoding it into your codebase.

Example setup using environment variables:

    import os

    import openai

    openai.api_key = os.getenv("OPENAI_API_KEY")

Crafting Effective Prompts for Resume Evaluation

The core of integrating OpenAI lies in constructing prompts that guide the model to produce useful, structured responses. The prompt should include:

- The job description (structured as prepared earlier).

- The extracted resume text for analysis.

- Clear instructions for the AI to evaluate skills, experience, and provide a suitability score.

Example prompt:

    def create_prompt(job_description, resume_text):
        return f"""
    You are a professional hiring manager. Based on the following job description, evaluate the candidate's resume.

    Job Description:
    {job_description}

    Candidate Resume:
    {resume_text}

    Please provide:
    1. A summary of matched key skills.
    2. Relevant experience highlights.
    3. A suitability score from 0 to 100 indicating how well the candidate fits the role.
    """

Making the OpenAI API Call

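Older tutorials call openai.Completion.create with the text-davinci-003 model. That method was removed in version 1.0 of the openai Python package and the model is no longer served, so the sample here uses the Chat Completions endpoint instead. The model name gpt-4o-mini is only an example; substitute your preferred chat model.

Sample code:

    from openai import OpenAI

    client = OpenAI()  # reads OPENAI_API_KEY from the environment

    def analyze_resume_with_openai(job_description, resume_text):
        prompt = create_prompt(job_description, resume_text)
        response = client.chat.completions.create(
            model="gpt-4o-mini",  # example model; use any chat model available to you
            messages=[{"role": "user", "content": prompt}],
            max_tokens=250,
            temperature=0,
            top_p=1,
            frequency_penalty=0,
            presence_penalty=0,
        )
        # The reply text lives on the first choice's message
        analysis = response.choices[0].message.content.strip()
        return analysis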

Parsing AI Responses

The API response contains a natural language text summary with skills, experience, and a score. To automate candidate ranking, you’ll need to extract the numeric suitability score from this text. This can be done with simple regular expressions or text processing.

Example regex snippet:

    import re

    def extract_score(text):
        match = re.search(r"score\s*[:\-]?\s*(\d+)", text, re.IGNORECASE)
        if match:
            return int(match.group(1))
        return 0  # Default or fallback score

Integrating with Your Flask Endpoint

Once you have the resume text and job description, call the OpenAI API to get the analysis:

    @app.route('/analyze_resume', methods=['POST'])
    def analyze_resume():
        # silent=True yields None instead of raising when the body is not valid JSON
        data = request.get_json(silent=True) or {}
        job_description = data.get('job_description')
        resume_text = data.get('resume_text')

        if not job_description or not resume_text:
            return jsonify({'error': 'Missing job description or resume text'}), 400

        analysis = analyze_resume_with_openai(job_description, resume_text)
        score = extract_score(analysis)
        return jsonify({'analysis': analysis, 'score': score}), 200

Best Practices for API Integration

- Limit token usage by controlling prompt length and max tokens to manage costs.

- Use temperature=0 for more consistent responses; outputs can still vary slightly between calls.

- Implement retries and error handling to handle API rate limits or transient failures.

- Secure API keys using environment variables or vault services.

- Test and refine prompts iteratively to improve AI output relevance.
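The retry advice above can be sketched as a small wrapper with exponential backoff. This is a generic illustration rather than anything specific to the OpenAI client: call_with_retries and its parameters are hypothetical names, and production code should catch only the client's rate-limit and timeout exceptions instead of every Exception.

```python
import random
import time


def call_with_retries(func, max_attempts=4, base_delay=1.0, sleep=time.sleep):
    """Call func(); on failure wait base_delay * 2**attempt plus jitter, then retry."""
    for attempt in range(max_attempts):
        try:
            return func()
        except Exception:  # narrow this to transient API errors in real code
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error
            # Delay doubles each attempt; jitter spreads out simultaneous retries
            delay = base_delay * (2 ** attempt) + random.uniform(0, 0.1)
            sleep(delay)
```

A call site might look like call_with_retries(lambda: analyze_resume_with_openai(job_description, resume_text)). The sleep parameter exists so the waiting can be replaced in tests.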

By integrating OpenAI API with thoughtfully crafted prompts, your Flask app can transform unstructured resume data into meaningful candidate evaluations, forming the core of your AI-powered screening MVP.

7. Step 4: Scoring and Ranking Candidates

How the Suitability Score is Calculated

When you send the candidate’s resume and the job description to the OpenAI API, the AI generates a natural language response that includes:

- A summary of the candidate’s matched skills

- Relevant experience highlights

- A numerical suitability score (usually from 0 to 100) indicating how well the candidate fits the job requirements

This score is explicitly requested in the prompt, so the AI returns it as part of its response.

Example prompt excerpt requesting the score:

Please provide:

1. A summary of matched key skills.

2. Relevant experience highlights.

3. A suitability score from 0 to 100 indicating how well the candidate fits the role.

Example AI response snippet:

Matched Skills: Python, REST APIs, Docker, AWS

Relevant Experience: 3 years developing backend services using AWS and Docker

Suitability Score: 85

Extracting the Score Programmatically

You use text processing techniques (e.g., regular expressions) to extract the numeric score from the AI’s response string.

Example Python code to extract the score:

    import re

    def extract_score(text):
        # Search for "Suitability Score:" followed by a number (0-100)
        match = re.search(r"Suitability Score\s*[:\-]?\s*(\d{1,3})", text, re.IGNORECASE)
        if match:
            score = int(match.group(1))
            # Clamp score between 0 and 100
            return max(0, min(score, 100))
        return 0  # Default score if not found

Normalizing Scores

Since the AI score is already requested between 0 and 100, minimal normalization is needed. However, if you use multiple AI models or different scoring strategies, normalize all scores to a consistent 0-100 scale to allow fair ranking.
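For the multi-model case, a linear rescale is one simple way to bring a score from another range onto the 0-100 scale. The helper below is a sketch; rescale_score and its parameter names are illustrative, and it assumes you know the minimum and maximum each source can produce.

```python
def rescale_score(value, source_min, source_max):
    """Map a score from [source_min, source_max] onto the 0-100 scale."""
    if source_max <= source_min:
        raise ValueError("source_max must be greater than source_min")
    # Clamp first so out-of-range inputs cannot produce scores outside 0-100
    clamped = max(source_min, min(value, source_max))
    return round((clamped - source_min) / (source_max - source_min) * 100)
```

For example, a rating of 4 on a 1-5 scale becomes 75, which can then be compared directly with scores that were requested on the 0-100 scale.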

Ranking Candidates by Score

Once scores are extracted, you store each candidate’s score in your MySQL database. To generate a ranked list:

- Query candidates ordered by descending suitability score:

      SELECT * FROM candidates ORDER BY score DESC, screened_at ASC;

- The second sort key (screened_at ASC) breaks ties, prioritizing candidates screened earlier.
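To see the tie-breaking behaviour without a database server, the same ORDER BY clause can be tried against an in-memory SQLite table. SQLite stands in for MySQL here purely for convenience, and the three candidates and their timestamps are made-up sample data.

```python
import sqlite3

# In-memory database used only for this demonstration
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE candidates (name TEXT, score INTEGER, screened_at TEXT)")
conn.executemany(
    "INSERT INTO candidates VALUES (?, ?, ?)",
    [
        ("Ana", 85, "2024-05-02 09:00:00"),
        ("Ben", 92, "2024-05-03 14:30:00"),
        ("Cy", 85, "2024-05-01 16:45:00"),
    ],
)

# Highest score first; equal scores fall back to the earlier screening time
ranked = [
    row[0]
    for row in conn.execute(
        "SELECT name FROM candidates ORDER BY score DESC, screened_at ASC"
    )
]
print(ranked)  # ['Ben', 'Cy', 'Ana']
```

Ana and Cy share a score of 85, so Cy, who was screened a day earlier, is listed ahead of Ana.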

Why This Ranking Matters

- Candidates with higher scores are more likely to meet the job criteria and should be prioritized for interviews.

- Scores help recruiters quickly filter and shortlist without reading every resume manually.

- Combining score with metadata (e.g., experience years) improves decision-making in tie-breaker situations.

Handling Edge Cases

- Missing or ambiguous scores: If AI doesn’t provide a score, default to zero or flag for manual review.

- Multiple AI outputs: Aggregate scores (e.g., average) if you analyze resumes multiple times with different prompts or models.
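Both edge cases can be handled in a few lines. The sketch below takes the "flag for manual review" route instead of defaulting to zero, so an unparseable response is not mistaken for a weak candidate; parse_score and aggregate_scores are hypothetical helper names, and the pattern assumes the "Suitability Score: N" format shown earlier.

```python
import re
from statistics import mean

SCORE_PATTERN = re.compile(
    r"Suitability Score\s*[:\-]?\s*(\d{1,3})\b", re.IGNORECASE
)


def parse_score(analysis):
    """Return (score, needs_review); missing or out-of-range scores are flagged."""
    match = SCORE_PATTERN.search(analysis or "")
    if not match:
        return None, True
    score = int(match.group(1))
    if score > 100:
        return None, True
    return score, False


def aggregate_scores(analyses):
    """Average the usable scores from several analyses of the same resume."""
    scores = [s for s, flagged in map(parse_score, analyses) if not flagged]
    if not scores:
        return None, True  # nothing usable: send to manual review
    return round(mean(scores)), False
```

Returning a review flag alongside the score lets the dashboard show "needs review" rather than a misleading 0.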

Example: Full Flow for Scoring and Ranking

    # extract_resume_text() and save_candidate_to_db() are helpers assumed to be defined elsewhere
    def process_and_rank_candidates(candidates, job_description, db_connection):
        scored_candidates = []
        for candidate in candidates:
            text = extract_resume_text(candidate['file_path'])
            analysis = analyze_resume_with_openai(job_description, text)
            score = extract_score(analysis)

            # Save to DB
            save_candidate_to_db(db_connection, candidate['name'], text, analysis, score)
            scored_candidates.append({'name': candidate['name'], 'score': score, 'analysis': analysis})

        # Sort locally if needed
        ranked = sorted(scored_candidates, key=lambda x: x['score'], reverse=True)
        return ranked

This explicit scoring and ranking process makes your AI screener both transparent and actionable, empowering recruiters to efficiently identify top candidates.

8. Step 5: Building the Screening Pipeline

To create an efficient AI-powered job application screener MVP, it’s essential to automate the entire screening process—from file upload to candidate ranking—within a cohesive pipeline. This ensures scalability, minimizes manual intervention, and speeds up recruiter decision-making.

Automating the Workflow

The screening pipeline consists of these sequential stages:

- File Upload: Candidates submit resumes via the Flask web interface or API endpoint.

- File Validation: The system checks file type, size, and integrity.

- Text Extraction: Using pdfplumber or python-docx, resumes are converted into clean text.

- AI Analysis: The extracted text and the job description are sent to the OpenAI API for evaluation.

- Score Extraction: The AI’s response is parsed to extract the candidate’s suitability score.

- Data Storage: Candidate information, analysis, and scores are stored in the MySQL database.

- Ranking & Presentation: Candidates are sorted by score and presented to recruiters via a basic HTML/CSS/JS dashboard.

Designing the Pipeline in Flask

Each stage corresponds to a function or API route within your Flask application. Here’s a simplified flow:

    # extract_text(), get_current_job_description() and store_candidate_data()
    # are helpers assumed to be defined elsewhere in the application
    @app.route('/submit_application', methods=['POST'])
    def submit_application():
        # Step 1 & 2: Receive and validate file upload
        file = request.files['resume']
        if not allowed_file(file.filename):
            return jsonify({'error': 'Unsupported file type'}), 400

        filename = secure_filename(file.filename)
        file_path = os.path.join(app.config['UPLOAD_FOLDER'], filename)
        file.save(file_path)

        # Step 3: Extract text
        resume_text = extract_text(file_path)

        # Step 4: AI analysis
        job_description = get_current_job_description()
        ai_response = analyze_resume_with_openai(job_description, resume_text)

        # Step 5: Extract score
        score = extract_score(ai_response)

        # Step 6: Store data
        store_candidate_data(filename, resume_text, ai_response, score)

        return jsonify({'message': 'Application processed', 'score': score}), 200

Handling Batch Processing

For multiple applications, implement batch processing to queue and process resumes asynchronously using tools like Celery or RQ. This prevents blocking web requests and improves responsiveness.

Rate Limiting and Cost Control

Since OpenAI API usage may incur costs and rate limits:

- Implement request throttling and retries.

- Cache repeated requests when possible.

- Optimize prompt length and token usage to reduce the cost of each API call.
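Caching repeated requests can be as simple as keying results on a hash of the inputs. The sketch below keeps the cache in a module-level dictionary, which is an assumption made for brevity; a shared store would be needed once several worker processes are involved. The name cached_analysis is illustrative, and analyze is any callable with the same signature as analyze_resume_with_openai.

```python
import hashlib

# Process-local cache: maps input hash -> analysis text
_analysis_cache = {}


def cached_analysis(job_description, resume_text, analyze):
    """Return a stored analysis for identical inputs instead of calling the API again."""
    # The NUL separator keeps ("ab", "c") and ("a", "bc") from colliding
    key = hashlib.sha256(
        f"{job_description}\x00{resume_text}".encode("utf-8")
    ).hexdigest()
    if key not in _analysis_cache:
        _analysis_cache[key] = analyze(job_description, resume_text)
    return _analysis_cache[key]
```

With this in place, a candidate who uploads the same resume twice for the same job triggers only one paid API call.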

Frontend Integration

The frontend (built with basic HTML, CSS, and JS) polls or queries the backend for updated ranked candidate lists, displaying:

- Candidate names

- Suitability scores

- Key skill highlights from AI analysis

- Links to full resume or detailed AI report

This simple interface allows recruiters to quickly identify top applicants.

Summary of Pipeline Benefits

- Automation: Eliminates manual screening bottlenecks.

- Scalability: Supports growing applicant volumes.

- Consistency: Standardizes candidate evaluation.

- Transparency: Stores AI feedback alongside candidate data for review.

9. Step 6: Presenting Results to Recruiters

Presenting the screening results in a clear and actionable way is crucial for recruiter adoption and efficient decision-making. Since this MVP uses basic HTML, CSS, and JavaScript, focus on simplicity, usability, and clarity.

Key Elements to Display

- Candidate Name: Easily identifiable label for each applicant.

- Suitability Score: Prominently displayed score (0-100) indicating fit to the job.

- Matched Skills Summary: AI-generated summary highlighting key skills and experiences relevant to the job description.

- Detailed Analysis Link: Option to view the full AI-generated text analysis or candidate resume.

- Sorting and Filtering: Ability to sort candidates by score or filter by criteria like score thresholds.

Sample HTML Structure

    <table id="candidateTable">
      <thead>
        <tr>
          <th>Name</th>
          <th>Suitability Score</th>
          <th>Key Skills</th>
          <th>Details</th>
        </tr>
      </thead>
      <tbody>
        <!-- Rows populated dynamically -->
      </tbody>
    </table>

Basic JavaScript for Dynamic Data Rendering

Fetch candidate data from your Flask backend API and populate the table:

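    fetch('/api/get_ranked_candidates')
      .then(response => response.json())
      .then(data => {
        const tbody = document.querySelector('#candidateTable tbody');
        tbody.innerHTML = '';
        data.forEach(candidate => {
          const row = document.createElement('tr');
          // Use textContent so resume-derived text is never parsed as HTML
          [candidate.name, candidate.score, candidate.key_skills].forEach(value => {
            const cell = document.createElement('td');
            cell.textContent = value;
            row.appendChild(cell);
          });
          const button = document.createElement('button');
          button.textContent = 'View';
          button.addEventListener('click', () => showDetails(candidate.id));
          const detailsCell = document.createElement('td');
          detailsCell.appendChild(button);
          row.appendChild(detailsCell);
          tbody.appendChild(row);
        });
      });

    function showDetails(candidateId) {
      // Fetch and display detailed analysis in a modal or separate page
    }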

Backend Endpoint Example for Fetching Candidates

    @app.route('/api/get_ranked_candidates', methods=['GET'])
    def get_ranked_candidates():
        cursor = db_connection.cursor(dictionary=True)
        cursor.execute("SELECT id, name, score, analysis FROM candidates ORDER BY score DESC")
        results = cursor.fetchall()

        # Extract key skills from analysis for display, or parse stored summary
        for candidate in results:
            candidate['key_skills'] = extract_key_skills(candidate['analysis'])

        return jsonify(results)
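The endpoint relies on an extract_key_skills helper that is not defined in this guide. One possible sketch, assuming the analysis contains a "Matched Skills: ..." line like the example response in Step 4, is shown here; the limit parameter is an added assumption to keep the table column short.

```python
import re


def extract_key_skills(analysis, limit=5):
    """Pull the comma-separated skills from a 'Matched Skills:' line, if present."""
    # '.+' stops at the end of the line, so only the skills line is captured
    match = re.search(r"Matched Skills\s*[:\-]\s*(.+)", analysis or "", re.IGNORECASE)
    if not match:
        return ""
    skills = [s.strip() for s in match.group(1).split(",") if s.strip()]
    return ", ".join(skills[:limit])
```

If the model phrases the heading differently, the helper returns an empty string, so the dashboard shows a blank cell rather than failing.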

User Experience Considerations

- Highlight top candidates visually (e.g., color coding scores above a threshold).

- Allow recruiters to search/filter by score or skill keywords.

- Ensure mobile responsiveness for accessibility on various devices.

- Provide export options (CSV or PDF) for reporting or sharing.
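For the CSV export option, Python's csv module is enough. The sketch below assumes each candidate is a dictionary with name, score, and key_skills fields, as returned by the ranked-candidates endpoint; candidates_to_csv is a hypothetical helper name.

```python
import csv
import io


def candidates_to_csv(candidates):
    """Serialize ranked candidates to CSV text, ignoring fields not listed."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer,
        fieldnames=["name", "score", "key_skills"],
        extrasaction="ignore",  # skip extra keys such as the full analysis
    )
    writer.writeheader()
    writer.writerows(candidates)
    return buffer.getvalue()
```

The returned string can be sent from a Flask route with a text/csv content type so recruiters can download it directly.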

Benefits of This Presentation Approach

- Quick Insights: Recruiters see scores and matched skills upfront.

- Transparency: Detailed AI analysis is just a click away for deeper review.

- Ease of Use: Minimalistic design reduces training time and encourages adoption.

- Flexibility: Basic frontend can be extended or integrated with existing ATS systems.

10. Best Practices and Tips for Improving the Screener

Building a minimum viable product (MVP) for an AI-powered job application screener is just the start. To enhance its accuracy, reliability, and usability, keep these best practices and tips in mind as you iterate and expand your solution:

1. Refine Your Prompts Continuously

The quality of AI responses depends heavily on prompt design. Experiment with prompt wording, structure, and examples to guide the AI toward consistent, actionable outputs. Consider including positive and negative examples within the prompt for better context.

2. Limit Prompt and Response Length

To control costs and API usage, keep prompts concise and set reasonable limits on maximum tokens in the API request. Trim unnecessary text from resumes before sending, focusing on relevant sections like skills and experience.

3. Handle Edge Cases and Errors Gracefully

Implement robust error handling for API failures, timeouts, or unexpected responses. If the AI fails to return a score or analysis, fall back to manual review or default scores to avoid blocking the screening process.

4. Incorporate Human-in-the-Loop Feedback

Allow recruiters to provide feedback on AI evaluations, flag errors, or override scores. Use this data to retrain or fine-tune your AI model, gradually improving screening accuracy over time.

5. Ensure Data Privacy and Compliance

Candidate data is sensitive. Use secure storage, encrypted communication, and comply with regulations like GDPR and CCPA. Inform candidates about AI usage and get consent when necessary.

6. Optimize for Scalability

As application volume grows, consider implementing asynchronous processing queues (e.g., Celery with Redis) to handle file uploads, extraction, and AI calls without slowing down user experience.

7. Integrate with Applicant Tracking Systems (ATS)

Plan for integration with existing ATS platforms to avoid disrupting recruitment workflows. APIs and webhooks can help synchronize candidate data, scores, and statuses seamlessly.

8. Regularly Update Job Descriptions and Screening Criteria

Job requirements evolve; keep job descriptions and screening parameters current to maintain AI relevance. Automate updates where possible to reduce manual overhead.

9. Monitor AI Bias and Fairness

Periodically audit AI screening outcomes to detect and mitigate bias related to gender, ethnicity, age, or other protected attributes. Consider augmenting AI decisions with fairness-aware models or additional human review.

10. Enhance Candidate Experience

Communicate clearly with applicants about AI involvement. Provide timely status updates and personalize messages to maintain positive candidate engagement.

By following these best practices, your AI-powered job application screener will become more reliable, efficient, and trusted by both recruiters and candidates.

11. External Resources

To deepen your understanding and explore additional tools and concepts related to building AI-powered job application screeners, here are some valuable external resources:

OpenAI Documentation

- OpenAI API Reference — Official docs covering API endpoints, usage examples, and best practices.

Resume Parsing Tools and Libraries

- pdfplumber GitHub Repository — Advanced PDF text extraction library used for resume parsing.

- python-docx Documentation — Official docs for working with Microsoft Word documents in Python.

AI in Recruitment

- AI for Hiring — What’s Possible Today? — Article discussing AI applications and challenges in recruitment.

- Harvard Business Review: How AI is Changing Hiring — Insightful look at AI’s impact on recruitment processes.

Flask Framework

- Flask Official Documentation — Comprehensive guide to building web apps and APIs with Flask.

Candidate Experience and Fairness

- AI Fairness in Hiring: Principles and Practices — Research papers and discussions on ethical AI use in hiring.

Internal Link for Further Learning

For practical guidance on working with OpenAI API in Python, check out our detailed tutorial: How to Use OpenAI O1 and O1-Mini.

Conclusion

Building an AI-powered job application screener with the OpenAI API and Flask empowers recruiters to streamline their hiring process, reduce manual workload, and make smarter, data-driven decisions. By leveraging natural language processing for resume parsing, candidate evaluation, and automated scoring, your screener can rapidly analyze large applicant pools with accuracy and consistency.

This step-by-step guide has shown how to extract text from resumes, prepare structured job descriptions, integrate with OpenAI’s models, and present ranked candidates in a user-friendly interface. With thoughtful prompt engineering, structured architecture, and a focus on scalability, you can develop a reliable and extensible MVP that transforms how you approach hiring.

As you continue refining your solution, remember to:

- Incorporate recruiter feedback,

- Prioritize data privacy,

- Monitor for bias, and

- Continuously optimize your prompts and workflows.

AI in recruitment is no longer futuristic—it’s actionable today. Start with an MVP, iterate fast, and scale with confidence.
