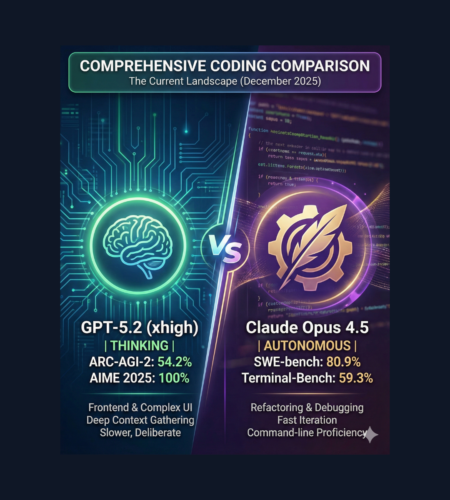

GPT-5.2 (xhigh) and Claude Opus 4.5 are the two strongest coding-capable language models available today, with nearly identical performance on core coding benchmarks but clear differences in reasoning style, speed, and developer workflows.

Claude Opus 4.5 excels at refactoring, debugging, and terminal-centric work, while GPT-5.2 shines in frontend development, mathematical reasoning, and complex multi-tool workflows.

This article compares both models using benchmarks, real developer feedback, pricing, and practical coding use cases to help you decide which model fits your workflow best.

TL;DR (For Google SGE & AI Search)

TL;DR: Claude Opus 4.5 is faster and stronger at refactoring, debugging, and terminal workflows, while GPT-5.2 (xhigh) excels at frontend development, abstract reasoning, and math-heavy coding tasks. Both score ~80% on SWE-bench, making them nearly tied for core coding performance.

What are GPT-5.2 (xhigh) and Claude Opus 4.5?

GPT-5.2 (xhigh) is OpenAI’s latest high-deliberation coding and reasoning model, designed to explore codebases, gather context, and reason deeply before writing code.

Claude Opus 4.5 is Anthropic’s flagship model, optimized heavily for coding tasks, long-context understanding, and fast iteration.

As of December 2025, the broader AI landscape includes Google’s Gemini 3 topping LMArena across many benchmarks—but coding remains the tightest race, with Claude Opus 4.5 and GPT-5.2 effectively trading wins.

Which model performs better on coding benchmarks?

Benchmark Summary:

Claude Opus 4.5 narrowly leads on SWE-bench and terminal-focused evaluations, while GPT-5.2 dominates abstract reasoning, mathematics, science, and professional task benchmarks. Overall coding performance is nearly tied.

| Benchmark | Claude Opus 4.5 | GPT-5.2 | Winner |

|---|---|---|---|

| SWE-bench Verified | 80.9% | 80.0% | Claude (slightly) |

| Terminal-Bench 2.0 | 59.3% | ~47.6% | Claude |

| ARC-AGI-2 (Reasoning) | 37.6% | 52.9–54.2% | GPT-5.2 |

| AIME 2025 (Math) | ~92.8% | 100% | GPT-5.2 |

| GPQA Diamond (Science) | ~87% | 93.2% | GPT-5.2 |

| GDPval (Professional Tasks) | 59.6% | 70.9% | GPT-5.2 |

Claude Opus 4.5 became the first model to break 80% on SWE-bench, while GPT-5.2 effectively matches it, confirming parity on core coding ability.

What do real developers say about GPT-5.2 vs Claude Opus?

Pro-Claude Opus 4.5 Sentiment

Many developers describe Claude as more “code-native” and faster for day-to-day work.

- “Claude and Claude Code in general is miles better than ChatGPT and Codex… Claude is basically all code optimized.”

- “Opus 4.5 beats 5.2 by a hundred points at least on LM Arena.”

- “For my data engineering tasks, Claude Sonnet and Opus outperformed 5.2 without a doubt.”

Pro-GPT-5.2 Sentiment

GPT-5.2 earns praise for deep debugging, tracing, and context awareness.

- “Fixed a complex data discrepancy bug that Opus 4.5 couldn’t fix in one shot.”

- “5.2 xhigh gets the context and implementation right every time.”

- “It’s super good at tracing bugs.”

The consensus: neither model is universally better, but each excels in different scenarios.

Where does Claude Opus 4.5 perform best?

Claude Opus 4.5 consistently performs best in:

- Refactoring and large-scale code cleanup

- Debugging existing codebases

- Terminal and command-line workflows

- Long-horizon autonomous coding tasks

- Rapid iteration and quick questions

Claude often begins writing code immediately, which can accelerate workflows—but occasionally leads to premature assumptions.

Where does GPT-5.2 (xhigh) perform best?

GPT-5.2 stands out in:

- Frontend and UI-heavy development

- Abstract reasoning and complex logic

- Math-heavy and algorithmic tasks

- Multi-file, multi-tool workflows

- Careful context exploration before coding

Unlike Claude, GPT-5.2 tends to read files, explore the codebase, and ask clarifying questions before making changes, which improves accuracy for complex systems.

How do speed and latency compare between the models?

Speed is one of the biggest practical differences.

- Claude Opus 4.5 is generally faster and more responsive for short prompts and quick iterations.

- GPT-5.2 Thinking / xhigh is noticeably slower, especially for simple tasks, due to deeper reasoning and context gathering.

That said, both models experience occasional latency issues depending on load, as reported by users.

How do GPT-5.2 and Claude Opus compare by programming language?

Based on developer feedback:

- Swift: Mixed results; Opus excels at one-shot tasks, GPT-5.2 handles edge cases better

- Python / Rust / Golang: GPT-5.2 produces cleaner code and suggests better typing

- TypeScript: GPT-5.2 consistently outperforms Claude

- Shell / CLI scripting: Claude Opus leads

Which model should you choose for coding?

Choose Claude Opus 4.5 if you:

- Do heavy refactoring or debugging

- Need fast iteration cycles

- Work extensively in terminals or CLI tools

- Run long-horizon autonomous coding tasks

- Prefer a model that dives in immediately

Choose GPT-5.2 (xhigh) if you:

- Build complex frontend or UI applications

- Need strong mathematical or abstract reasoning

- Work across large, multi-file codebases

- Want thorough context gathering before coding

- Don’t mind slower responses for higher deliberation

Many experienced developers now use both models together—for example, Claude for coding and GPT-5.2 for reviews or deep debugging.

Pricing Comparison

| Model | Input (per 1M tokens) | Output (per 1M tokens) |

|---|---|---|

| Claude Opus 4.5 | $5 | $25 |

| GPT-5.2 Thinking | $1.75 | $14 |

| GPT-5.2 Pro | ~$21 | Higher |

GPT-5.2 Pro is significantly more expensive, often costing more than four times Claude Opus for comparable usage.

Frequently Asked Questions

Which is better for coding: GPT-5.2 or Claude Opus 4.5?

Both are nearly tied on core coding benchmarks. Claude is better for refactoring and debugging, while GPT-5.2 excels in frontend and reasoning-heavy tasks.

Is Claude Opus still the best coding model?

Claude Opus 4.5 remains one of the best coding models available, though GPT-5.2 has closed the gap significantly.

Why is GPT-5.2 slower than Claude?

GPT-5.2 performs deeper reasoning and context exploration before writing code, increasing latency but improving accuracy.

Can developers benefit from using both models?

Yes. Many developers alternate between Claude and GPT-5.2 depending on the task.

Final Takeaway

GPT-5.2 (xhigh) and Claude Opus 4.5 are extremely close in coding ability, each excelling in different areas.

Claude Opus offers faster iteration and superior refactoring, while GPT-5.2 delivers stronger reasoning and frontend capabilities.

For serious developers, the most effective approach is often using both models strategically rather than choosing just one.

Comments