On September 12, 2024, OpenAI launched a groundbreaking new series of models called o1. These models represent a major step forward in artificial intelligence, specifically in solving complex reasoning tasks across science, coding, and math. Designed to think more deeply before providing an answer, the o1 series includes two versions: o1-preview and o1-mini. Each is tailored for specific needs, balancing power and cost-efficiency. Let’s dive into what makes the o1 series so unique, how it works, and how it compares to previous models like GPT-4o.

What is the OpenAI o1 Model?

The o1 series is OpenAI’s next leap in artificial intelligence, designed to reason more effectively through problems. Traditional models like GPT-4o are great at generating human-like text, but they often struggle with truly complex, multi-step reasoning tasks. The o1 models, on the other hand, are trained to spend more time thinking before answering, making them much more powerful for tackling difficult problems.

The o1 series is focused on:

- Mathematics: Handling problems from basic math to high-level competition problems like those in the International Mathematical Olympiad (IMO).

- Coding: Generating, understanding, and debugging complicated code for a variety of tasks.

- Science: Solving tough questions in physics, chemistry, biology, and more.

How Does the o1 Model Work?

Unlike previous models, o1 has been trained to think in steps, similar to how a person would break down a complicated problem. This involves:

- Chain-of-Thought Reasoning: The model uses an internal process to refine its approach before arriving at a solution, improving the accuracy and depth of responses.

- Error Correction: The model can recognize when it makes a mistake and adjust its strategy accordingly, leading to better overall performance.

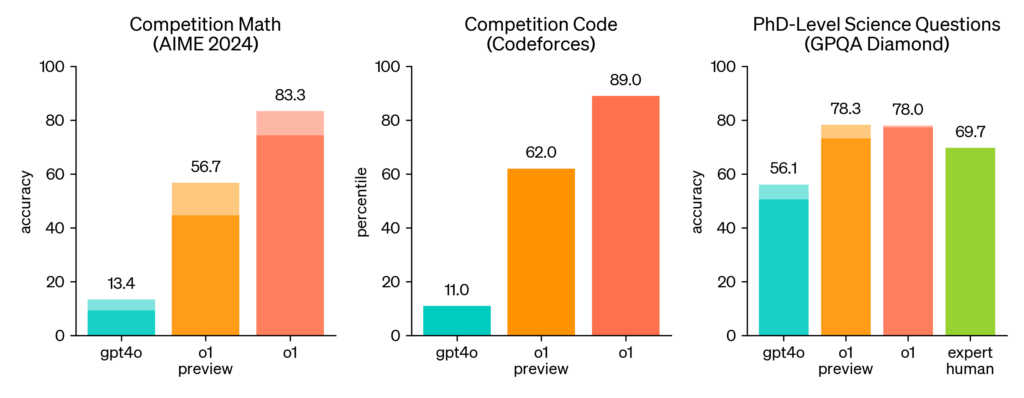

For instance, on a qualifying exam for the International Mathematical Olympiad (IMO), GPT-4o solved only 13% of the problems, while o1 solved 83% of the same problems. In Codeforces coding competitions, the o1 model scored in the top 11% of participants.
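The benchmark figures in this article are quoted in two forms, as a percentile rank and as a "top X%" placement, so a small conversion helps when comparing them. The sketch below uses only the numbers quoted above; the function and variable names are illustrative and not part of any OpenAI tooling.

```python
def top_percent_from_percentile(percentile: int) -> int:
    """Convert a percentile rank (e.g. the 89th) into a 'top X%' figure."""
    if not 0 <= percentile <= 100:
        raise ValueError("percentile must be between 0 and 100")
    return 100 - percentile


# Solve rates on the IMO qualifying exam, in percent, as quoted in the article.
gpt4o_solved = 13
o1_solved = 83

improvement_points = o1_solved - gpt4o_solved   # gap in percentage points
improvement_ratio = o1_solved / gpt4o_solved    # how many times more problems solved

print(top_percent_from_percentile(89))  # 11
print(improvement_points)               # 70
print(round(improvement_ratio, 1))      # 6.4
```

Read this way, scoring in the top 11% on Codeforces is the same as reaching the 89th percentile, and the jump from 13% to 83% is a gain of 70 percentage points, or roughly 6.4 times as many problems solved.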

Comparing o1-preview and o1-mini: Power vs. Efficiency

The o1 series includes two versions—o1-preview and o1-mini—each serving different needs based on the complexity of tasks and computational resources.

1. o1-preview: Powerhouse for Complex Reasoning

The o1-preview model is the full-fledged version of OpenAI’s latest reasoning engine. It excels in tasks that require deep thinking and multi-step solutions, making it ideal for:

- Researchers in science, who need to solve advanced problems like those in quantum physics or molecular biology.

- Mathematicians tackling complex formulas and problems, such as those found in the IMO.

- Developers working on highly intricate code with multiple dependencies.

Strengths of o1-preview:

- Superior Performance: On a qualifying exam for the International Mathematical Olympiad, o1-preview solved 83% of the problems, compared to GPT-4o’s 13%. In Codeforces coding competitions, it ranked in the 89th percentile.

- Advanced Reasoning: Whether it’s generating step-by-step solutions in coding or scientific research, o1-preview has the ability to handle highly sophisticated tasks.

Limitations:

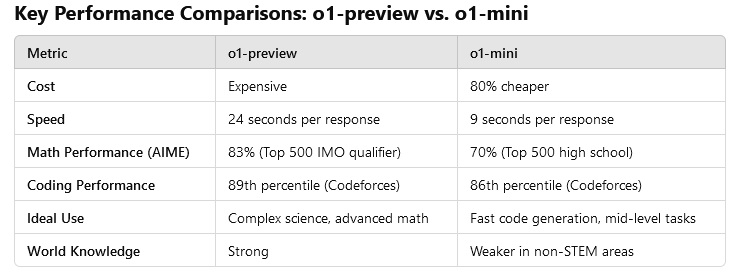

- Cost and Speed: While o1-preview is incredibly powerful, it’s more expensive and takes longer to respond due to the increased computational cost. For many applications, this could be overkill, particularly when only basic reasoning or less complex tasks are required.

2. o1-mini: Fast, Efficient, and Cost-Effective

For users who need reasoning capabilities but can’t justify the cost or latency of o1-preview, OpenAI offers o1-mini. This smaller model is 80% cheaper to run and responds much faster, making it ideal for:

- Developers looking to debug or generate code quickly without requiring deep knowledge of other fields.

- Students or educators needing assistance in solving math or science problems without the high cost associated with larger models.

- Companies looking for fast, smart solutions in areas like cybersecurity, where quick and accurate problem-solving is essential.

Strengths of o1-mini:

- Cost-Efficiency: o1-mini costs just 20% of what o1-preview costs, making it far more affordable for regular use.

- Faster Response Times: While o1-preview can take upwards of 24 seconds to generate a response, o1-mini delivers answers in around 9 seconds, which suits applications that need fast turnarounds.

- Competitive Reasoning Ability: Despite being a smaller version, o1-mini performs impressively. It solved 70% of the problems in the AIME math competition, placing it among the top 500 high-school math students in the U.S. It also ranked in the 86th percentile of programmers on Codeforces, making it a strong choice for coding tasks.
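To see what the cost and latency figures mean for a real workload, here is a rough back-of-the-envelope sketch. The 20% cost ratio and the 24-second and 9-second response times come from the figures above; the per-request price is a placeholder, since this article does not quote actual pricing, and `compare_models` is a hypothetical helper name.

```python
# Ratios quoted in the article; the per-request price is a placeholder.
MINI_COST_FRACTION = 0.20   # o1-mini costs 20% of o1-preview
PREVIEW_LATENCY_S = 24      # approximate seconds per o1-preview response
MINI_LATENCY_S = 9          # approximate seconds per o1-mini response


def compare_models(requests: int, preview_cost_per_request: float) -> dict:
    """Estimate cost and back-to-back response time for both models."""
    preview_cost = requests * preview_cost_per_request
    mini_cost = preview_cost * MINI_COST_FRACTION
    return {
        "preview_cost": round(preview_cost, 2),
        "mini_cost": round(mini_cost, 2),
        "savings": round(preview_cost - mini_cost, 2),
        "preview_seconds": requests * PREVIEW_LATENCY_S,
        "mini_seconds": requests * MINI_LATENCY_S,
    }


# Hypothetical workload: 1,000 requests at a placeholder price of 1.00 each.
result = compare_models(1000, 1.00)
print(result)
```

Whatever the real price turns out to be, the ratio holds: the same workload costs one fifth as much on o1-mini, and sequential responses finish in well under half the time.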

Limitations of o1-mini:

- Less World Knowledge: While o1-mini excels in tasks that require reasoning, it struggles with more general knowledge questions or tasks outside of STEM fields. For instance, it doesn’t perform as well as o1-preview or GPT-4o when handling non-technical questions or factual knowledge.

Safety: A Top Priority

One of the most critical aspects of the o1 model series is its enhanced safety measures. OpenAI has implemented a new safety training method, enabling the o1 models to reason about their safety guidelines, applying them more effectively. This means that the model is better at:

- Refusing unsafe requests: On one of OpenAI’s hardest jailbreaking tests, which measures how well a model keeps following its safety rules when users try to bypass them, o1-preview scored 84 on a scale of 0 to 100. By comparison, GPT-4o scored just 22.

- Resisting Jailbreak Attempts: Users trying to get around safety rules (known as “jailbreaking”) will find o1 much more resistant than previous models.

OpenAI has also partnered with U.S. and U.K. AI Safety Institutes, granting them early access to test and evaluate the models’ safety performance, ensuring rigorous checks before releasing the models to the public.

How to Access the OpenAI o1 Series

Here’s how different groups can access the o1-preview and o1-mini models:

- ChatGPT Plus and Team Users: Can access o1-preview and o1-mini from launch day. Weekly usage limits are 30 messages for o1-preview and 50 messages for o1-mini.

- ChatGPT Enterprise and Edu Users: Both models will be available the week after launch.

- API Users: Developers in API usage tier 5 can start using the models from launch day with a rate limit of 20 RPM (requests per minute). At launch, the API lacks certain features like function calling and streaming, but OpenAI is working to improve this.

OpenAI is also planning to bring o1-mini access to ChatGPT Free users in the future.
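For developers planning around the API limits listed above, here is a minimal sketch of a client-side limiter that stays under 20 requests per minute, plus a request payload that avoids the features missing at launch. `RpmLimiter` and `build_request` are hypothetical helper names. The payload shape assumes the standard chat completions format (a model name plus a list of messages), and no network call is made here.

```python
import time
from collections import deque


class RpmLimiter:
    """Sliding-window limiter: at most `rpm` requests in any 60-second window."""

    def __init__(self, rpm: int = 20, clock=time.monotonic, sleep=time.sleep):
        self.rpm = rpm
        self.clock = clock   # injectable so the limiter can be tested without waiting
        self.sleep = sleep
        self.sent = deque()  # timestamps of requests in the current window

    def wait_time(self) -> float:
        """Seconds to wait before the next request is allowed (0.0 if none)."""
        now = self.clock()
        # Drop timestamps that have aged out of the 60-second window.
        while self.sent and now - self.sent[0] >= 60:
            self.sent.popleft()
        if len(self.sent) < self.rpm:
            return 0.0
        return 60 - (now - self.sent[0])

    def acquire(self) -> None:
        """Block until a request slot is free, then record the request."""
        while (delay := self.wait_time()) > 0:
            self.sleep(delay)
        self.sent.append(self.clock())


def build_request(prompt: str, model: str = "o1-mini") -> dict:
    """Build a plain request body: no streaming flag and no function/tool definitions."""
    return {"model": model, "messages": [{"role": "user", "content": prompt}]}


limiter = RpmLimiter(rpm=20)
payload = build_request("Prove that the square root of 2 is irrational.")
```

In use, you would call `limiter.acquire()` immediately before sending each payload with whatever client you prefer. A sliding window is used rather than a fixed one-minute bucket so that a burst at the end of one minute and the start of the next cannot exceed the limit.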

What’s Next?

This is just the beginning for the o1 series. OpenAI plans to:

- Add browsing capabilities and file/image uploads, making the models more versatile.

- Continue to improve both the o1 series and the GPT series of models, ensuring that users have access to AI systems that meet a variety of needs—from broad world knowledge to specialized reasoning tasks.

Conclusion

The OpenAI o1 model series represents a new era of AI capable of solving highly complex problems in areas like science, coding, and math. With o1-preview, users have access to one of the most advanced reasoning models available, while o1-mini offers a faster, more cost-effective solution for simpler but still challenging tasks.

Whether you’re a researcher, developer, or student, the o1 series opens up new possibilities for solving problems that were once out of reach for AI. And with ongoing improvements, the future of AI reasoning looks brighter than ever.
