In this blog, we’ll walk through the process of building a Mini Research Assistant using Flask and the Perplexity API. The app will allow users to generate research insights based on their queries, choose between different Sonar models, and enjoy robust error handling. By the end of this guide, you will have a fully functional web application to assist with generating relevant research content and evaluating its quality using perplexity scores.

Let’s dive in!

Why Build a Research Assistant with Flask and Perplexity API?

Creating a Mini Research Assistant using Flask and the Perplexity API is an excellent way to leverage the power of AI for research. Flask, a lightweight web framework in Python, enables rapid development of web applications. The Perplexity API, in turn, lets us integrate its Sonar language models to generate AI-driven research insights based on user queries.

With this combination, users can:

- Submit their research questions or topics.

- Select from multiple Sonar models.

- Receive AI-generated content, along with a perplexity score when the API response includes one, to help evaluate the quality of the response.

This post covers how to build this interactive research assistant and how to let users customize their experience by selecting different models.
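For readers new to the metric mentioned above, perplexity is conventionally defined as the exponential of the average negative log-probability a model assigns to each token, so lower values indicate a more confident model. The sketch below only illustrates that definition. The `perplexity` helper and the sample log-probabilities are hypothetical, and nothing here assumes the API exposes token log-probabilities.

```python
import math
from typing import Sequence


def perplexity(token_logprobs: Sequence[float]) -> float:
    """Return exp(mean negative log-probability) for a sequence of tokens."""
    if not token_logprobs:
        raise ValueError("need at least one token log-probability")
    mean_negative_logprob = -sum(token_logprobs) / len(token_logprobs)
    return math.exp(mean_negative_logprob)


# Hypothetical natural-log probabilities for two four-token answers.
confident = [math.log(0.9)] * 4    # the model was fairly sure of each token
uncertain = [math.log(0.25)] * 4   # the model was guessing among ~4 options

print(perplexity(confident))
print(perplexity(uncertain))
```

The second answer scores higher (worse) because each token was assigned a lower probability.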

Step 1: Setting Up Your Flask Environment

Let’s begin by setting up the Flask environment, which will host the backend of the Mini Research Assistant application. You’ll also need the requests library to interact with the Perplexity API.

1. Create a Virtual Environment:

python3 -m venv venv

source venv/bin/activate # On Windows: venv\Scripts\activate

2. Install Flask and Requests:

pip install Flask requests

3. Get Your Perplexity API Key:

Sign up for an account on Perplexity and obtain an API key. You will use this key to authenticate requests from your Flask app to the Perplexity API.

For a detailed guide on how to generate your Perplexity API key and make your first API call, check out our comprehensive tutorial: Perplexity API Tutorial: Key Generation & First API Call

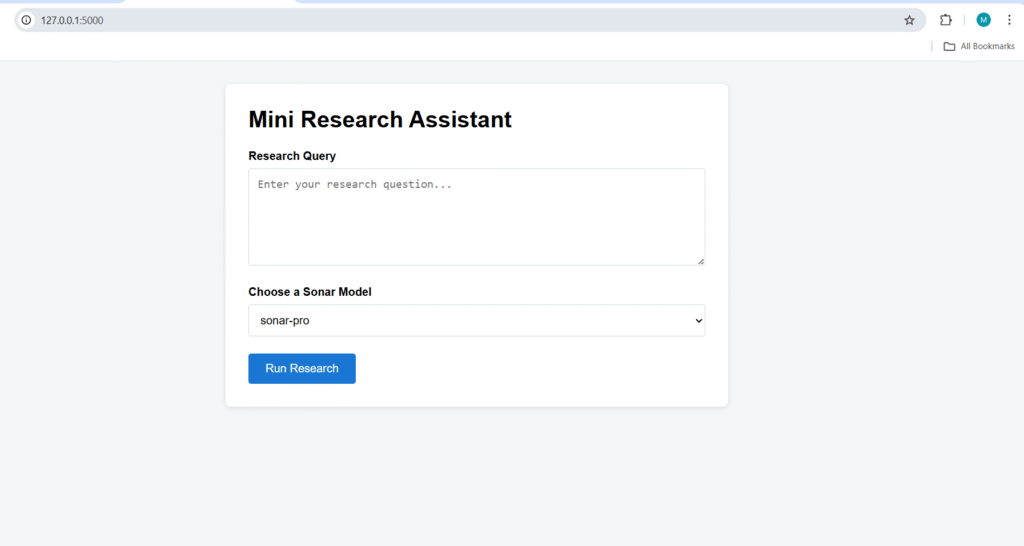

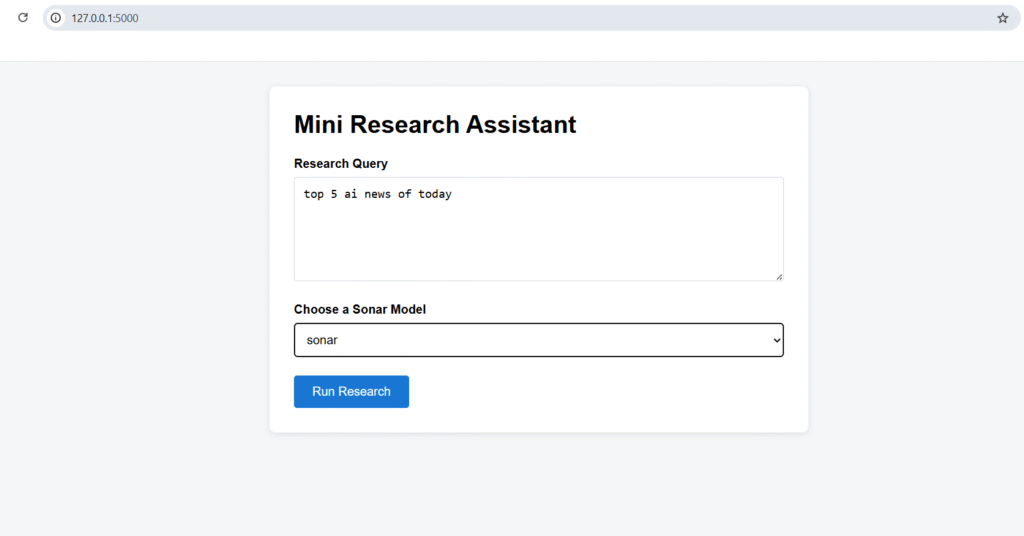
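Since the backend later reads the key from the `PERPLEXITY_API_KEY` environment variable, it can help to fail early with a clear message when the variable is missing. The following is a minimal sketch of that check. The helper names `load_api_key` and `auth_headers` are illustrative and not part of any library.

```python
import os
from typing import Dict, Mapping, Optional


def load_api_key(env: Optional[Mapping[str, str]] = None) -> str:
    """Return the API key from the environment, or raise a clear error."""
    source = os.environ if env is None else env
    key = (source.get("PERPLEXITY_API_KEY") or "").strip()
    if not key:
        raise RuntimeError(
            "PERPLEXITY_API_KEY is not set. Export it before starting Flask."
        )
    return key


def auth_headers(api_key: str) -> Dict[str, str]:
    """Build the bearer-token headers used for every API request."""
    return {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }
```

Passing a plain dictionary as `env` makes the check easy to exercise without touching the real environment.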

Step 2: Allow Users to Choose Between Different Sonar Models

In our Mini Research Assistant, we want to let users choose between multiple Sonar models available through the Perplexity API. This guide uses the following models:

- sonar-pro

- sonar

- sonar-deep-research

- sonar-reasoning-pro

- sonar-reasoning

Let’s allow users to select one of these models to generate research insights tailored to their needs.
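Because the model name arrives from a browser form, the server should accept it only if it matches one of the names listed above. Here is a small sketch of that check. `validate_model` is a hypothetical helper written for illustration, and it rejects unknown names rather than silently substituting a default.

```python
from typing import Optional, Sequence

AVAILABLE_MODELS = (
    "sonar-pro",
    "sonar",
    "sonar-deep-research",
    "sonar-reasoning-pro",
    "sonar-reasoning",
)


def validate_model(
    requested: Optional[str],
    allowed: Sequence[str] = AVAILABLE_MODELS,
) -> Optional[str]:
    """Return the trimmed model name if it is allowed, otherwise None."""
    candidate = (requested or "").strip()
    return candidate if candidate in allowed else None


print(validate_model(" sonar-pro "))
print(validate_model("not-a-model"))
```

Returning `None` for anything outside the list lets the route show a validation message instead of forwarding an arbitrary string to the API.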

1. Frontend (HTML) (index.html)

Here’s the updated HTML dropdown for model selection, where users can choose between the different Sonar models:

<!DOCTYPE html>

<html lang="en">

<head>

<meta charset="UTF-8" />

<meta name="viewport" content="width=device-width, initial-scale=1.0" />

<title>Mini Research Assistant</title>

<!-- Basic styling keeps the interface clear without external dependencies. -->

<style>

body {

font-family: Arial, sans-serif;

margin: 0;

padding: 2rem;

background-color: #f4f6f8;

}

main {

max-width: 640px;

margin: 0 auto;

background-color: #fff;

border-radius: 8px;

padding: 2rem;

box-shadow: 0 2px 8px rgba(0, 0, 0, 0.1);

}

h1 {

margin-top: 0;

}

label {

display: block;

font-weight: bold;

margin-top: 1.5rem;

margin-bottom: 0.5rem;

}

textarea,

select {

width: 100%;

box-sizing: border-box;

padding: 0.75rem;

font-size: 1rem;

border: 1px solid #cfd8dc;

border-radius: 4px;

}

button {

margin-top: 1.5rem;

padding: 0.75rem 1.5rem;

font-size: 1rem;

background-color: #1976d2;

color: #fff;

border: none;

border-radius: 4px;

cursor: pointer;

}

button:hover {

background-color: #0d47a1;

}

</style>

</head>

<body>

<main>

<h1>Mini Research Assistant</h1>

<!-- The form posts the research query and selected model back to the Flask server. -->

<form action="{{ url_for('research') }}" method="post">

<label for="query">Research Query</label>

<textarea

id="query"

name="query"

rows="6"

placeholder="Enter your research question..."

required

></textarea>

<label for="model">Choose a Sonar Model</label>

<select id="model" name="model" required>

<!-- Populate the dropdown with the models supplied by the Flask route. -->

{% for model in models %}

<option value="{{ model }}">{{ model }}</option>

{% endfor %}

</select>

<button type="submit">Run Research</button>

</form>

</main>

</body>

</html>

2. Flask Backend

Now, we need to ensure that the Flask backend handles the selected model and passes it to the Perplexity API for content generation.

Here’s the updated Flask backend code (app.py):

import os
from typing import Any, Dict, Optional

import requests
from flask import Flask, render_template, request

# Initialize the Flask application so it can serve the form and results pages.
app = Flask(__name__)

# Models that the user can pick from the UI when submitting a research query.
AVAILABLE_MODELS = [
    "sonar-pro",
    "sonar",
    "sonar-deep-research",
    "sonar-reasoning-pro",
    "sonar-reasoning",
]

# Base configuration for talking to the Perplexity API.
# PERPLEXITY_ENDPOINT = "https://api.perplexity.ai/v1/query"
PERPLEXITY_ENDPOINT = "https://api.perplexity.ai/chat/completions"

def extract_generated_text(payload: Dict[str, Any]) -> Optional[str]:
    """Attempt to locate the generated text inside the Perplexity API payload."""
    if not isinstance(payload, dict):
        return None

    # Common schema where the response contains a top-level text field.
    text = payload.get("text")
    if isinstance(text, str) and text.strip():
        return text.strip()

    # Perplexity query endpoint often returns "final_output" for the main answer.
    final_output = payload.get("final_output")
    if isinstance(final_output, str) and final_output.strip():
        return final_output.strip()

    # Handle chat-completions-style responses with choices and message content.
    choices = payload.get("choices")
    if isinstance(choices, list):
        for choice in choices:
            if not isinstance(choice, dict):
                continue
            message = choice.get("message")
            if isinstance(message, dict):
                content = message.get("content")
                if isinstance(content, str) and content.strip():
                    return content.strip()
            choice_text = choice.get("text")
            if isinstance(choice_text, str) and choice_text.strip():
                return choice_text.strip()

    # Some variants return an "output" field that can be either string or list.
    output = payload.get("output")
    if isinstance(output, str) and output.strip():
        return output.strip()
    if isinstance(output, list):
        text_chunks = [chunk.strip() for chunk in output if isinstance(chunk, str) and chunk.strip()]
        if text_chunks:
            return "\n\n".join(text_chunks)

    return None

def extract_perplexity_score(payload: Dict[str, Any]) -> Optional[float]:
    """Attempt to locate the perplexity score inside the Perplexity API payload."""
    if not isinstance(payload, dict):
        return None

    # Direct perplexity score at the root of the payload.
    score = payload.get("perplexity_score")
    if isinstance(score, (int, float)):
        return float(score)

    # Some responses tuck the score under metrics or nested structures.
    metrics = payload.get("metrics")
    if isinstance(metrics, dict):
        metric_score = metrics.get("perplexity")
        if isinstance(metric_score, (int, float)):
            return float(metric_score)

    choices = payload.get("choices")
    if isinstance(choices, list):
        for choice in choices:
            if not isinstance(choice, dict):
                continue
            nested_score = choice.get("perplexity") or choice.get("perplexity_score")
            if isinstance(nested_score, (int, float)):
                return float(nested_score)

    return None

@app.route("/", methods=["GET"])
def index() -> str:
    """Render the homepage with the research form."""
    return render_template("index.html", models=AVAILABLE_MODELS)


@app.route("/research", methods=["POST"])
def research() -> str:
    """Process a research request by forwarding it to the Perplexity API."""
    # Capture the user's inputs and sanitize whitespace.
    query = (request.form.get("query") or "").strip()
    model = (request.form.get("model") or "").strip()

    # Prepare default values for the template rendering.
    generated_text: Optional[str] = None
    perplexity_score: Optional[float] = None
    error_message: Optional[str] = None

    if not query:
        error_message = "Please enter a research query."
    elif model not in AVAILABLE_MODELS:
        error_message = "Please select a valid Sonar model."

    # Only proceed when the form submission passes validation checks.
    if error_message is None:
        api_key = os.environ.get("PERPLEXITY_API_KEY")
        if not api_key:
            error_message = "The Perplexity API key is not configured. Set PERPLEXITY_API_KEY in the environment."
        else:
            headers = {
                "Authorization": f"Bearer {api_key}",
                "Content-Type": "application/json",
            }
            payload = {
                "model": model,
                "query": query,
                "messages": [
                    {"role": "system", "content": "You are a helpful AI research assistant."},
                    {"role": "user", "content": query},
                ],
            }

            try:
                response = requests.post(
                    PERPLEXITY_ENDPOINT,
                    json=payload,
                    headers=headers,
                    timeout=30,
                )
                response.raise_for_status()
                response_payload = response.json()
                generated_text = extract_generated_text(response_payload)
                perplexity_score = extract_perplexity_score(response_payload)

                # Provide a friendly message when the response structure is unexpected.
                if generated_text is None:
                    error_message = "The Perplexity API returned a response, but no generated text was found."
            except requests.exceptions.HTTPError:
                # When the API responds with an error status code, surface the message if available.
                try:
                    error_payload = response.json()
                    api_error = None
                    if isinstance(error_payload, dict):
                        api_error = error_payload.get("error")
                        if isinstance(api_error, dict):
                            api_error = (
                                api_error.get("message")
                                or api_error.get("code")
                                or api_error.get("type")
                                or api_error.get("detail")
                            )
                    if isinstance(api_error, str) and api_error.strip():
                        error_message = (
                            f"The Perplexity API returned an error ({response.status_code}): {api_error.strip()}"
                        )
                    else:
                        error_message = (
                            f"The Perplexity API returned an error ({response.status_code}). "
                            "See server logs for more details."
                        )
                    app.logger.error(
                        "Perplexity API error (%s): %s", response.status_code, response.text.strip()
                    )
                except ValueError:
                    # Fall back to the raw response text when the body is not JSON.
                    error_message = (
                        f"The Perplexity API returned an error ({response.status_code}): {response.text.strip()}"
                    )
                    app.logger.error(
                        "Perplexity API non-JSON error (%s): %s", response.status_code, response.text.strip()
                    )
            except requests.exceptions.Timeout:
                error_message = "The request to the Perplexity API timed out. Please try again."
                app.logger.error("Perplexity API request timed out for model '%s'", model)
            except requests.exceptions.RequestException as exc:
                error_message = f"An error occurred while contacting the Perplexity API: {exc}"
                app.logger.exception("Perplexity API request exception: %s", exc)
            except ValueError:
                # This block catches plain ValueError from response.json() decoding.
                # Newer requests releases raise a RequestException subclass for bad JSON,
                # which the handler above catches first.
                error_message = "Received an unexpected response format from the Perplexity API."
                app.logger.exception("Failed to decode Perplexity API response as JSON.")

    return render_template(
        "results.html",
        query=query,
        generated_text=generated_text,
        perplexity_score=perplexity_score,
        error_message=error_message,
    )

if __name__ == "__main__":
    # Enable debug mode for local development, making it easier to inspect issues.
    app.run(debug=True)

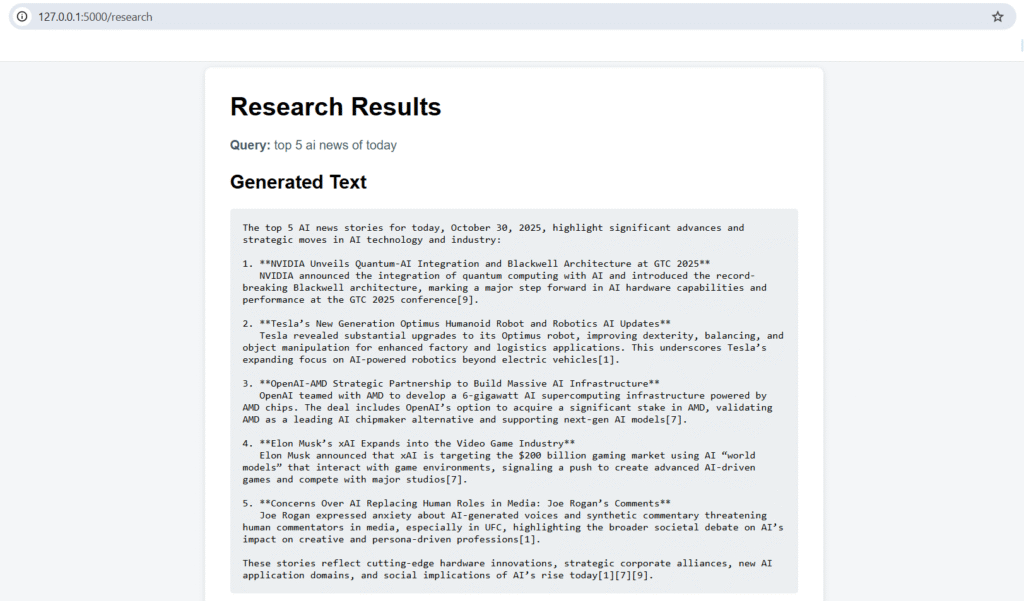

3. Results Page (results.html)

<!DOCTYPE html>

<html lang="en">

<head>

<meta charset="UTF-8" />

<meta name="viewport" content="width=device-width, initial-scale=1.0" />

<title>Research Results</title>

<!-- Mirror the styling from the form page for a consistent experience. -->

<style>

body {

font-family: Arial, sans-serif;

margin: 0;

padding: 2rem;

background-color: #f4f6f8;

}

main {

max-width: 720px;

margin: 0 auto;

background-color: #fff;

border-radius: 8px;

padding: 2rem;

box-shadow: 0 2px 8px rgba(0, 0, 0, 0.1);

}

h1 {

margin-top: 0;

}

.meta {

margin-bottom: 1.5rem;

color: #455a64;

}

.error {

padding: 1rem;

background-color: #ffebee;

border: 1px solid #ef5350;

color: #c62828;

border-radius: 4px;

margin-bottom: 1.5rem;

}

pre {

background-color: #eceff1;

padding: 1rem;

border-radius: 4px;

white-space: pre-wrap;

word-wrap: break-word;

}

a {

display: inline-block;

margin-top: 1.5rem;

color: #1976d2;

text-decoration: none;

}

a:hover {

text-decoration: underline;

}

</style>

</head>

<body>

<main>

<h1>Research Results</h1>

<!-- Present the query back to the user so the context remains visible. -->

<p class="meta"><strong>Query:</strong> {{ query or "Unknown query" }}</p>

{% if error_message %}

<!-- Show errors prominently so the user knows the call did not succeed. -->

<div class="error">{{ error_message }}</div>

{% endif %}

{% if generated_text %}

<!-- Display the generated output from the Perplexity API. -->

<section>

<h2>Generated Text</h2>

<pre>{{ generated_text }}</pre>

</section>

{% endif %}

{% if perplexity_score is not none %}

<!-- Provide the perplexity score when available to convey confidence. -->

<p class="meta"><strong>Perplexity Score:</strong> {{ "%.3f"|format(perplexity_score) }}</p>

{% endif %}

<a href="{{ url_for('index') }}">← Back to Research Form</a>

</main>

</body>

</html>

Explanation of Changes:

- Model Selection:

In the HTML form, users can choose from five Sonar models: sonar-pro, sonar, sonar-deep-research, sonar-reasoning-pro, and sonar-reasoning. The selected model is captured via request.form['model'].

- API Request:

The selected model is then sent along with the user’s query to the Perplexity API, which generates the research insights. The API response is processed and displayed to the user.
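To make the request and response shapes concrete, the sketch below builds the JSON body sent to the chat completions endpoint and pulls the answer out of a response in the choices/message/content shape that app.py checks for. It makes no network call. `build_payload`, `first_answer`, and the hand-written `sample_response` are illustrative and are not captured from the real API.

```python
from typing import Any, Dict, Optional


def build_payload(query: str, model: str) -> Dict[str, Any]:
    """Assemble the request body: the chosen model plus a two-message chat."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": "You are a helpful AI research assistant."},
            {"role": "user", "content": query},
        ],
    }


def first_answer(response: Dict[str, Any]) -> Optional[str]:
    """Return the first non-empty message content found under "choices"."""
    choices = response.get("choices")
    if not isinstance(choices, list):
        return None
    for choice in choices:
        message = choice.get("message") if isinstance(choice, dict) else None
        content = message.get("content") if isinstance(message, dict) else None
        if isinstance(content, str) and content.strip():
            return content.strip()
    return None


# Hand-written sample for illustration only.
sample_response = {
    "choices": [
        {"message": {"role": "assistant", "content": " Flask is a micro web framework. "}}
    ]
}

print(build_payload("What is Flask?", "sonar")["messages"][-1])
print(first_answer(sample_response))
```

Keeping payload construction and response parsing in small pure functions like these makes them easy to test without an API key.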

Step 3: Adding Robust Error Handling

In real-world applications, it’s important to handle errors gracefully to enhance the user experience. This includes handling network failures, invalid queries, and issues with the API.

1. Enhance the get_research_results Function

Let’s add error handling to ensure that any issues with the Perplexity API or network requests are caught and displayed to the user.

def get_research_results(query, model):
    """Call the Perplexity API and return the generated text or an error message."""
    # Reuse the environment variable, endpoint, and extract helpers defined in app.py above.
    headers = {
        "Authorization": f"Bearer {os.environ.get('PERPLEXITY_API_KEY', '')}",
        "Content-Type": "application/json"
    }
    data = {
        "model": model,
        "messages": [{"role": "user", "content": query}]
    }

    try:
        response = requests.post(PERPLEXITY_ENDPOINT, json=data, headers=headers, timeout=30)

        # Check for API failure
        if response.status_code != 200:
            return {"error": f"Failed to fetch data from Perplexity API. Status code: {response.status_code}"}

        # Check for missing or empty response data
        response_data = response.json()
        text = extract_generated_text(response_data)
        if not text:
            return {"error": "No content generated. The query might be invalid or too broad."}

        return {"text": text, "perplexity": extract_perplexity_score(response_data)}

    except (requests.exceptions.RequestException, ValueError) as e:
        # Handle network errors, undecodable JSON, or any other issue with the request
        return {"error": f"An error occurred while making the request: {str(e)}"}

2. Handle Errors in the Flask Route

We’ll update the Flask route to handle any errors returned by the get_research_results function and display them appropriately.

@app.route('/research', methods=['POST'])
def research():
    query = request.form.get('query', '').strip()
    model = request.form.get('model', '').strip()  # Get the selected model from the form

    # Get research results from Perplexity API
    results = get_research_results(query, model)

    if 'error' in results:
        # If there was an error, pass it to the error banner in results.html
        generated_text = None
        perplexity_score = None
        error_message = results['error']
    else:
        # If no error, display the generated content and, when present, the perplexity score
        generated_text = results.get("text", "No content generated.")
        # Use None rather than "N/A": results.html formats the score with "%.3f",
        # which fails on a string, and hides the line when the value is None.
        perplexity_score = results.get("perplexity")
        error_message = None

    return render_template('results.html', query=query, generated_text=generated_text,
                           perplexity_score=perplexity_score, error_message=error_message)

This ensures that if there is any failure, such as an invalid model selection, network issue, or no content generated, the user will see a friendly error message instead of the application crashing.
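One way to keep those messages friendly is to turn an error status and response body into a single sentence in one place. The sketch below assumes, as app.py does, that error bodies may carry an "error" field that is either a string or an object with a "message". `describe_api_error` is a hypothetical helper, and the sample bodies in the usage lines are made up for illustration.

```python
import json
from typing import Optional


def describe_api_error(status_code: int, body: str) -> str:
    """Build a user-facing message from an error status and raw response body."""
    detail: Optional[str] = None
    try:
        parsed = json.loads(body)
    except ValueError:
        parsed = None  # the body was not JSON (for example, an HTML error page)

    if isinstance(parsed, dict):
        error = parsed.get("error")
        if isinstance(error, dict):
            error = error.get("message") or error.get("code") or error.get("type")
        if isinstance(error, str) and error.strip():
            detail = error.strip()

    if detail:
        return f"The Perplexity API returned an error ({status_code}): {detail}"
    return (
        f"The Perplexity API returned an error ({status_code}). "
        "See server logs for more details."
    )


print(describe_api_error(401, '{"error": {"message": "Invalid API key"}}'))
print(describe_api_error(502, "<html>Bad Gateway</html>"))
```

Raw non-JSON bodies are deliberately left out of the user-facing text here and are better written to the server log.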

Step 4: Running the Application

You can now run the Mini Research Assistant web application. To start the app, use the following command:

flask run

Once the application is running, you can access it locally at http://127.0.0.1:5000/ and begin using it to generate research insights.

Research Insights Generated with Sonar Models: A Snapshot

Here’s a screenshot of the Mini Research Assistant in action, where users can input their queries and select from different Sonar models. The results, including AI-generated research content and the perplexity score, are displayed on the results page. This showcases how the Mini Research Assistant with Flask and Perplexity API helps streamline research by providing relevant insights tailored to your selected model.

Conclusion: Building a Powerful Research Assistant with Flask and Perplexity API

In this guide, we’ve developed a Mini Research Assistant using Flask and the Perplexity API. This powerful web application allows users to:

- Select from a variety of Sonar models (e.g., sonar-pro, sonar, sonar-deep-research, sonar-reasoning-pro, and sonar-reasoning) for tailored research insights.

- Generate AI-driven research content based on user queries, helping to streamline the research process.

- Evaluate the quality of generated content with perplexity scores, offering deeper insights into the reliability of the results.

- Handle errors gracefully, providing a seamless experience even when issues arise.

This AI-powered research assistant can be expanded with new features, including saving research queries, adding more advanced models, and enhancing the user interface for a better experience. Feel free to build upon this project to create an even more robust tool for automated research assistance!
