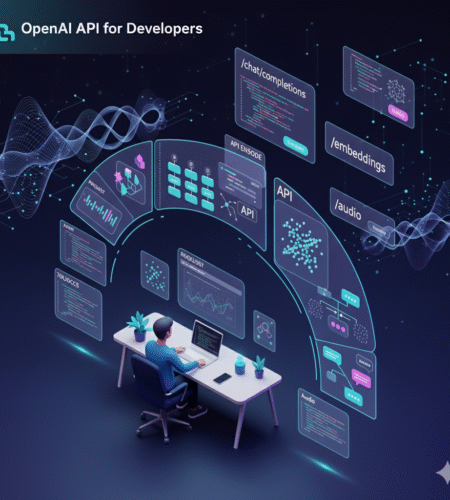

The OpenAI API is transforming how software developers build applications. From natural language understanding and chatbots to semantic search, image generation, and speech processing, OpenAI provides a set of powerful APIs that make AI integration accessible.

This guide is designed as a complete reference for developers — covering everything from setup and key features to advanced use cases, pricing, security, and best practices.

By the end, you’ll know how to build and scale AI-powered applications using the OpenAI API with confidence.

Table of Contents

- What is the OpenAI API?

- How the OpenAI API Works (Architecture Overview)

- Getting Started: Setup & First Call

- OpenAI API Features

- Chat & Text Generation (GPT models)

- Embeddings & Semantic Search

- Image Generation & Editing (DALL·E)

- Audio APIs (Whisper & Text-to-Speech)

- Fine-tuning Models

- Pricing & Tokenization Explained

- Best Practices for Developers

- Advanced Development Patterns

- Example Projects with OpenAI API

- Security & Compliance Considerations

- Alternatives & When to Use Them

- Future of the OpenAI API

- Conclusion & Next Steps

1. What is the OpenAI API?

The OpenAI API is a cloud-based service that lets developers integrate advanced AI models into their applications with simple API calls.

It provides access to:

- GPT models → for text and chat generation.

- Embeddings → for semantic search, recommendations, classification.

- DALL·E → for generating and editing images.

- Whisper → for speech-to-text transcription and translation.

- Text-to-Speech (TTS) → for generating lifelike voices.

In short: if your app needs natural language, images, or audio, the OpenAI API makes it possible.

2. How the OpenAI API Works (Architecture Overview)

At a high level:

- Your app sends a request (JSON payload) to the OpenAI API endpoint.

- The payload contains your API key, model name, and inputs (prompt, messages, or file).

- The OpenAI API processes your request with the chosen model.

- You receive a structured response (text, JSON, embedding vector, or media).

Common API endpoints:

/chat/completions→ conversational AI./embeddings→ semantic vectors./images→ generate/edit images./audio/transcriptions→ convert audio to text./audio/speech→ convert text to audio.

3. Getting Started: Setup & First Call

Step 1. Get an API Key

- Create an account at platform.openai.com.

- Generate an API key under View API Keys.

- Store securely:

export OPENAI_API_KEY="your_api_key_here"

Step 2. Install SDKs

Python:

pip install openai

Node.js:

npm install openai

Step 3. Make Your First Chat Completion

Python:

from openai import OpenAI

client = OpenAI()

response = client.chat.completions.create(

model="gpt-4o-mini",

messages=[

{"role": "system", "content": "You are a helpful coding assistant."},

{"role": "user", "content": "Write a Python function for Fibonacci."}

]

)

print(response.choices[0].message.content)

4. OpenAI API Features

A. Chat & Text Generation (GPT Models)

- Models: GPT-4o, GPT-4o mini, GPT-3.5 turbo.

- Use Cases: Chatbots, summarization, Q&A, content generation, coding tools.

- Key Features:

- Role-based messaging (system, user, assistant).

- Function calling (invoke external tools/APIs).

- JSON mode (structured outputs).

- Temperature/top_p settings for control.

Example (structured JSON output):

response = client.chat.completions.create(

model="gpt-4o-mini",

messages=[{"role": "user", "content": "Give me a todo in JSON"}],

response_format={"type": "json_object"}

)

B. Embeddings & Semantic Search

- Convert text into vector embeddings.

- Enables semantic search, clustering, classification, and RAG (Retrieval-Augmented Generation).

Example:

embedding = client.embeddings.create(

model="text-embedding-3-small",

input="OpenAI API guide"

)

vector = embedding.data[0].embedding

C. Image Generation & Editing (DALL·E)

- Generate: Create images from text prompts.

- Edit: Apply masks to modify existing images.

- Use Cases: UI prototypes, marketing visuals, design automation.

Example:

image = client.images.generate(

model="gpt-image-1",

prompt="A futuristic city skyline at sunset"

)

D. Audio APIs (Whisper & TTS)

- Whisper: Transcribe audio to text.

- TTS: Convert text to natural-sounding speech.

Example (Whisper transcription):

audio_file= open("speech.mp3", "rb")

transcript = client.audio.transcriptions.create(

model="whisper-1",

file=audio_file

)

E. Fine-Tuning Models

- Fine-tune GPT-3.5 with your dataset.

- Useful for domain-specific knowledge (legal, medical, support).

- Steps:

- Collect & clean training data.

- Upload to OpenAI.

- Fine-tune via CLI.

5. Pricing & Tokenization Explained

The OpenAI API is billed per token.

- 1 token ≈ 4 characters in English text.

- Both input (prompt) + output (completion) are counted.

Tools:

tiktoken→ calculate tokens.- Strategy:

- Use cheaper models (

gpt-4o-mini) where possible. - Cache common responses.

- Keep prompts concise.

- Use cheaper models (

6. Best Practices for Developers

- Secure your API key → use env vars or secret managers.

- Handle rate limits → implement retries + exponential backoff.

- Use system prompts wisely → guide behavior of GPT.

- Monitor usage → OpenAI dashboard & logging.

- Cache embeddings & responses → reduce cost & latency.

7. Advanced Development Patterns

- Function Calling → connect GPT with external APIs/tools.

- RAG (Retrieval-Augmented Generation) → combine GPT with vector search.

- Agent Frameworks → LangChain, LlamaIndex, Semantic Kernel.

- Multimodal Apps → text + audio + image pipelines.

8. Example Projects with OpenAI API

- Customer support chatbot with live FAQ retrieval.

- Semantic search for developer docs.

- AI-powered Slack bot for team productivity.

- Automated code reviewer with GPT-4o.

- AI-generated blog images via DALL·E.

9. Security & Compliance Considerations

- Never expose API keys in frontend.

- Use role-based access in production.

- Sanitize user input (prevent prompt injection).

- Follow GDPR/PII handling if processing sensitive data.

10. Alternatives & When to Use Them

- Anthropic Claude API → long context, safe outputs.

- Cohere → embeddings, fast LLMs.

- Google Gemini API → strong multimodal capabilities.

OpenAI remains most versatile across text, images, and audio.

11. Future of the OpenAI API

Expect:

- Real-time multimodal interactions (text + audio + video).

- More fine-tuning options for custom apps.

- Cheaper, faster models for production.

- Tighter integrations with dev frameworks.

12. Conclusion

The OpenAI API is the single most powerful API suite for developers today. It allows you to:

- Build chatbots, search engines, and AI assistants.

- Generate and edit images.

- Process speech-to-text and text-to-speech.

- Scale with fine-tuned models.

Whether you’re prototyping or scaling to millions of users, the OpenAI API gives you the flexibility and tools you need.

Comments