Understanding the Token Economy in Modern AI Applications

If you’re building applications that interact with Large Language Models (LLMs), you’ve probably noticed something important: every character counts. When you send data to AI models like Claude, GPT, or Gemini, you’re charged by tokens—and those costs add up quickly. This is where TOON (Token-Oriented Object Notation) comes into play, offering a smarter way to format your data for AI interactions.

What is JSON?

JSON (JavaScript Object Notation) has been the standard for data exchange since the early 2000s. It’s human-readable, universally supported, and perfect for APIs and web applications. However, JSON wasn’t designed with token efficiency in mind. Every brace, bracket, comma, and repeated key consumes tokens—and in the world of LLMs, tokens mean money.

Here’s a typical JSON example:

{
  "users": [
    { "id": 1, "name": "Alice", "role": "admin", "salary": 75000 },
    { "id": 2, "name": "Bob", "role": "user", "salary": 65000 },
    { "id": 3, "name": "Charlie", "role": "user", "salary": 70000 }
  ]
}

This small snippet uses 257 tokens. For a single request, it might not seem like much. But when you’re making hundreds or thousands of API calls daily, this verbosity becomes expensive.

What is TOON?

TOON (Token-Oriented Object Notation) is a compact, human-readable serialization format specifically designed for LLM interactions. It combines the best features of YAML’s indentation-based structure with CSV’s tabular efficiency, while eliminating redundant punctuation found in JSON.

The same data in TOON format:

users[3]{id,name,role,salary}:
  1,Alice,admin,75000
  2,Bob,user,65000
  3,Charlie,user,70000

This uses only 166 tokens—a 35% reduction. For larger datasets, the savings can reach 30-60%, translating to significant cost reductions over time.
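To make the tabular layout concrete, here is a minimal Python sketch of how a uniform array of objects maps onto a TOON header and rows. It is not the toon-format library: `encode_tabular` and `format_value` are hypothetical helpers written for this illustration, and they assume every row has the same keys and that no value needs quoting. Rows are indented by two spaces, following TOON's indentation rule.

```python
# Minimal sketch of TOON's tabular form for a uniform array of objects.
# Assumptions: every row has the same keys in the same order, all values are
# primitives, and no value needs quoting. The real encoder handles much more.

def format_value(value):
    """Render a primitive the way the TOON examples in this article show it."""
    if value is None:
        return "null"
    if isinstance(value, bool):  # check bool before int: bool subclasses int
        return "true" if value else "false"
    return str(value)


def encode_tabular(key, rows, indent="  "):
    """Encode a list of flat dicts as a TOON tabular array block."""
    fields = list(rows[0].keys())
    # Header: key, declared length, and the field names, declared once
    header = f"{key}[{len(rows)}]{{{','.join(fields)}}}:"
    lines = [header]
    for row in rows:
        # Each row lists values in header order, separated by commas
        lines.append(indent + ",".join(format_value(row[f]) for f in fields))
    return "\n".join(lines)


users = [
    {"id": 1, "name": "Alice", "role": "admin", "salary": 75000},
    {"id": 2, "name": "Bob", "role": "user", "salary": 65000},
    {"id": 3, "name": "Charlie", "role": "user", "salary": 70000},
]

print(encode_tabular("users", users))
```

Note how the keys appear once in the header instead of once per object; that repetition is exactly what JSON pays for on every row.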

JSON vs TOON: Key Differences Explained

1. Syntax and Structure

JSON uses verbose syntax with:

- Curly braces {} for objects

- Square brackets [] for arrays

- Repeated keys for every object in an array

- Quotes around all keys and string values

TOON uses minimal syntax:

- Indentation for nested objects (like YAML)

- Array declarations with length: users[3]

- Field headers declared once: {id,name,role,salary}

- Minimal quoting (only when necessary)

2. Token Efficiency

The token savings become dramatic with larger datasets. Consider 100 GitHub repositories with 8-10 fields each:

- JSON: ~15,145 tokens

- TOON: ~8,745 tokens

- Savings: 42.3% (6,400 tokens)

At an illustrative price of $0.03 per 1K tokens, this saves approximately $0.19 per request. For an application making 1,000 such calls a month, that’s roughly $190 in savings, just from one type of request.
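The arithmetic behind these figures is easy to rerun with your own numbers. This short Python sketch uses the GitHub-repositories token counts above and the illustrative $0.03 per 1K token price; swap in your provider's actual rate and your real call volume.

```python
# Illustrative inputs: token counts from the GitHub repositories example,
# plus the article's example price. Replace both with your own numbers.
json_tokens = 15_145
toon_tokens = 8_745
price_per_1k = 0.03        # USD per 1,000 tokens (illustrative, not a real quote)
calls_per_month = 1_000

tokens_saved = json_tokens - toon_tokens              # 6,400 tokens
savings_pct = tokens_saved / json_tokens * 100        # about 42.3%
saved_per_call = tokens_saved / 1_000 * price_per_1k  # about $0.19
saved_per_month = saved_per_call * calls_per_month    # about $192

print(f"Tokens saved per call: {tokens_saved:,}")
print(f"Reduction: {savings_pct:.1f}%")
print(f"Saved per call: ${saved_per_call:.3f}")
print(f"Saved per month: ${saved_per_month:.2f}")
```

With these inputs the monthly figure comes to about $192, which the estimate above rounds to $190.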

3. Readability

JSON is familiar to most developers:

{
  "user": {
    "id": 123,
    "name": "Ada",
    "created": "2025-01-15T10:30:00Z"
  }
}

TOON is clean and intuitive:

user:
  id: 123
  name: Ada
  created: "2025-01-15T10:30:00Z"

Both formats are human-readable, but TOON removes visual clutter, making it easier to scan large datasets.

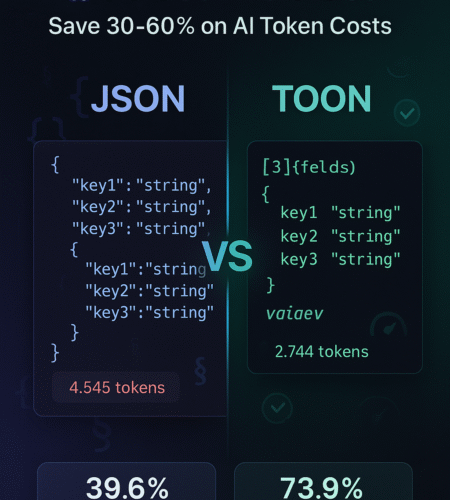

4. LLM Comprehension

According to the benchmarks detailed in the Real-World Performance Benchmarks section below, TOON doesn’t just save tokens; it also scored higher on LLM accuracy. Tests across four different AI models showed:

- TOON accuracy: 73.9%

- JSON accuracy: 69.7%

- Token usage: TOON uses 39.6% fewer tokens than JSON

The explicit structure (array lengths and field headers) helps LLMs validate and parse data more reliably.
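Here is a rough Python illustration of why the declared length and field header help: a reader can check a tabular block against its own header and catch truncated rows or missing columns. `parse_tabular` is a hypothetical helper, not part of any TOON library; it assumes comma delimiters and unquoted values, and it leaves every value as a string.

```python
import re

# Matches a tabular header such as "users[3]{id,name,role,salary}:".
# Simplified: assumes comma-separated field names and no quoted names.
HEADER = re.compile(r"^(\w+)\[(\d+)\]\{([^}]*)\}:$")


def parse_tabular(text):
    """Parse one TOON tabular block, validating rows against its header."""
    lines = text.strip("\n").split("\n")
    match = HEADER.match(lines[0])
    if match is None:
        raise ValueError(f"not a tabular header: {lines[0]!r}")
    key = match.group(1)
    declared = int(match.group(2))
    fields = match.group(3).split(",")
    rows = [line.strip() for line in lines[1:] if line.strip()]

    # The declared length exposes truncated or padded output.
    if len(rows) != declared:
        raise ValueError(f"{key}: header declares {declared} rows, found {len(rows)}")

    records = []
    for n, row in enumerate(rows, start=1):
        values = row.split(",")  # values stay strings; no quoting support
        # The field header exposes rows with missing or extra columns.
        if len(values) != len(fields):
            raise ValueError(f"{key} row {n}: expected {len(fields)} values, got {len(values)}")
        records.append(dict(zip(fields, values)))
    return key, records


# A response that claims three rows but only delivers two
truncated = "users[3]{id,name,role}:\n  1,Alice,admin\n  2,Bob,user"
try:
    parse_tabular(truncated)
except ValueError as err:
    print(err)  # users: header declares 3 rows, found 2
```

The same check is much harder with plain JSON, where a truncated array of objects still looks like a valid (shorter) array.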

JSON vs TOON Examples: Side-by-Side Comparison

Example 1: Simple Object

JSON:

{
  "id": 123,
  "name": "Ada",
  "active": true
}

TOON:

id: 123
name: Ada
active: true

Example 2: Nested Object

JSON:

{
  "user": {
    "id": 123,
    "profile": {
      "name": "Ada",
      "email": "ada@example.com"
    }
  }
}

TOON:

user:
  id: 123
  profile:
    name: Ada
    email: ada@example.com

Example 3: Array of Objects (The Sweet Spot)

JSON:

{
  "repositories": [
    {
      "id": 28457823,
      "name": "freeCodeCamp",
      "stars": 430886,
      "forks": 42146
    },
    {
      "id": 132750724,
      "name": "build-your-own-x",
      "stars": 250000,
      "forks": 15000
    }
  ]
}

TOON:

repositories[2]{id,name,stars,forks}:
  28457823,freeCodeCamp,430886,42146
  132750724,build-your-own-x,250000,15000

This is where TOON shines—uniform arrays of objects with identical fields. The more rows you have, the greater the savings.

JSON vs TOON in Python: Practical Implementation

Let’s see how to work with both formats in Python.

Working with JSON in Python

import json

# JSON data
data = {
    "users": [
        {"id": 1, "name": "Alice", "role": "admin"},
        {"id": 2, "name": "Bob", "role": "user"}
    ]
}

# Encode to JSON string
json_string = json.dumps(data, indent=2)
print(json_string)

# Decode from JSON string
decoded_data = json.loads(json_string)

Working with TOON in Python

First, install the TOON package:

pip install toon-format

Then use it in your code:

from toon_format import encode, decode

# Python dictionary
data = {
    "users": [
        {"id": 1, "name": "Alice", "role": "admin"},
        {"id": 2, "name": "Bob", "role": "user"}
    ]
}

# Encode to TOON string
toon_string = encode(data)
print(toon_string)
# Output:
# users[2]{id,name,role}:
#   1,Alice,admin
#   2,Bob,user

# Decode from TOON string
decoded_data = decode(toon_string)
print(decoded_data)  # Back to Python dictionary

Hybrid Approach: Best of Both Worlds

The recommended approach is to use JSON in your application code and convert to TOON only when sending data to LLMs:

import json
from toon_format import encode

# `data` is the dictionary from the previous example

# Store data as JSON (familiar, well-supported)
json_data = json.dumps(data)

# Convert to TOON when calling LLM APIs
toon_data = encode(data)

# Send toon_data to your LLM (llm_api is a placeholder for your LLM client)
response = llm_api.call(prompt=f"Analyze this data:\n{toon_data}")

When to Use JSON vs TOON

Use JSON When:

- Building REST APIs or web services

- Working with configuration files

- Storing data in databases

- Sharing data across different systems

- Working with deeply nested or non-uniform structures

- Your data has mixed types or varying field sets

Use TOON When:

- Sending data to LLM APIs (Claude, GPT, Gemini)

- Working with large, uniform datasets

- Processing tabular data for AI analysis

- You need to reduce token costs significantly

- Building AI agents or RAG systems

- Generating structured data with AI models

The TOON Sweet Spot

TOON excels with:

- Uniform arrays of objects: Same fields across all items, primitive values only

- Large datasets: The more rows, the greater the savings

- Repetitive structures: Analytics data, user records, transaction logs

TOON is less efficient for:

- Deeply nested structures: JSON may be more compact

- Non-uniform data: Objects with different field sets

- Small datasets: Minimal savings on tiny objects

- Pure tabular data: CSV is even more compact (but less structured)
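Whether an array lands in the sweet spot can be checked mechanically. The Python sketch below, built around a hypothetical `is_tabular_candidate` helper, tests the conditions listed above: a non-empty list of objects that share the same set of fields and contain only primitive values. Treating key order as irrelevant is an assumption of this sketch.

```python
# Primitive JSON-style values: strings, numbers, booleans, and null (None).
PRIMITIVES = (str, int, float, bool, type(None))


def is_tabular_candidate(items):
    """True if a list fits TOON's tabular form: a non-empty list of dicts
    sharing one set of fields, with primitive values only."""
    if not items or not all(isinstance(item, dict) for item in items):
        return False
    fields = set(items[0])
    return all(
        set(item) == fields
        and all(isinstance(value, PRIMITIVES) for value in item.values())
        for item in items
    )


print(is_tabular_candidate([{"id": 1, "name": "Alice"}, {"id": 2, "name": "Bob"}]))  # True
print(is_tabular_candidate([{"id": 1}, {"id": 2, "name": "Bob"}]))  # False: different fields
print(is_tabular_candidate([{"id": 1, "tags": ["x", "y"]}]))  # False: nested list value
```

A check like this is a reasonable gate for the hybrid approach: send TOON when the data qualifies, and plain JSON otherwise.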

TOON Format: Understanding the Syntax

Basic Rules

- Indentation: 2 spaces per level (like YAML)

- Objects: Key-value pairs with colons

- Arrays: Length declared in brackets: [N]

- Tabular arrays: Fields declared once: {field1,field2}

- Minimal quoting: Only when necessary
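A minimal Python sketch of the indentation and array-length rules for nested objects. `encode_nested` and its helpers are hypothetical, not the toon-format API; they handle dicts, primitives, and lists of primitives only, and skip quoting entirely.

```python
def format_primitive(value):
    """Render a primitive the way the examples in this article show it."""
    if value is None:
        return "null"
    if isinstance(value, bool):  # check bool first: bool is a subclass of int
        return "true" if value else "false"
    return str(value)


def encode_lines(obj, depth=0):
    """Return TOON lines for a dict, indenting 2 spaces per nesting level."""
    pad = "  " * depth
    lines = []
    for key, value in obj.items():
        if isinstance(value, dict):
            # Nested object: key on its own line, children one level deeper
            lines.append(f"{pad}{key}:")
            lines.extend(encode_lines(value, depth + 1))
        elif isinstance(value, list):
            # Array of primitives: length in brackets, values inline
            line = f"{pad}{key}[{len(value)}]:"
            if value:
                line += " " + ",".join(format_primitive(v) for v in value)
            lines.append(line)
        else:
            lines.append(f"{pad}{key}: {format_primitive(value)}")
    return lines


def encode_nested(obj):
    return "\n".join(encode_lines(obj))


example = {"user": {"id": 123, "profile": {"name": "Ada", "email": "ada@example.com"}}}
print(encode_nested(example))
```

Empty values fall out of the same rules: an empty list becomes `items[0]:` and an empty dict becomes a bare `config:` line.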

Quoting Rules

Strings need quotes only when they:

- Are empty: ""

- Have leading/trailing spaces: " padded "

- Contain delimiters, colons, or special characters: "hello, world"

- Look like booleans or numbers: "true", "42"

- Start with "- " (list-like): "- item"

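The quoting rules translate into a small predicate. In this Python sketch, `needs_quotes` is a hypothetical helper; the exact set of special characters and the inclusion of `null` alongside the booleans are assumptions here, and the official TOON specification remains the authority.

```python
import re

# Strings that would read as numbers, e.g. "42", "-3.5", "1e6"
NUMBER_LIKE = re.compile(r"^-?\d+(?:\.\d+)?(?:[eE][+-]?\d+)?$")
# Assumed set of special characters for this sketch (colon, quote,
# backslash, brackets, braces, control whitespace)
SPECIAL_CHARS = set(':"\\[]{}\n\r\t')


def needs_quotes(s, delimiter=","):
    """Return True if the string value must be quoted under the rules above."""
    if s == "":
        return True  # empty string
    if s != s.strip():
        return True  # leading or trailing whitespace
    if delimiter in s or any(c in SPECIAL_CHARS for c in s):
        return True  # active delimiter, colon, or other special character
    if s in ("true", "false", "null") or NUMBER_LIKE.match(s):
        return True  # would otherwise parse as a boolean, null, or number
    if s.startswith("- "):
        return True  # would otherwise look like a list item
    return False


for value in ["", " padded ", "hello, world", "true", "42", "- item", "Alice"]:
    print(repr(value), needs_quotes(value))
```

Because only the active delimiter triggers quoting, `needs_quotes("hello, world", delimiter="|")` returns False, which is one reason pipe delimiters help when values contain commas.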
Special Cases

Empty arrays and objects:

items[0]:
config:

Nested arrays:

pairs[2]:
  - [2]: 1,2
  - [2]: 3,4

Mixed arrays (non-uniform):

items[3]:
  - 1
  - a: 1
  - text

Real-World Performance Benchmarks

Comprehensive benchmarks across 209 questions on 4 LLM models show:

Overall Performance (Accuracy × Token Efficiency)

| Format | Score | Accuracy | Tokens |
|---|---|---|---|
| TOON | 26.9 | 73.9% | 2,744 |
| JSON compact | 22.9 | 70.7% | 3,081 |
| YAML | 18.6 | 69.0% | 3,719 |
| JSON | 15.3 | 69.7% | 4,545 |
| XML | 13.0 | 67.1% | 5,167 |
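The Score column appears to be accuracy divided by tokens, scaled to 1,000 tokens: the Python snippet below reproduces every row of the table that way, and also recovers the 39.6% token reduction quoted earlier. The formula is inferred from the table's own numbers, not taken from the benchmark's documentation.

```python
# (accuracy %, tokens) for each format, copied from the table
results = {
    "TOON": (73.9, 2_744),
    "JSON compact": (70.7, 3_081),
    "YAML": (69.0, 3_719),
    "JSON": (69.7, 4_545),
    "XML": (67.1, 5_167),
}

# Inferred score: accuracy points per 1,000 tokens
for fmt, (accuracy, tokens) in results.items():
    score = accuracy / tokens * 1_000
    print(f"{fmt:<13} {score:.1f}")

# TOON's token reduction relative to pretty-printed JSON
reduction = (1 - results["TOON"][1] / results["JSON"][1]) * 100
print(f"TOON vs JSON tokens: {reduction:.1f}% fewer")  # 39.6% fewer
```

Read this way, the score rewards efficiency at least as much as accuracy, which explains why compact JSON outranks pretty-printed JSON despite similar accuracy.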

Token Savings by Dataset Type

Analytics Data (60 rows, uniform):

- JSON: 22,250 tokens

- TOON: 9,120 tokens

- Savings: 59.0%

GitHub Repositories (100 rows, uniform):

- JSON: 15,145 tokens

- TOON: 8,745 tokens

- Savings: 42.3%

Employee Records (100 rows, uniform):

- JSON: 126,860 tokens

- TOON: 49,831 tokens

- Savings: 60.7%

Advanced TOON Features

Alternative Delimiters

TOON supports different delimiters for even better token efficiency:

Tab-separated (often most efficient):

toon_string = encode(data, delimiter='\t')

Pipe-separated (good middle ground):

toon_string = encode(data, delimiter='|')

Key Folding

Collapse nested single-key chains to save more tokens:

# Standard nesting
data = {
    "data": {
        "metadata": {
            "items": ["a", "b"]
        }
    }
}

# With key folding
toon_string = encode(data, key_folding='safe')
# Output: data.metadata.items[2]: a,b
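Conceptually, key folding walks down a chain of single-key objects and joins the keys with dots. This Python sketch (a hypothetical `fold_keys` helper) shows that idea; it does not attempt to reproduce whatever additional checks the library's key_folding='safe' mode performs.

```python
def fold_keys(obj):
    """Collapse chains of single-key objects into dotted keys.

    Simplified sketch: folds any dict that has exactly one key, and does not
    check for keys that already contain dots or for collisions.
    """
    folded = {}
    for key, value in obj.items():
        path = [key]
        # Walk down while the current value is an object with a single key
        while isinstance(value, dict) and len(value) == 1:
            child_key, value = next(iter(value.items()))
            path.append(child_key)
        if isinstance(value, dict):
            value = fold_keys(value)  # keep folding inside multi-key objects
        folded[".".join(path)] = value
    return folded


data = {"data": {"metadata": {"items": ["a", "b"]}}}
print(fold_keys(data))  # {'data.metadata.items': ['a', 'b']}
```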

Path Expansion

Reconstruct folded keys back to nested objects:

decoded = decode(toon_string, expand_paths='safe')

# Restores original nested structure
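Path expansion is the inverse operation: split each dotted key and rebuild the nested objects. Again, this is a hypothetical sketch (`expand_paths`), not the library's implementation; it treats every dot as a separator and does not handle conflicting paths.

```python
def expand_paths(obj):
    """Rebuild nested objects from dotted keys (the inverse of folding).

    Simplified sketch: every '.' is treated as a separator, and conflicting
    paths (for example "a": 1 alongside "a.b": 2) are not handled.
    """
    expanded = {}
    for key, value in obj.items():
        if isinstance(value, dict):
            value = expand_paths(value)
        *parents, leaf = key.split(".")
        node = expanded
        for part in parents:
            node = node.setdefault(part, {})  # create intermediate objects as needed
        node[leaf] = value
    return expanded


folded = {"data.metadata.items": ["a", "b"]}
print(expand_paths(folded))  # {'data': {'metadata': {'items': ['a', 'b']}}}
```

The "treat every dot as a separator" shortcut is exactly why a safe mode matters: a real key such as "version.major" would be split here even if it was never folded.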

Converting Between JSON and TOON

Using the CLI Tool

TOON provides a command-line tool for quick conversions:

# Install globally
npm install -g @toon-format/cli

# Convert JSON to TOON
toon-cli input.json -o output.toon

# Show token savings
toon-cli input.json --stats

# Pipe from stdin
cat data.json | toon-cli

# Convert TOON back to JSON
toon-cli data.toon -o output.json

Using JavaScript/TypeScript

import { encode, decode } from '@toon-format/toon';

const data = {
  users: [
    { id: 1, name: 'Alice', role: 'admin' },
    { id: 2, name: 'Bob', role: 'user' }
  ]
};

// Encode to TOON
const toonString = encode(data);

// Decode back to JavaScript
const jsonData = decode(toonString);

Using Python

from toon_format import encode, decode

# Your data
data = {"users": [...]}

# Encode to TOON
toon_str = encode(data)

# Decode back to Python dict
original = decode(toon_str)

Best Practices for Using TOON

1. Use TOON as a Translation Layer

Keep JSON in your codebase and convert to TOON only when sending to LLMs:

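import json
from toon_format import encode

# Store and process as JSON (api_response is a placeholder for your raw JSON payload)
user_data = json.loads(api_response)

# Convert to TOON for LLM
toon_data = encode(user_data)
prompt = f"Analyze this data:\n```toon\n{toon_data}\n```"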

2. Guide the LLM with Format Examples

When using TOON in prompts, show the format instead of describing it:

Data is in TOON format (2-space indent, arrays show length and fields).

```toon
users[3]{id,name,role}:
  1,Alice,admin
  2,Bob,user
  3,Charlie,user
```

Task: Return only users with role "admin" as TOON.

3. Choose the Right Delimiter

For optimal token efficiency:

- Tab delimiters: Best for most tabular data

- Comma delimiters: Good default, familiar

- Pipe delimiters: Good when data contains commas

4. Monitor Your Savings

Use the --stats flag to track token reductions:

toon-cli data.json --stats

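5. Handle Errors Gracefully

Wrap TOON operations in try/except blocks so a failed conversion doesn’t break the request:

import json
from toon_format import encode

try:
    toon_data = encode(user_data)
except Exception as e:
    print(f"Encoding error: {e}")
    # Fall back to JSON so the LLM call can still go ahead
    toon_data = json.dumps(user_data)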

Common Use Cases for TOON

1. AI Data Analysis

# Analyzing large datasets with LLMs
# get_analytics is a placeholder for your own data-access function
analytics_data = get_analytics(days=60)
toon_data = encode(analytics_data)

prompt = f"""
Analyze this analytics data and identify trends:
```toon
{toon_data}
```
What patterns do you see?
"""

2. RAG Systems

# Retrieval-Augmented Generation with TOON
# search_knowledge_base is a placeholder for your retriever
documents = search_knowledge_base(query)
toon_docs = encode(documents)
context = f"Relevant documents:\n```toon\n{toon_docs}\n```"

3. Agent Frameworks

# AI agents processing structured data
tasks = [
    {"id": 1, "title": "Review PR", "priority": "high"},
    {"id": 2, "title": "Write tests", "priority": "medium"}
]

agent_input = encode(tasks)

4. Fine-Tuning Training Data

Using TOON for training data reduces token overhead:

# Training examples in TOON format
training_data = [
    {"input": encode(example["data"]), "output": example["label"]}
    for example in dataset
]

Limitations and Considerations

When TOON Isn’t the Best Choice

- Small datasets: Token savings are minimal on tiny objects

- Non-uniform data: Objects with different fields lose TOON’s advantages

- Deep nesting: JSON can be more compact for highly nested structures

- Legacy systems: JSON’s universal support makes it better for interoperability

Technical Limitations

- TOON is primarily designed for LLM input, not general-purpose APIs

- Encoding/decoding can be slightly slower than optimized JSON parsers (though negligible compared to LLM inference time)

- Not all languages have mature TOON libraries yet

- Requires developer education for teams unfamiliar with the format

Token Count Variations

Token counts vary by model and tokenizer:

- Benchmarks use GPT-style tokenizers (cl100k/o200k)

- Actual savings may differ with other models (e.g., SentencePiece)

- Always test with your specific LLM and tokenizer

The Future of TOON

TOON is rapidly gaining traction in the AI developer community. As of late 2025:

- Growing ecosystem: Implementations in 20+ programming languages

- Production-ready: Used in real-world applications

- Active development: Regular improvements and optimizations

- Community-driven: Open-source with contributions welcome

The format is particularly promising as:

- LLM context windows grow larger

- Token costs remain significant

- AI applications become more data-intensive

- Multi-agent systems become mainstream

Conclusion: JSON vs TOON – Making the Right Choice

Both JSON and TOON have their place in modern development:

JSON remains essential for:

- Web APIs and microservices

- Configuration files

- Data storage and transmission

- Universal interoperability

- Complex, non-uniform data structures

TOON is optimal for:

- LLM API interactions

- Large, uniform datasets

- Cost-sensitive AI applications

- Agent frameworks and RAG systems

- Structured AI output generation

The best approach? Use both strategically. Keep JSON as your primary format for application logic, and convert to TOON when interfacing with LLMs. This hybrid strategy gives you the familiarity and ecosystem support of JSON with the token efficiency of TOON where it matters most.

As AI applications continue to scale, token efficiency becomes increasingly important. TOON offers a practical solution that, on uniform tabular data, can cut token usage (and therefore LLM costs) by 30-60%, and in the benchmarks above it also scored higher on accuracy than JSON. For developers building AI-powered applications, TOON is a tool worth adding to your toolkit.

Getting Started with TOON

Ready to try TOON? Here’s your quick-start checklist:

- Explore the format: Visit the official TOON repository

- Try the playground: Use online converters to see token savings

- Install the library: Choose your language (Python, JavaScript, etc.)

- Start small: Convert one API call to TOON and measure results

- Scale up: Apply to high-volume LLM interactions

- Monitor savings: Track token reduction and cost impact

The future of AI data serialization is here—and it’s more efficient than ever. Give TOON a try and see how much you can save.

Have you tried TOON in your projects? What token savings have you seen? Share your experience in the comments below!
