Section 1: Introduction to Tavily

1.1 What is Tavily?

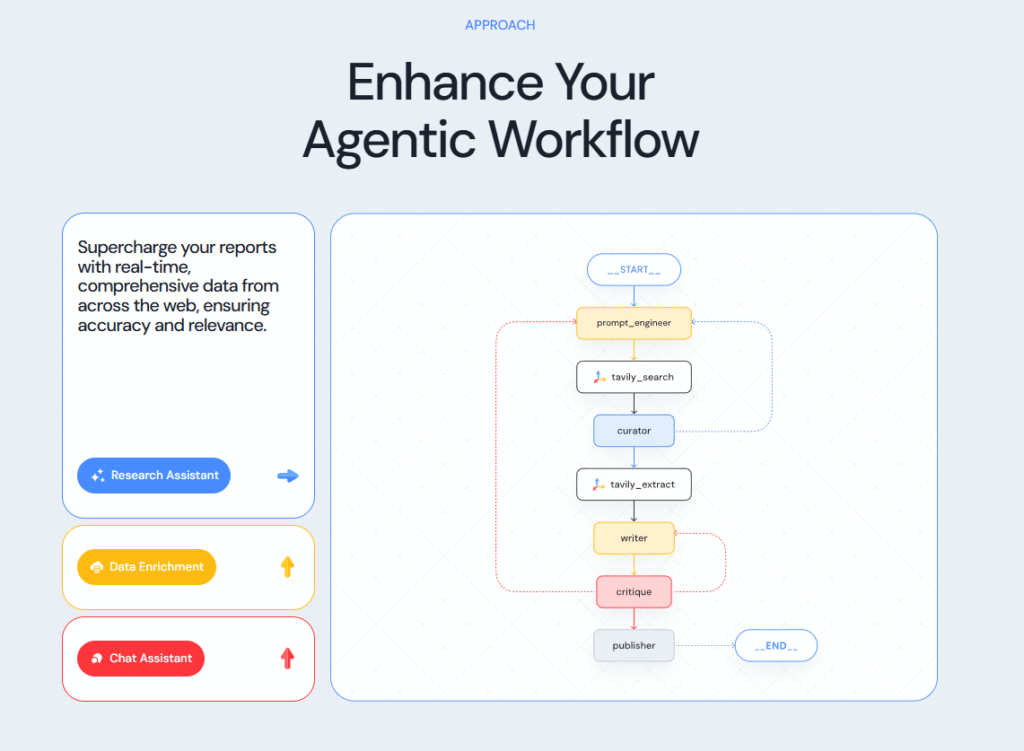

Tavily is a cutting-edge AI web search API designed to help developers integrate real-time, web-based data into their applications, particularly for large language models (LLMs) and AI agents. The service enables users to search, crawl, and extract real-time data directly from the web, providing AI-driven systems with access to the most current and relevant information.

Unlike traditional search engines or static datasets, Tavily is designed to facilitate the dynamic retrieval of information, empowering developers to build data-driven AI applications that can interact with the ever-evolving web. Whether you’re building an AI-powered chatbot, a real-time information retrieval system, or any other intelligent application, Tavily gives you the tools to tap into live, up-to-date content.

Tavily’s real-time web data extraction capabilities make it stand out, providing clean, precise, and contextually relevant information that can be fed into your systems with minimal effort.

1.2 The Importance of Real-Time Web Access for AI Agents

In today’s fast-paced world, AI agents and chatbots need access to real-time information to remain relevant and effective. Without access to live data, these agents are confined to outdated knowledge, potentially offering inaccurate or irrelevant responses.

Real-time web access opens up a world of possibilities for AI agents. For example:

- Customer support bots can retrieve the latest product information and FAQs to provide precise answers.

- Research agents can scan the web for the most recent academic papers, news articles, or market reports, ensuring they offer the most current insights.

- Recommendation engines can pull the latest reviews or user-generated content, making recommendations based on current trends.

Because Tavily retrieves web content at query time, it is a strong fit for AI systems that depend on current information. It also reduces the need to refresh datasets by hand, so developers can spend more of their time on the application itself.

1.3 Why Choose Tavily for Your AI and Web Scraping Needs?

Tavily stands out in the crowded world of web scraping and AI data access APIs for several reasons:

- Ease of Use: Tavily’s API is designed to be developer-friendly, offering intuitive documentation, clear setup instructions, and quick integration. Whether you’re working in Python or in PHP with Laravel, Tavily simplifies the process of incorporating real-time web data into your applications.

- Scalable and Flexible: Tavily’s infrastructure is built to handle a wide variety of workloads, from small-scale projects to large enterprise solutions. The flexible pricing plans cater to different usage needs, ensuring that startups, researchers, and large organizations alike can take advantage of Tavily’s capabilities.

- Accuracy and Transparency: One of Tavily’s key features is its ability to deliver accurate and clean data from the web. Every result includes source citations, ensuring that your AI agents provide trustworthy and verifiable answers. Whether you’re pulling search results or extracting specific data from websites, Tavily ensures that every piece of information is traceable back to its original source.

- Integration with Existing Tools: Tavily easily integrates with popular programming languages and frameworks, such as Python and Laravel, making it simple to incorporate into your existing tech stack. This compatibility is key for developers who need a plug-and-play solution.

- Support for Advanced Use Cases: Tavily supports more than just search. Its powerful web scraping and data extraction capabilities allow you to go beyond basic search results. You can retrieve structured data, analyze web content, and perform detailed extraction tailored to your application’s needs.

By offering real-time web data extraction and enabling developers to integrate these capabilities into their AI agents, Tavily unlocks a wealth of opportunities for building intelligent, up-to-date, and responsive systems.

Key Takeaways for This Section:

- Tavily is an API designed to provide real-time access to web data, making it ideal for AI agents, chatbots, and research applications.

- Real-time data is critical for building relevant and effective AI systems, and Tavily provides a solution to gather this data dynamically from the web.

- Tavily stands out due to its ease of integration, scalability, and accuracy in delivering clean, source-attributed data.

Section 2: Key Features of Tavily

Tavily is packed with robust features that make it a powerful tool for developers seeking to integrate real-time web data extraction and AI-powered search capabilities into their applications. Below are some of the standout features that differentiate Tavily from other AI web search APIs.

2.1 Real-Time Search Capabilities

One of the most compelling features of Tavily is its real-time search functionality. Unlike a traditional search engine, which returns a page of links for a person to read, Tavily fetches the latest relevant web data at the moment you request it and returns it in a form your application can consume directly.

Key Benefits:

- Up-to-the-minute information: Tavily’s search API returns live data from the web, ensuring that your application always has access to the most current content, whether it’s news, product updates, or research papers.

- Optimized search: Tavily’s search algorithm is designed to return concise, contextually relevant snippets of information, allowing AI agents to understand and process the data efficiently.

- Tailored results: You can fine-tune search parameters such as time range, language, and location to ensure that the results are most relevant to your needs.

Example Use Case:

If you’re building an AI-powered news aggregator, Tavily can help fetch the latest headlines from news websites and present them in real-time, keeping your application updated with breaking news.
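A minimal sketch of the news-aggregator idea follows. It assumes the official Python SDK (installed with pip install tavily-python), whose TavilyClient.search method accepts topic, time_range, and max_results keyword arguments in recent versions and returns a dict with a "results" list. The helper name fetch_headlines is hypothetical, and the client is passed in as a parameter so the function can be tried with a stand-in object when no API key is available.

```python
from typing import Any


def fetch_headlines(client: Any, query: str, max_results: int = 5) -> list[str]:
    """Return 'title (url)' lines for recent news matching `query`.

    Assumption: `client` behaves like tavily.TavilyClient in recent SDK
    versions. Any object with a compatible `search` method works, which
    keeps this function testable offline.
    """
    response = client.search(
        query,
        topic="news",        # restrict results to news sources
        time_range="day",    # only results from roughly the last day
        max_results=max_results,
    )
    # The search response is a dict; the individual hits live under "results".
    return [f"{hit['title']} ({hit['url']})" for hit in response.get("results", [])]


# Usage with a real client (requires an API key):
# from tavily import TavilyClient
# client = TavilyClient(api_key="your_api_key")
# for line in fetch_headlines(client, "AI regulation"):
#     print(line)
```

Refreshing the aggregator is then a matter of calling fetch_headlines on a timer and replacing the displayed list.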

2.2 Web Crawling and Data Extraction

Tavily excels at web crawling and data extraction. These features make it easy to gather structured data from web pages and extract the exact information you need without relying on pre-defined datasets.

Key Benefits:

- Precise extraction: Tavily can extract specific elements from web pages, such as product details, user reviews, or academic references, making it ideal for e-commerce platforms or research-driven AI systems.

- Content parsing: The API supports HTML parsing, which allows you to retrieve detailed data from structured web pages, ensuring you capture the necessary content for your AI agents.

- Advanced filtering: You can filter the results based on various criteria, ensuring you only extract the most relevant information for your application.
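Filtering can happen on Tavily’s side (the Python SDK’s search accepts include_domains and exclude_domains) or in your own code after the results arrive. The sketch below shows the client-side variant. It assumes each hit is a dict with a "url" and a numeric relevance "score", as in the SDK’s search results; the helper name filter_results and the 0.5 default threshold are hypothetical choices, not Tavily recommendations.

```python
from urllib.parse import urlparse


def filter_results(results, min_score=0.5, allowed_domains=None):
    """Keep hits that clear a relevance threshold and, optionally, come
    from an allow-listed domain (subdomains included).

    Assumes each hit is a dict with "url" and "score" keys; the threshold
    is an illustrative default.
    """
    kept = []
    for hit in results:
        if hit.get("score", 0.0) < min_score:
            continue  # drop low-relevance hits
        if allowed_domains:
            host = (urlparse(hit["url"]).hostname or "").lower()
            # Accept the domain itself or any subdomain of it.
            if not any(host == d or host.endswith("." + d) for d in allowed_domains):
                continue  # drop hits from domains outside the allow-list
        kept.append(hit)
    return kept
```

Server-side filters reduce what you pay to retrieve; a client-side pass like this is still handy for rules the API does not express, such as a minimum score.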

Example Use Case:

For an AI-powered recommendation system, Tavily can crawl product pages, extract reviews, and gather pricing information to build dynamic product suggestions for users based on live data.

2.3 Plug-and-Play Integration

Tavily is designed with developers in mind, offering plug-and-play integration for several languages and frameworks, including Python and Laravel (PHP). This means you can quickly integrate Tavily into your existing tech stack without writing extensive boilerplate code.

Key Benefits:

- Python SDK: Tavily provides an official Python SDK, allowing Python developers to integrate the API into their AI projects seamlessly. The SDK supports basic functionality such as search, extraction, and crawling, as well as advanced use cases like custom filtering and parameterization.

- Laravel SDK: Tavily’s Laravel integration makes it easy for PHP-based applications to interact with the API. Whether you’re building a Laravel-based web app or API, Tavily’s integration lets you leverage its powerful web search and extraction features with minimal setup.

- Quick setup: Tavily’s documentation provides clear and easy-to-follow instructions for getting started with the API in both Python and Laravel. Whether you’re a beginner or an experienced developer, the integration process is straightforward.

Example Use Case:

If you’re using Python to build an AI-based chatbot, Tavily’s Python SDK allows you to fetch real-time data to provide users with live responses based on the most recent information available on the web.

2.4 Source Citation and Transparency

Tavily understands the importance of transparency and trustworthiness when it comes to AI and data-driven systems. All results returned by the Tavily API come with source citations, ensuring that the data you use in your AI models is traceable back to the original source.

Key Benefits:

- Citations with every result: Every piece of data fetched from the web is accompanied by a source link or citation, making it easy to verify and trace the origin of the information.

- Enhanced trust: For AI systems, this level of transparency builds trust with users and ensures that the information shared by your agents is credible and reliable.

- Ethical AI: Providing source citations aligns with ethical AI practices, where the use of external data is fully acknowledged and attributed.

Example Use Case:

In an AI-powered research assistant, Tavily can pull the latest research papers, articles, or technical documentation and include citations with every response, allowing researchers to easily check the source and reliability of the information.
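For the research-assistant example above, the title and URL attached to each result are enough to build a numbered source list under every answer. A minimal sketch, assuming hits are dicts with a "url" and usually a "title"; answer_with_citations is a hypothetical helper, and the summary text is assumed to come from elsewhere (for example, an LLM).

```python
def answer_with_citations(summary: str, results: list[dict]) -> str:
    """Append a numbered source list so every answer is traceable to URLs.

    Assumes each hit has a "url" key and, ideally, a "title" key.
    """
    lines = [summary, "", "Sources:"]
    seen = set()
    n = 0
    for hit in results:
        url = hit["url"]
        if url in seen:
            continue  # cite each URL once even if several hits share it
        seen.add(url)
        n += 1
        # Fall back to the URL when a hit has no title.
        lines.append(f"[{n}] {hit.get('title', url)} - {url}")
    return "\n".join(lines)
```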

2.5 Scalability and Rate Limits

Tavily is designed to scale with your needs. Whether you’re working on a small project or developing a large enterprise-level system, Tavily provides flexible rate limits and infrastructure that can handle high volumes of web requests.

Key Benefits:

- Adjustable rate limits: Tavily offers customizable rate limits based on your usage, ensuring that you only pay for what you need while maintaining optimal performance. You can choose from a variety of pricing tiers to suit your project size and requirements.

- Enterprise readiness: Tavily’s infrastructure is capable of handling large volumes of data requests, making it a great option for companies looking to build enterprise-grade AI systems.

- Optimized performance: Tavily’s architecture is built to provide high-speed data retrieval with low latency, ensuring that real-time web access is fast and efficient.
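Even with generous limits, a busy application will occasionally be throttled, and a common client-side response is to retry with exponential backoff. The sketch below is generic: how a rate-limit error surfaces (an SDK exception type or an HTTP 429 status) is an assumption that may vary by SDK version, so the caller supplies the is_retryable predicate. call_with_backoff is a hypothetical helper name.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_backoff(
    fn: Callable[[], T],
    is_retryable: Callable[[Exception], bool],
    max_attempts: int = 5,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn(), retrying retryable failures with exponential backoff.

    is_retryable decides which exceptions count as throttling; how Tavily
    reports rate limiting is an assumption left to the caller.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return fn()
        except Exception as exc:
            # Re-raise immediately for non-retryable errors or on the last attempt.
            if not is_retryable(exc) or attempt == max_attempts - 1:
                raise
            # Wait base_delay * 2^attempt (1s, 2s, 4s, ...) plus up to 10% jitter
            # so that many clients do not retry in lockstep.
            delay = base_delay * (2 ** attempt)
            sleep(delay + random.uniform(0, delay * 0.1))
    raise AssertionError("unreachable: the loop always returns or raises")
```

Wrapping a request is then a matter of passing a zero-argument lambda, such as lambda: client.search("latest AI news"), together with a predicate that recognizes your SDK’s rate-limit error.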

Example Use Case:

For an enterprise-level AI system that relies on real-time web crawling and data extraction, Tavily can scale to accommodate the growing demands of your project, ensuring that all data is retrieved quickly and without interruption.

Key Takeaways for This Section:

- Tavily offers real-time search, web crawling, and data extraction capabilities, which can be used to enhance AI systems with up-to-date content.

- Its plug-and-play integration with Python and Laravel makes it an attractive choice for developers working within these frameworks.

- Tavily’s source citation feature enhances transparency and trust in the data used by AI systems.

- The platform is scalable and supports customizable rate limits, making it suitable for projects of any size, from small applications to enterprise solutions.

Section 3: How Tavily Works

In this section, we’ll break down the inner workings of Tavily—covering the architecture, API endpoints, and the core functionality that enables Tavily to provide real-time search and web data extraction. Understanding how Tavily works will help you leverage its full potential when integrating it into your AI-driven projects.

3.1 The Tavily API Architecture

Tavily operates through a simple yet powerful API architecture, designed for both ease of use and scalability. Its architecture ensures that developers can interact with web data through a unified interface, making integration seamless.

Key components of Tavily’s architecture include:

- API Gateway: The central entry point for all API requests. The gateway directs requests to the appropriate backend services, ensuring optimal response times.

- Data Crawlers: Tavily employs dedicated crawlers that extract data from various web sources in real-time. These crawlers can be configured to target specific websites, keywords, or data types, depending on your needs.

- Data Processors: Once the data is crawled, Tavily processes it to clean and structure it for easy consumption by your AI models. This step ensures that the data is accurate, well-formatted, and ready to be used.

- API Endpoints: Tavily provides several API endpoints to interact with different types of data retrieval processes, such as search, crawl, and extract. Each endpoint serves a specific function, allowing developers to access different types of data.

Example Use Case:

If you’re using Tavily to build an AI-powered research assistant, the search endpoint might be used to fetch the latest academic papers, while the extract endpoint could pull specific research data from the pages of a university’s website.

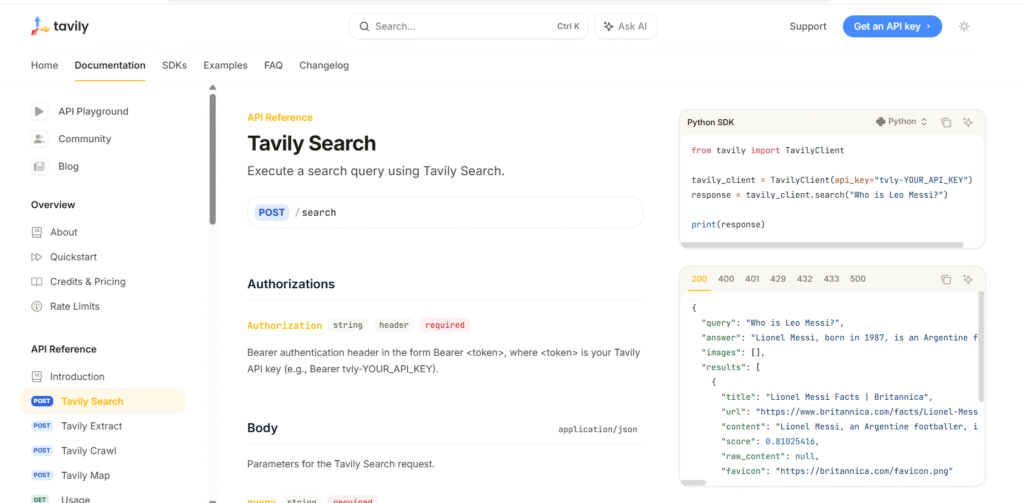

3.2 API Endpoints and Their Functions

Tavily’s API is structured around several key endpoints, each serving a specific purpose. These endpoints are designed to allow flexibility and fine control over the data you retrieve. Below is a breakdown of the core endpoints:

- Search Endpoint:

This endpoint allows you to search the web in real-time, fetching results based on the keywords, domains, or specific filters you provide. It returns concise data snippets that can be easily parsed and used within your AI models.

  - Example Use Case: Searching for product information on e-commerce websites.

- Extract Endpoint:

Tavily’s extract endpoint lets you pull specific elements from web pages. Whether it’s product details, reviews, or research papers, this endpoint can target and extract the precise content you need from structured web pages.

  - Example Use Case: Extracting product specifications and customer reviews from an e-commerce site for a recommendation engine.

- Crawl Endpoint:

The crawl endpoint allows you to navigate multiple pages on a website to gather content from a broader scope. This is useful for gathering large datasets or information from complex websites where a single search query won’t suffice.

  - Example Use Case: Crawling an entire academic website to pull a list of research papers or articles published over the past year.

- Metadata:

Beyond page content, Tavily’s results carry page-level metadata such as the page title, URL, and a relevance score. This can help AI agents judge the relevance and credibility of the data they retrieve.

  - Example Use Case: Deciding whether a page is trustworthy before passing its content to a model.
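The search and extract endpoints are often chained: search finds candidate pages, then extract pulls their full text. A minimal sketch, assuming the Python SDK’s TavilyClient, where search takes a max_results argument, extract takes a urls list, and both return dicts whose "results" lists hold a "url" and, for extract, a "raw_content" field. search_then_extract is a hypothetical helper name.

```python
from typing import Any


def search_then_extract(client: Any, query: str, top_n: int = 3) -> dict[str, str]:
    """Search for `query`, then pull the full page text of the top hits.

    Returns a mapping of URL -> extracted content. Assumes `client`
    exposes `search` and `extract` like tavily.TavilyClient does.
    """
    search_response = client.search(query, max_results=top_n)
    urls = [hit["url"] for hit in search_response.get("results", [])][:top_n]
    if not urls:
        return {}
    # One extract call for all URLs instead of one call per page.
    extract_response = client.extract(urls=urls)
    # Pages that could not be extracted are assumed to be reported outside
    # "results", so they are simply absent from the returned mapping.
    return {
        page["url"]: page.get("raw_content", "")
        for page in extract_response.get("results", [])
    }
```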

Example Use Case:

Let’s say you’re building an AI chatbot for a travel website. The search endpoint could be used to pull real-time flight data, while the extract endpoint could help you retrieve detailed descriptions of hotels and reviews from various travel sites.

3.3 Integration with Existing LLMs (Large Language Models)

Tavily’s AI web search API is designed to complement existing LLMs (Large Language Models), such as OpenAI’s GPT series, by feeding them real-time, structured data. This enables LLMs to generate more accurate and context-aware responses.

How Tavily Integrates with LLMs:

- Tavily provides a bridge between static training data (the foundation of most LLMs) and dynamic web data, enhancing the accuracy and real-time relevance of LLM-based systems.

- Once your application (or an agent calling Tavily as a tool) retrieves data from Tavily’s real-time search, extraction, or crawling endpoints, it passes that data to the LLM as context. The model can then use it to ground its responses to user queries and to handle complex topics more accurately.
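To make that bridge concrete, the sketch below turns search results into a numbered context block for an LLM prompt. It assumes each result dict has "title", "url", and "content" keys (the fields the Python SDK’s search results carry). build_grounded_prompt is a hypothetical name, the prompt wording is illustrative, and character counts stand in crudely for tokens.

```python
def build_grounded_prompt(question: str, results: list[dict], max_chars: int = 2000) -> str:
    """Format search hits as numbered sources followed by the user's question.

    Assumes each hit has "title", "url", and "content" keys. Sources are
    added in order until roughly `max_chars` of context is used.
    """
    blocks, used = [], 0
    for i, hit in enumerate(results, start=1):
        block = f"[{i}] {hit['title']} ({hit['url']})\n{hit['content']}"
        if used + len(block) > max_chars:
            break  # stop before exceeding the context budget
        blocks.append(block)
        used += len(block)
    context = "\n\n".join(blocks)
    return (
        "Answer the question using only the sources below and cite them as [n].\n\n"
        f"Sources:\n{context}\n\nQuestion: {question}"
    )
```

The resulting string can be sent to whichever LLM API you use; the [n] markers let the model cite sources with the same numbering as the list.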

Example Use Case:

In a customer service application, an LLM-powered chatbot could use Tavily to retrieve up-to-date product availability or live shipping information from e-commerce sites and provide personalized, relevant answers based on real-time data.

3.4 Example Use Cases

Here are a few examples of how Tavily can be used in different types of applications:

- AI-Powered News Aggregator:

Tavily’s real-time search endpoint could be used to pull the latest headlines, articles, and news updates from various sources across the web. The gathered data could be filtered and presented as part of an intelligent news aggregation service.

- Market Research AI:

For businesses in need of continuous market intelligence, Tavily’s crawling and extraction endpoints can help gather product prices, reviews, and competitor offerings from e-commerce platforms. This enables businesses to stay ahead of trends and adapt to changing market dynamics.

- Real-Time Travel Assistant:

A travel app could use Tavily’s search endpoint to find up-to-date flight prices, hotel availability, and local attractions. With Tavily’s ability to extract specific data, travelers could receive highly relevant recommendations based on real-time pricing and availability.

- E-commerce Product Recommender:

Using Tavily’s extract endpoint, an e-commerce site could gather product reviews and ratings from other websites, helping its recommendation engine suggest products based on live customer feedback and product performance.
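For the market-research case, a crawl can be narrowed to the pages you care about, such as product pages. A minimal sketch, assuming client.crawl behaves like TavilyClient.crawl in recent versions of the Python SDK (keyword arguments max_depth and limit, and a response dict whose "results" list holds "url" and "raw_content" entries). crawl_matching_pages and the "/products/" path filter are hypothetical.

```python
from typing import Any


def crawl_matching_pages(client: Any, start_url: str, path_keyword: str,
                         max_depth: int = 1, limit: int = 20) -> dict[str, str]:
    """Crawl from `start_url` and keep pages whose URL contains `path_keyword`.

    Assumes `client.crawl` accepts `max_depth` and `limit` and returns a
    dict with a "results" list of {"url", "raw_content"} entries.
    """
    response = client.crawl(start_url, max_depth=max_depth, limit=limit)
    return {
        page["url"]: page.get("raw_content", "")
        for page in response.get("results", [])
        if path_keyword in page["url"]  # client-side filter on the page path
    }


# Usage with a real client (requires an API key and a recent SDK):
# from tavily import TavilyClient
# client = TavilyClient(api_key="your_api_key")
# pages = crawl_matching_pages(client, "https://shop.example", "/products/")
```

Keeping max_depth and limit small bounds how many pages a single crawl visits, which matters when each crawled page consumes credits.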

Key Takeaways for This Section:

- Tavily’s API architecture is built around search, extract, and crawl endpoints, which provide real-time web access and data extraction.

- The search endpoint enables real-time data fetching from the web, while the extract endpoint allows you to pull specific data from structured pages.

- Tavily integrates seamlessly with LLMs to provide up-to-date data, improving the accuracy and contextual relevance of AI-driven systems.

- Tavily’s capabilities are ideal for market research, customer service applications, news aggregation, and more.

Section 4: Tavily’s Pricing Plans

Tavily offers a range of pricing options that cater to different needs, from individual developers to large enterprises. Understanding these pricing plans will help you choose the best option based on your usage, requirements, and scale of operations. Tavily’s transparent pricing ensures that you only pay for what you need, making it a flexible solution for various types of projects.

4.1 Free Plan: Features and Limitations

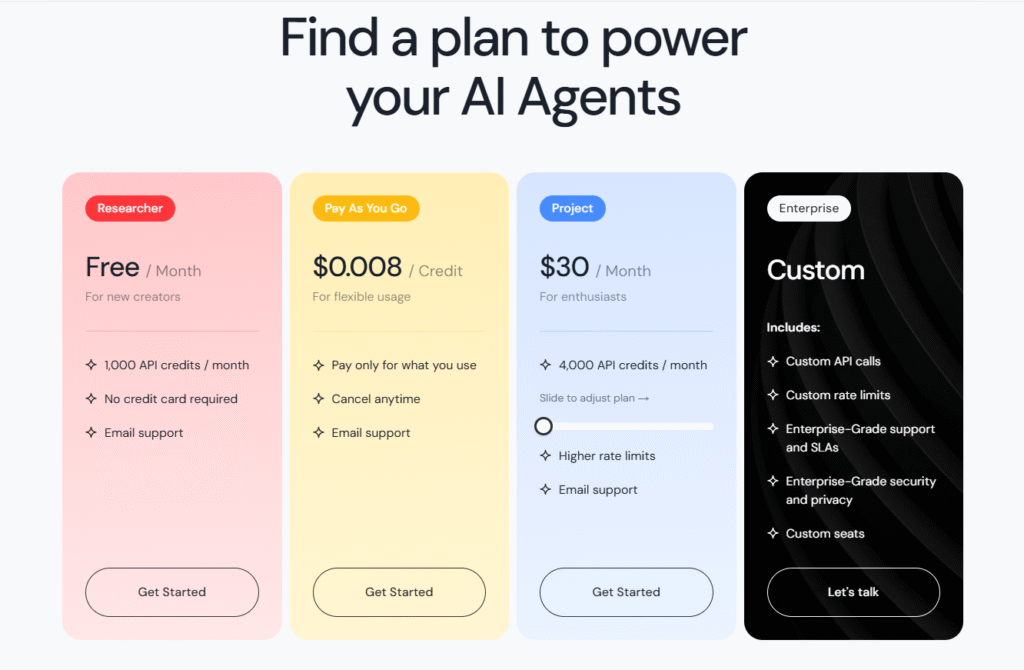

Tavily’s Free Plan is a great starting point for developers who are just beginning to explore the API or are working on small-scale projects. It allows you to test out Tavily’s core features without any financial commitment.

Key Features:

- 1,000 API credits per month: The Free Plan gives you access to 1,000 credits each month, which you can use for real-time searches, web crawling, and data extraction. This is ideal for small-scale projects or proof-of-concept applications.

- Access to core features: You’ll be able to use Tavily’s real-time search and data extraction features, though with some rate limits and restrictions.

- No credit card required: Signing up for the Free Plan does not require you to enter credit card details, making it accessible for developers who just want to try out Tavily.

Limitations:

- Lower rate limits: The Free Plan allows fewer requests in a given period than the paid plans, so bursts of traffic may be throttled or delayed. This is separate from the monthly credit allowance, which caps your total usage.

- Limited support: Free users may not have access to the premium support channels, so you’ll need to rely on community resources for troubleshooting and guidance.

Example Use Case:

If you’re building a small AI-powered chatbot or personalized recommendation system, the Free Plan gives you a great starting point to integrate real-time search and data extraction capabilities without any upfront costs.
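The Free Plan’s 1,000 monthly credits can run out quietly while you prototype. One way to make the budget visible is a small client-side counter, sketched below. It is an illustration rather than part of any Tavily SDK: the class name CreditBudget is hypothetical, and because the credit cost of a request depends on the endpoint and options you use, each cost is passed in rather than hard-coded (check Tavily’s current pricing documentation for actual values).

```python
class CreditBudget:
    """Track API credits spent against a monthly allowance (client-side only).

    Hypothetical helper: per-request costs are supplied by the caller
    because they depend on the endpoint and options used.
    """

    def __init__(self, monthly_credits: int = 1000):
        self.monthly_credits = monthly_credits
        self.spent = 0

    @property
    def remaining(self) -> int:
        return self.monthly_credits - self.spent

    def charge(self, cost: int) -> None:
        """Record a request costing `cost` credits, refusing it if over budget."""
        if cost < 0:
            raise ValueError("cost must be non-negative")
        if cost > self.remaining:
            raise RuntimeError(f"request needs {cost} credits, only {self.remaining} left")
        self.spent += cost

    def reset(self) -> None:
        """Call at the start of each billing month."""
        self.spent = 0
```

For instance, with a hypothetical cost of 2 credits per request, a 1,000-credit month covers 500 requests, and calling charge before each request makes the 501st fail locally instead of being sent.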

4.2 Pay-as-You-Go and Subscription Plans

For developers or businesses that need more flexibility and higher usage limits, Tavily offers Pay-as-You-Go and Subscription Plans. These plans provide additional features and larger credit allowances, and they can scale with your needs.

Pay-as-You-Go Plan:

- Customizable credits: You pay only for the credits you use, allowing for cost flexibility based on your needs. The more you use, the more you pay, but you can adjust your usage as necessary.

- Higher rate limits: This plan offers higher API rate limits, ensuring that you can make more requests without running into throttling issues.

- Ideal for scaling: If your project has variable usage needs or you’re working on a medium-sized application, this plan allows you to scale without committing to a fixed monthly fee.

Subscription Plans:

- Tavily offers a variety of subscription tiers to suit businesses of different sizes. These plans come with higher monthly credit allocations, reduced per-credit pricing, and additional features such as enhanced support, custom rate limits, and more.

- Basic Subscription: Provides a set number of credits per month at a fixed rate. This is great for smaller enterprises or growing startups with predictable data usage.

- Pro Subscription: Includes priority support, faster response times, and more credits to handle larger-scale projects. Suitable for businesses that rely on real-time data for critical operations.

- Enterprise Subscription: This plan is tailored for large enterprises with high-volume data needs. It offers dedicated support, customized pricing, and enhanced scalability for massive workloads. It also includes features like custom integration support and tailored rate limits.

Example Use Case:

A growing e-commerce platform might opt for the Pro Subscription to support their AI-powered product recommendation engine, ensuring that they can handle a larger volume of real-time data requests without hitting rate limits.

4.3 Enterprise-Level Solutions

For large businesses and organizations that need more advanced features, Tavily offers enterprise-level solutions that cater to complex, high-volume data requirements.

Key Features:

- Custom rate limits: Tailored rate limits ensure that enterprise customers can scale their usage according to the demands of their applications.

- Dedicated API support: Enterprise customers receive premium support from Tavily’s team, ensuring fast issue resolution and tailored solutions.

- Integration services: Tavily provides custom integration support, allowing enterprises to seamlessly integrate the API into their existing systems and workflows.

- Advanced security: Enterprise plans offer additional security measures, such as IP whitelisting and enhanced encryption, to ensure the safety and privacy of data.

Example Use Case:

A global logistics company might choose Tavily’s enterprise solution to integrate real-time data about global shipping routes, airfreight availability, and customs regulations into their AI-powered supply chain optimization tool.

4.4 Student and Researcher Discounts

Tavily understands the importance of supporting education and research. To help students and researchers access real-time data for academic projects, Tavily offers discounted plans for educational purposes.

Key Features:

- Free or discounted access: Students and researchers can receive free credits or discounted pricing to help with the costs of gathering web data for research purposes.

- Enhanced access to features: Educational plans come with access to advanced features like data extraction and crawling, allowing students and researchers to gather real-time data for experiments or papers.

Example Use Case:

A university research team might use Tavily’s discounted plan to collect real-time data from academic journals, government websites, and online databases for a study on global environmental change.

Key Takeaways for This Section:

- Tavily offers a Free Plan with 1,000 API credits per month, ideal for small-scale projects and experimentation.

- Pay-as-you-go and Subscription Plans provide flexible options for developers and businesses with varying usage needs.

- Enterprise-level solutions cater to large organizations with high-volume data requirements, offering custom rate limits and dedicated support.

- Student and researcher discounts make Tavily accessible for educational purposes, allowing students to access real-time data for research.

Section 5: Tavily’s Integration with Popular Tools

Tavily offers seamless integration with a variety of popular programming languages and frameworks, making it easy for developers to incorporate its real-time web search, data extraction, and crawling capabilities into their existing applications. In this section, we’ll focus on Tavily’s integration with Python, one of the most widely used languages for AI work, and Laravel, one of the most popular PHP frameworks.

5.1 Python API Integration

Python is one of the most popular languages for building AI applications, and Tavily provides a well-documented Python SDK that makes integration fast and easy.

Key Features:

- Official Python SDK: Tavily provides a Python SDK that covers the core features: search, crawling, and data extraction. The SDK wraps the raw API calls in easy-to-use Python methods, so you don’t have to build HTTP requests by hand.

- Easy Setup: Installing Tavily’s Python SDK takes a single pip command (pip install tavily-python). Once it is installed, you can set up your environment and start using the API in just a few steps.

- Customization: The Python SDK allows you to fully customize your queries. You can specify parameters such as keywords, domains, time ranges, and even define custom search filters. This flexibility ensures you get exactly the data you need.

- Integration with AI Models: Python is the dominant language for AI and machine learning, and because the SDK returns plain Python dictionaries, Tavily’s output is easy to pass into workflows built with frameworks like TensorFlow, PyTorch, and Hugging Face. You can use Tavily’s data as model inputs, feeding real-time information into your AI workflows.

Example Use Case:

If you’re building an AI-powered virtual assistant in Python, you could use Tavily’s Python SDK to pull real-time information from the web. For instance, your assistant could fetch the latest weather data, news updates, or product information and present it to the user on demand.

Quick Setup Example:

pip install tavily-python

from tavily import TavilyClient

# Initialize the Tavily client with your API key
client = TavilyClient(api_key="your_api_key")

# Perform a search request; the response is a dict with a "results" list
response = client.search("latest AI news")

# Process and display results
for result in response["results"]:
    print(result["title"], result["url"])

5.2 Laravel API Integration

For developers working within the Laravel framework, Tavily provides a simple integration path that ensures you can easily access real-time web data in your PHP-based applications.

Key Features:

- Laravel Package: Tavily offers a Laravel API package that wraps the Tavily API and provides an easy-to-use interface for making requests to the service. The package integrates directly into your Laravel application, allowing you to focus on building your app rather than managing API interactions.

- Seamless Setup: Installing the Tavily Laravel package is straightforward. With just a few commands, you can have Tavily up and running in your Laravel application, making it quick to integrate into your existing stack.

- RESTful API Calls: Tavily’s Laravel package abstracts the underlying REST calls, so you can fetch data such as real-time search results or crawl output with simple method calls and use it directly in your Laravel-based application.

- Support for Scheduled Tasks: With Laravel’s powerful task scheduling feature, you can schedule regular API requests to pull real-time data from the web at specific intervals. This is particularly useful for applications that need to monitor and update data on an ongoing basis.

Example Use Case:

If you’re building a real-time product pricing engine in Laravel, you can use Tavily’s Laravel integration to scrape data from e-commerce sites and update product prices in your database regularly.

Quick Setup Example:

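composer require tavily/tavily-laravel

<?php

namespace App\Http\Controllers;

use Tavily\Tavily;

// Hypothetical controller name. The Tavily\Tavily class and its search()
// method follow the package as described above; check its README.
class NewsController extends Controller
{
    public function fetchRealTimeData()
    {
        $client = new Tavily('your_api_key');

        // Perform a search query
        $results = $client->search('latest tech news');

        foreach ($results as $result) {
            echo $result->title . "\n";
        }
    }
}

5.3 SDKs and Tools for Python and Node.js Developers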

In addition to Python and Laravel, Tavily also provides SDKs and integration tools for Node.js developers. Each SDK offers tailored solutions that help developers build efficient, scalable applications using Tavily’s powerful API.

- Python SDK: Fully-featured for integrating with Python-based applications, it provides utility functions for various tasks like querying, parsing results, and processing data.

- Node.js SDK: A lightweight SDK that allows JavaScript developers to use Tavily with minimal setup. Node.js developers can access Tavily’s real-time search and data extraction capabilities with native JavaScript methods.

Example Use Case:

For a real-time customer support chatbot built in Node.js, you can use Tavily’s Node.js SDK to fetch live updates from product pages, retrieve pricing information, or gather FAQs from multiple sources and present the results to users as part of the conversation.

Key Takeaways for This Section:

- Tavily offers a Python SDK that simplifies the process of integrating real-time web search, crawling, and data extraction into Python-based applications.

- The Laravel integration allows PHP developers to easily access Tavily’s powerful API within Laravel applications, including the ability to schedule regular data updates.

- Tavily also supports Node.js developers with a tailored SDK, ensuring compatibility across a range of development environments.

Section 6: Developer-Focused Insights

In this section, we’ll focus on the practical aspects of using Tavily in real-world applications. From setting up the API to optimizing performance, we’ll cover essential insights and best practices that will help you make the most of Tavily’s capabilities.

6.1 Setting Up Tavily’s API: Step-by-Step Guide

Getting started with Tavily is straightforward, whether you’re working with Python, Laravel, or Node.js. Below is a step-by-step guide for setting up Tavily’s API in a Python-based environment, but the process is similar for other languages.

Step 1: Sign up and Get Your API Key

- First, sign up on Tavily’s website and obtain your API key. This key is necessary to authenticate your requests.

- Navigate to the API section of the Tavily dashboard to find your API key.
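Rather than pasting the key into source code, you can keep it in an environment variable and read it at startup. A minimal sketch follows; the variable name TAVILY_API_KEY is an assumed convention here, and load_api_key is a hypothetical helper.

```python
import os


def load_api_key(var_name: str = "TAVILY_API_KEY") -> str:
    """Read the Tavily API key from the environment instead of hard-coding it.

    The default variable name is an assumed convention; pass another name
    if your deployment uses one.
    """
    key = os.environ.get(var_name, "").strip()
    if not key:
        raise RuntimeError(f"Set the {var_name} environment variable to your Tavily API key.")
    return key


# Usage (requires the tavily-python package):
# from tavily import TavilyClient
# client = TavilyClient(api_key=load_api_key())
```

Failing fast with a clear message at startup is easier to debug than an authentication error on the first request.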

Step 2: Install the Tavily SDK

- For Python, you can install the Tavily SDK via pip. Open your terminal or command prompt and run the following command:

pip install tavily-python

Step 3: Set Up Your Environment

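- If you’re using Python, it’s good practice to create a virtual environment to isolate dependencies. Create and activate it before running the pip command from Step 2, so that the SDK is installed inside the environment.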

python3 -m venv tavily_env

source tavily_env/bin/activate # On Windows, use `tavily_env\Scripts\activate`

Step 4: Initialize the API Client

- With the SDK installed, initialize the Tavily client in your Python code. Use the API key you obtained earlier to authenticate.

from tavily import TavilyClient

# Initialize the Tavily client with your API key
client = TavilyClient(api_key="your_api_key")

Step 5: Make Your First Request

- With the setup complete, you can start making real-time web requests using the various endpoints, such as search, extract, and crawl.

Example: Fetching the latest tech news using the search endpoint.

response = client.search("latest tech news")

# The response is a dict; individual hits are under "results"
for result in response["results"]:
    print(result["title"], result["url"])

6.2 Integrating Tavily into Your AI System

Once you’ve set up Tavily and made your first request, you can begin integrating it into your AI models or data-driven applications. Tavily can be particularly useful for AI systems that rely on real-time data to provide accurate, dynamic responses.

Example: AI-powered Chatbot Integration

Suppose you’re building an AI chatbot in Python that provides real-time answers to user queries. By integrating Tavily into the system, the chatbot can fetch live data whenever a user asks a question about current events, product information, or other dynamic topics.

- Search and Extract Real-Time Data: Use the `search` endpoint to find relevant articles or resources.

- Process and Display Results: Parse the search results and display them to the user, ensuring the information is up-to-date.

- Contextual Responses: Combine the results with your chatbot’s logic to generate context-aware responses.
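For the "contextual responses" step, one common pattern is to paste the top results into the language model's prompt as numbered, citable sources. The sketch below shows that pattern under stated assumptions: `build_context` and `build_prompt` are hypothetical helpers, each result is assumed to carry `title`, `url`, and `content` keys, and the character limits are arbitrary illustrative values. Sending the prompt to a model is left to your chatbot framework.

```python
from typing import Any, Dict, List


def build_context(results: List[Dict[str, Any]], max_sources: int = 3,
                  max_chars_per_source: int = 500) -> str:
    """Format the top search results as a numbered, citable context block.

    Each source's text is truncated to `max_chars_per_source` characters
    so the combined prompt stays within a predictable size budget.
    """
    blocks = []
    for i, item in enumerate(results[:max_sources], start=1):
        text = item.get("content", "")[:max_chars_per_source]
        blocks.append(f"[{i}] {item.get('title', '')} ({item.get('url', '')})\n{text}")
    return "\n\n".join(blocks)


def build_prompt(question: str, results: List[Dict[str, Any]]) -> str:
    """Combine the user's question with live web context for an LLM call."""
    context = build_context(results)
    if not context:
        # No live sources: let the caller decide how to phrase a fallback.
        return f"Answer from general knowledge (no live sources found): {question}"
    return (
        "Answer the question using only the sources below and cite them by number.\n\n"
        f"Sources:\n{context}\n\nQuestion: {question}"
    )
```

Asking the model to cite sources by number makes it easy to show users the URLs behind each answer.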

Example Code:

```python
# Example function for retrieving real-time data for a chatbot.
# Assumes `client` is the TavilyClient created in Step 4.
def get_live_data(query):
    data = client.search(query)
    results = data.get("results", [])  # search returns a dict; hits live under "results"
    if results:
        top = results[0]
        return f"Here's the latest on {query}: {top['title']} - {top['url']}"
    return "Sorry, I couldn't find anything relevant."


# Simulating a chatbot query
user_query = "latest smartphone reviews"
response = get_live_data(user_query)
print(response)  # e.g. Here's the latest on latest smartphone reviews: [Title] - [URL]
```

6.3 Best Practices for Optimizing Tavily’s Performance

To ensure you’re using Tavily efficiently and optimizing its capabilities, here are a few best practices:

1. Efficient Querying:

- When making search requests, try to refine your queries to get the most relevant results. Using specific keywords and filtering based on time range, location, and language can improve both the quality and speed of results.

2. Handle Rate Limits:

- Be mindful of rate limits depending on your subscription plan. To avoid hitting limits, consider batching requests or using caching for frequently requested data.

3. Use Pagination for Large Data:

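- For large datasets, such as news articles or product listings, retrieve data in chunks rather than making one large request that might time out or be throttled. The Python SDK’s `search` method caps the number of hits per call with `max_results`, so splitting a broad topic into several narrower queries is a practical way to work through a large result space.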

4. Optimize Data Processing:

- Tavily provides structured data that can be easily consumed by your system. However, if you’re pulling a large number of results, consider using multi-threading or asynchronous processing to improve efficiency when handling large volumes of data.

5. Monitor API Usage:

- Regularly monitor your API usage through Tavily’s dashboard to ensure that you’re within the limits of your chosen plan. You can track your consumption of API credits and adjust your usage as needed to prevent unexpected throttling or extra charges.

6. Integrate Caching for Frequent Requests:

- If your application frequently queries similar data, consider integrating a caching system (e.g., Redis or Memcached) to store results temporarily. This helps reduce the number of API calls and speeds up response times.
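The caching and rate-limit advice above can be combined in a small wrapper. The sketch below is an in-process stand-in for Redis or Memcached (a shared cache is needed once several processes serve traffic), and `CachedSearcher` is a hypothetical class, not part of the SDK. It wraps any callable with a `search(query, **kwargs)` signature, such as `client.search`. As a simplifying assumption it retries on any exception; in production you would narrow this to the rate-limit error your SDK version raises, since retrying an invalid API key only wastes time.

```python
import time
from typing import Any, Callable, Dict, Hashable, Tuple


class CachedSearcher:
    """Wrap a search function with a TTL cache and retry-with-backoff.

    Identical calls within `ttl_seconds` are served from memory, saving
    API credits; failed calls are retried with exponential backoff.
    `clock` and `sleep` are injectable so the logic can be tested.
    """

    def __init__(self, search_fn: Callable[..., Dict[str, Any]],
                 ttl_seconds: float = 300.0, max_retries: int = 3,
                 base_delay: float = 1.0,
                 clock: Callable[[], float] = time.monotonic,
                 sleep: Callable[[float], None] = time.sleep):
        self._search_fn = search_fn
        self._ttl = ttl_seconds
        self._max_retries = max_retries
        self._base_delay = base_delay
        self._clock = clock
        self._sleep = sleep
        self._cache: Dict[Hashable, Tuple[float, Dict[str, Any]]] = {}

    def search(self, query: str, **kwargs: Any) -> Dict[str, Any]:
        # Hashable cache key: the query plus sorted keyword arguments
        # (list values such as include_domains become tuples).
        key = (query, tuple(sorted(
            (k, tuple(v) if isinstance(v, list) else v) for k, v in kwargs.items()
        )))
        cached = self._cache.get(key)
        if cached is not None and self._clock() - cached[0] < self._ttl:
            return cached[1]

        for attempt in range(self._max_retries + 1):
            try:
                result = self._search_fn(query, **kwargs)
                break
            except Exception:
                if attempt == self._max_retries:
                    raise  # out of retries: surface the original error
                # Wait base_delay, then 2x, 4x, ... before the next attempt.
                self._sleep(self._base_delay * (2 ** attempt))
        self._cache[key] = (self._clock(), result)
        return result
```

Usage would look like `searcher = CachedSearcher(client.search)` followed by `searcher.search("latest tech news", max_results=5)`.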

Key Takeaways for This Section:

- Setting up Tavily is simple, whether you’re using Python, Laravel, or Node.js, and getting started with the API only takes a few minutes.

- Tavily can be integrated into AI systems to provide real-time, dynamic responses, enhancing the functionality of chatbots, virtual assistants, and other AI-powered applications.

- Best practices for optimizing Tavily’s performance include efficient querying, handling rate limits, using pagination, and leveraging caching for frequently accessed data.

Section 7: Real-World Applications and Case Studies

In this section, we’ll explore how Tavily is being used in different industries and real-world applications. Tavily’s ability to provide real-time web search, data extraction, and crawling capabilities makes it an excellent tool for a wide range of use cases, from AI-powered chatbots to market research and beyond.

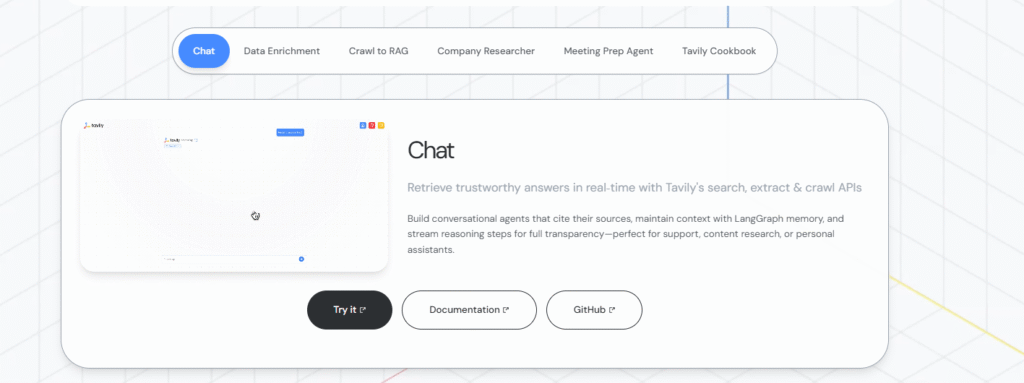

7.1 AI Chatbots Using Tavily

AI chatbots are becoming increasingly popular for customer support, sales, and user engagement. Tavily plays a crucial role in enhancing these chatbots by providing them with real-time data to answer user queries more effectively.

Use Case: Real-Time Customer Support Chatbot

A customer support chatbot can be integrated with Tavily to provide live responses to customer inquiries. By accessing Tavily’s real-time search and data extraction capabilities, the chatbot can:

- Retrieve the latest product information, availability, and specifications from e-commerce websites.

- Answer questions about current promotions, shipping times, and return policies by fetching up-to-date content from the company’s website.

- Pull information about new releases, customer reviews, or other dynamic content to assist users.

Example:

For an online retail store, Tavily’s search feature can be used to pull the most recent product availability or stock updates, which the chatbot can then use to answer customer questions about product availability in real time.

Example Code (for a chatbot integrated with Tavily):

```python
# Assumes `client` is an initialized TavilyClient (see Section 6.1).
def fetch_product_info(product_name):
    data = client.search(f"{product_name} availability")
    results = data.get("results", [])
    if results:
        top = results[0]
        return f"The latest info on {product_name}: {top['title']} - {top['url']}"
    return "I couldn't find the product details at the moment."


# Simulating a user asking for product info
product = "iPhone"
response = fetch_product_info(product)
print(response)  # e.g. The latest info on iPhone: [Product Info] - [URL]
```
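Questions about promotions, shipping, or returns should be answered from the retailer’s own pages rather than from the open web. The Python SDK’s `search` accepts an `include_domains` list for this purpose. The sketch below is illustrative only: `answer_policy_question` is a hypothetical helper, `shop.example.com` is a placeholder domain, and the client is passed in as a parameter so the function can be exercised with a stub instead of a live `TavilyClient`.

```python
from typing import Any, Dict, List, Optional


def answer_policy_question(client: Any, question: str, store_domains: List[str],
                           max_results: int = 3) -> Optional[Dict[str, str]]:
    """Search only the store's own domains and return the best-matching page.

    `client` is anything with a Tavily-style `search(query, **kwargs)`
    method. Returns None when nothing on the allowed domains matches.
    """
    data = client.search(
        question,
        include_domains=store_domains,  # keep answers on the retailer's site
        max_results=max_results,
    )
    results = data.get("results", [])
    if not results:
        return None
    # Prefer the highest-scoring hit; results without a score count as 0.
    best = max(results, key=lambda r: r.get("score", 0.0))
    return {"title": best.get("title", ""), "url": best.get("url", "")}
```

A call such as `answer_policy_question(client, "What is your return policy?", ["shop.example.com"])` would then return the most relevant policy page, or `None` so the bot can hand off to a human agent.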

7.2 Research Applications with Real-Time Data Access

Tavily is an invaluable tool for researchers who need access to up-to-date, reliable web data for their studies. By using Tavily to crawl academic articles, industry reports, or news updates, researchers can stay informed with the latest findings in their field.

Use Case: Academic Research

A research team studying environmental change could use Tavily to fetch the latest publications, research articles, or scientific reports related to their topic. Tavily can extract specific data from trusted sources such as journals, government websites, and university databases, making it easier to gather relevant and timely information.

Example:

In an environmental study, Tavily could help the research team retrieve the most recent studies on carbon emissions or pollution data. By accessing live data sources, the team can ensure their research is always based on the most up-to-date information.

Example Code (for academic research data retrieval):

```python
# Assumes `client` is an initialized TavilyClient (see Section 6.1).
def fetch_academic_articles(topic):
    data = client.search(f"{topic} research papers")
    results = data.get("results", [])
    if results:
        top = results[0]
        return f"Recent articles on {topic}: {top['title']} - {top['url']}"
    return "Sorry, no articles found at this time."


# Simulating a researcher asking for recent academic articles
user_query = "climate change impact"
response = fetch_academic_articles(user_query)
print(response)  # e.g. Recent articles on climate change impact: [Title] - [URL]
```
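A research assistant usually wants several sources rather than only the first hit. Search results include a relevance `score`, so one option is to keep only the hits above a threshold and return them as a reading list. In the sketch below, `reading_list` is a hypothetical helper, and the 0.5 default cutoff is an arbitrary illustrative value, not a recommendation.

```python
from typing import Any, Dict, List


def reading_list(response: Dict[str, Any], min_score: float = 0.5,
                 limit: int = 5) -> List[str]:
    """Return up to `limit` 'title - url' lines, highest score first.

    Hits below `min_score` (results without a score count as 0.0) are
    dropped so weakly related pages stay out of the list.
    """
    hits = [r for r in response.get("results", [])
            if r.get("score", 0.0) >= min_score]
    hits.sort(key=lambda r: r.get("score", 0.0), reverse=True)
    return [f"{r.get('title', '')} - {r.get('url', '')}" for r in hits[:limit]]
```

For example, `reading_list(client.search("climate change impact research papers", search_depth="advanced"))` would list the strongest matches first.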

7.3 How Tavily Powers Data-Driven Products

Tavily can be used to power data-driven products in various industries. Whether it’s e-commerce, financial services, or real-time content aggregation, Tavily’s ability to fetch and process live web data enables companies to build smarter, more responsive products.

Use Case: E-Commerce Product Recommendation Engine

In an e-commerce application, Tavily can be used to gather the most recent customer reviews, product specifications, and pricing from other sites, providing a comprehensive view of the product landscape. By integrating this real-time data into a recommendation engine, e-commerce businesses can offer better product suggestions based on current customer feedback and market trends.

Example:

An e-commerce platform could use Tavily to pull real-time customer ratings and reviews for products, enabling their recommendation engine to provide the most accurate product recommendations to users based on the most recent data.

Example Code (for product recommendation):

```python
# Assumes `client` is an initialized TavilyClient (see Section 6.1).
def fetch_product_reviews(product_name):
    data = client.search(f"{product_name} customer reviews")
    results = data.get("results", [])
    if results:
        top = results[0]
        return f"Latest reviews for {product_name}: {top['title']} - {top['url']}"
    return "I couldn't find customer reviews for this product."


# Simulating a user asking for product reviews
user_query = "Samsung Galaxy S21"
response = fetch_product_reviews(user_query)
print(response)  # e.g. Latest reviews for Samsung Galaxy S21: [Review Title] - [URL]
```
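Review searches often return several pages from the same site, while a recommendation engine usually benefits more from a broad spread of sources. The sketch below keeps the first result from each website. `one_result_per_site` is a hypothetical helper, and treating `www.example.com` and `example.com` as the same site is a simplifying assumption.

```python
from typing import Any, Dict, List
from urllib.parse import urlparse


def one_result_per_site(results: List[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """Keep only the first result from each website, preserving order.

    Results with an empty or unparsable URL host are dropped; a leading
    'www.' is ignored when comparing hosts.
    """
    seen = set()
    unique = []
    for item in results:
        host = urlparse(item.get("url", "")).netloc.lower()
        if host.startswith("www."):
            host = host[4:]
        if host and host not in seen:
            seen.add(host)
            unique.append(item)
    return unique
```

Applied to `client.search("Samsung Galaxy S21 customer reviews")["results"]`, this yields at most one review page per site.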

7.4 News Aggregation Services

Tavily can be used to build powerful news aggregation services, enabling users to access the latest headlines, articles, and breaking news from multiple sources in real time. By leveraging Tavily’s search and crawling features, you can create a news aggregator that pulls content from various publishers and presents it to users as it happens.

Use Case: Real-Time News Aggregator

A news aggregator can use Tavily to pull articles from various news sources such as blogs, news outlets, and social media platforms. This ensures users are always informed with the most current information, from breaking news to in-depth analysis.

Example:

A news aggregation website can use Tavily to pull the latest headlines related to a specific topic, such as global politics or technology news, and display them to users in a personalized feed.

Example Code (for a news aggregation service):

```python
# Assumes `client` is an initialized TavilyClient (see Section 6.1).
def fetch_latest_news(topic):
    data = client.search(f"{topic} latest news")
    results = data.get("results", [])
    if results:
        top = results[0]
        return f"Latest news on {topic}: {top['title']} - {top['url']}"
    return "Sorry, no news found at this time."


# Simulating a user asking for the latest tech news
user_query = "AI technology"
response = fetch_latest_news(user_query)
print(response)  # e.g. Latest news on AI technology: [News Title] - [URL]
```
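A personalized feed usually covers several topics at once. Because each search call spends most of its time waiting on the network, the topics can be fetched concurrently with a thread pool. The sketch below is hypothetical (`fetch_headlines` is not an SDK function); it passes `topic="news"` and `max_results` through to `search`, parameters of the Python SDK’s search method, and any extra arguments you supply override those defaults.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Any, Dict, List, Optional


def fetch_headlines(client: Any, topics: List[str], max_workers: int = 4,
                    search_kwargs: Optional[Dict[str, Any]] = None) -> Dict[str, List[str]]:
    """Search several news topics concurrently and collect their headlines.

    Each topic becomes one `client.search(...)` call. A topic whose call
    fails maps to an empty list instead of aborting the whole feed.
    """
    kwargs = {"topic": "news", "max_results": 5}
    kwargs.update(search_kwargs or {})

    def one(topic: str) -> List[str]:
        try:
            data = client.search(f"{topic} latest news", **kwargs)
        except Exception:
            return []  # one failing topic should not break the feed
        return [r.get("title", "") for r in data.get("results", [])]

    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map preserves input order, so headlines line up with topics
        return dict(zip(topics, pool.map(one, topics)))
```

For example, `fetch_headlines(client, ["AI technology", "global politics"])` returns a dict mapping each topic to its list of headlines.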

Key Takeaways for This Section:

- Tavily powers AI chatbots, research applications, and data-driven products by providing real-time, reliable web data.

- Customer support chatbots can use Tavily to provide up-to-date product information, pricing, and availability in real time.

- Researchers can leverage Tavily to pull the latest academic papers and articles, ensuring that their work is based on current information.

- E-commerce platforms can enhance their recommendation engines by using Tavily to gather live product reviews and data.

- News aggregation services can use Tavily to offer users real-time updates on breaking news and articles from multiple sources.

Section 8: Tavily vs. Competitors

In this section, we’ll compare Tavily with some of its main competitors in the AI web search and real-time data extraction space. By understanding how Tavily stacks up against other solutions, you’ll be able to make an informed decision on which tool best suits your project needs.

We will focus on a few well-known alternatives: Exa.ai, Linkup.so, and Serper. Each of these services offers web search and data extraction capabilities, but Tavily brings some unique advantages to the table.

8.1 Tavily vs. Exa.ai

Exa.ai is a competitor in the space of real-time web data access and AI-driven search tools. While Exa.ai provides similar functionality, Tavily offers several key differentiators.

Key Differences:

- Data Coverage:

Exa.ai is known for its precision in content extraction, but Tavily has broader coverage in terms of data sources, offering more comprehensive access to various web domains and content types. Tavily excels at real-time crawling, allowing it to capture live data from a wider variety of websites.

- Flexibility:

Tavily provides a flexible API that supports a wide array of search configurations and extraction methods. While Exa.ai offers a more streamlined, “out-of-the-box” solution, Tavily’s customization options (such as advanced search filtering and crawling) allow developers to fine-tune their use cases for specific needs.

- Cost-Effectiveness:

Tavily offers more affordable pricing than Exa.ai, especially for smaller teams and startups. Tavily’s free plan (with 1,000 credits per month) and pay-as-you-go options make it an appealing choice for cost-conscious developers.

Example Use Case:

If you’re working on a small-to-medium AI project and need broad access to dynamic web data, Tavily might be a better choice due to its flexibility and affordable pricing.

8.2 Tavily vs. Linkup.so

Linkup.so is another competitor that provides real-time search capabilities, but its primary focus is on knowledge extraction and specialized data feeds from curated sources. Tavily, however, offers broader, customizable web crawling and data extraction from a variety of domains.

Key Differences:

- Custom Data Extraction:

Tavily provides highly customizable data extraction methods, allowing developers to pull specific content from a wide range of websites. Linkup, on the other hand, primarily offers structured data feeds from predefined sources.

- Real-Time Crawling:

Tavily’s ability to crawl multiple web pages and gather large sets of data makes it stand out for projects that require broad data collection from diverse sources. Linkup, however, focuses on real-time search and may not provide the level of crawling flexibility offered by Tavily.

- Integration Flexibility:

Tavily is designed to integrate smoothly with a variety of languages and frameworks, including Python, Node.js, and Laravel, while Linkup primarily targets specific ecosystems. Tavily’s developer-friendly API allows for faster integration across platforms.

Example Use Case:

For a market research tool that needs to crawl multiple sources (like blogs, news websites, and e-commerce sites) for pricing data and product details, Tavily is likely the better choice due to its crawling and extraction flexibility.

8.3 Tavily vs. Serper

Serper focuses on providing real-time search capabilities specifically designed for integration with AI chatbots and virtual assistants. While Serper is efficient for specific search tasks, Tavily offers more versatile features for broader use cases, including web crawling and data extraction.

Key Differences:

- Comprehensive Search Capabilities:

While Serper specializes in web search for chatbots, Tavily provides a more comprehensive solution for real-time data gathering, including structured data extraction and full-page crawling. Tavily supports not just search, but also more granular data retrieval from various online sources.

- Scalability:

Tavily offers more scalable solutions, with higher limits for enterprises. Its enterprise plans support custom rate limits, advanced support, and the ability to handle high-volume requests, while Serper tends to focus more on smaller projects or low-volume queries.

- Pricing Plans:

Tavily is often seen as more affordable compared to Serper, especially for projects that require continuous access to live data. Tavily’s flexible pricing ensures that businesses can scale their use without being burdened by high costs.

Example Use Case:

For a customer support chatbot that needs to access data across a broad range of websites, Tavily’s advanced extraction and data crawling capabilities give it the edge over Serper, which is more focused on returning search results than on extracting and crawling page content.

8.4 Why Tavily Might Be the Right Choice

While each competitor offers a unique set of features, Tavily brings several key advantages to the table:

- Flexibility:

Tavily’s ability to search, crawl, and extract data from a wide variety of sources provides more flexibility compared to competitors that focus on narrower data sets or predefined sources.

- Real-Time Data Access:

Tavily excels at providing live web data, making it ideal for AI applications that rely on the most up-to-date information. Its ability to crawl websites dynamically ensures that developers can get fresh content whenever they need it.

- Affordable Pricing:

Tavily offers more competitive pricing for both small developers and large enterprises. With pay-as-you-go options and a free plan, Tavily is accessible to a wide range of users without the financial burden of high subscription fees.

- Developer-Friendly Integration:

Tavily’s SDKs for Python, Laravel, and Node.js make it easy for developers to integrate the API into existing systems, with minimal setup and hassle. The straightforward setup and clear documentation ensure that developers can focus on building features instead of managing complex integrations.

Key Takeaways for This Section:

- Tavily provides a more flexible, affordable, and developer-friendly solution compared to competitors like Exa.ai, Linkup, and Serper.

- Tavily excels in real-time web data crawling and custom data extraction, making it ideal for a wide range of use cases, from AI chatbots to market research and e-commerce platforms.

- Tavily’s scalability and pricing options ensure it can handle both small and large-scale projects, giving it a competitive edge in the real-time data extraction space.

Section 9: Pros and Cons of Using Tavily

In this section, we’ll provide a balanced view of the strengths and potential limitations of using Tavily for real-time web search, crawling, and data extraction. Understanding both the advantages and challenges will help you decide whether Tavily is the right solution for your project.

9.1 Strengths of Tavily

Tavily offers several compelling benefits that make it an attractive choice for developers, businesses, and researchers looking for a real-time web data solution.

1. Real-Time Web Data Access

One of the standout features of Tavily is its ability to provide real-time web data, enabling applications to always work with the most current information. This is crucial for AI systems, chatbots, recommendation engines, and other data-driven applications that need to stay updated with the latest content.

Example:

For a financial service application that tracks market trends, Tavily lets the system pull the latest market news, articles, and price reports from the web as they are published, helping the service make more timely recommendations.

2. Flexible API and Customization

Tavily’s API is designed to be highly customizable, allowing developers to specify the exact types of data they need. Whether it’s filtering search results by date, location, or keywords, Tavily gives you the flexibility to fine-tune your requests.

Example:

In a real-time recommendation engine for an e-commerce platform, Tavily’s ability to customize the search parameters ensures that the platform can provide personalized recommendations based on the latest reviews and pricing data.

3. Affordable Pricing and Free Plan

Tavily is cost-effective, offering flexible pay-as-you-go and subscription plans. For developers or small businesses just starting, Tavily’s free plan (1,000 credits per month) provides ample opportunity to test the API before committing to a paid plan.

Example:

For startups and individual developers, Tavily’s affordable pricing and free credits offer a low barrier to entry, allowing them to experiment and build prototypes without upfront costs.

4. Scalability

Tavily’s infrastructure is built to scale, making it suitable for both small projects and enterprise-level applications. The ability to adjust rate limits and credits based on usage makes Tavily a scalable solution for businesses that grow over time.

Example:

For an enterprise that needs to process large amounts of web data daily, Tavily offers enterprise-level solutions with customized rate limits, ensuring uninterrupted access to real-time data at scale.

5. Wide Integration Support

Tavily offers integration with popular development environments such as Python, Laravel, and Node.js, making it easy to incorporate into existing tech stacks. The ease of integration and clear documentation speeds up the development process, saving developers time.

Example:

A Python-based AI chatbot can seamlessly integrate with Tavily’s Python SDK to fetch live data, ensuring the chatbot provides real-time responses based on the most up-to-date information.

9.2 Potential Limitations or Drawbacks

While Tavily offers many benefits, it’s also important to consider its limitations to determine if it’s the best fit for your specific needs.

1. Rate Limits on the Free and Lower-Tier Plans

While Tavily offers a free plan and affordable pricing, the rate limits on these plans can be restrictive for larger projects. Developers may need to upgrade to higher-tier plans if they require more extensive API usage or higher throughput.

Example:

If you’re working on a high-traffic application, such as a real-time news aggregation service, you might quickly hit the API rate limits on the free plan, requiring an upgrade to a paid plan with higher credits and priority support.

2. Limited Data Coverage for Certain Niches

Tavily excels at crawling widely accessible web data, but for certain niche industries or highly specialized content, the breadth of data might not always be sufficient. It may not be as exhaustive as services tailored to specific verticals.

Example:

In cases where you’re looking for highly specialized academic papers or deeply technical data from niche sources, Tavily might not always have the same depth as competitors who focus on those specific types of data.

3. Reliance on Web Crawling for Data Extraction

Tavily’s reliance on web crawling for data extraction can sometimes result in inconsistencies, especially if the websites you are crawling from have dynamic content or frequently change their layout. This may require ongoing maintenance to ensure the data extraction remains accurate and functional.

Example:

For an e-commerce platform that scrapes product data from multiple websites, frequent layout changes on those websites could lead to broken extraction logic, requiring periodic updates to your scraping logic.

4. API Latency

While Tavily’s performance is generally fast, there may be occasional latency issues when fetching data from websites, especially if the websites being crawled have slow response times or are experiencing downtime. Real-time data fetching may be affected by these factors, impacting the overall user experience.

Example:

In a real-time customer support system, delayed responses due to web crawling latency could lead to slower replies, especially when fetching data from multiple sources at once.
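One way to contain this latency is to give each live lookup a deadline and fall back to a cached or placeholder answer when it is exceeded. The sketch below is a hypothetical helper, not SDK functionality: it runs any `search(query, **kwargs)` callable (for example, `client.search`) in a shared thread pool and returns `fallback` if the result is not ready within `timeout_s` seconds. The slow call keeps running in the background; it is not cancelled.

```python
from concurrent.futures import ThreadPoolExecutor, TimeoutError as FutureTimeout
from typing import Any, Callable, Dict

# Shared pool: a slow call can finish in the background without blocking
# the caller once the deadline has passed.
_POOL = ThreadPoolExecutor(max_workers=4)


def search_with_deadline(search_fn: Callable[..., Dict[str, Any]], query: str,
                         timeout_s: float, fallback: Dict[str, Any],
                         **kwargs: Any) -> Dict[str, Any]:
    """Return search results, or `fallback` if they take longer than timeout_s.

    The fallback might be a cached answer or an empty result set that the
    chatbot turns into a "still checking" reply. Exceptions raised by
    `search_fn` itself are propagated to the caller.
    """
    future = _POOL.submit(search_fn, query, **kwargs)
    try:
        return future.result(timeout=timeout_s)
    except FutureTimeout:
        return fallback
```

For example, `search_with_deadline(client.search, "order status policy", 3.0, {"results": []})` caps the wait for the live lookup at three seconds.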

Key Takeaways for This Section:

Strengths:

- Tavily provides real-time web data access, making it ideal for dynamic applications that need the latest information.

- The API is highly customizable, allowing developers to refine their queries for precise data retrieval.

- Tavily offers affordable pricing and a free plan, making it accessible for startups and small businesses.

- It’s scalable, supporting projects from small to enterprise-level.

- Tavily integrates easily with popular programming environments like Python, Laravel, and Node.js.

Limitations:

- Free and lower-tier plans come with rate limits, which may be restrictive for large-scale applications.

- Data coverage may be limited for specialized industries or niche content.

- Reliance on web crawling can lead to inconsistent results if websites change their structure or content.

- API latency may occasionally impact performance, especially with slower websites or large-scale requests.

Section 10: Conclusion

In this final section, we’ll summarize the key benefits and limitations of Tavily, provide a recommendation for its use, and highlight how Tavily can help you build more powerful, data-driven AI applications.

10.1 Summary of Tavily’s Key Benefits

Tavily stands out as an exceptional tool for developers, businesses, and researchers looking for real-time web search, data extraction, and crawling capabilities. Here’s a quick summary of what makes Tavily a great choice:

- Real-Time Web Data Access: Tavily gives you access to live web data, ensuring your applications always have the most up-to-date information, whether it’s for news aggregation, market research, or customer support.

Learn more: Tavily Real-Time Data Access.

- Flexible and Customizable API: Tavily allows you to tailor your queries with advanced search filters, giving you more control over the data you retrieve. This flexibility is crucial for projects that require specific data points from diverse sources.

Explore the API documentation: Tavily API Documentation.

- Affordability: With a free plan and pay-as-you-go options, Tavily is an affordable choice for startups and small developers, without compromising on functionality or flexibility.

Check pricing options: Tavily Pricing.

- Scalability: Tavily’s infrastructure is built to scale, making it suitable for both small-scale projects and large enterprise solutions. Whether you’re working on a small chatbot or a high-traffic data-driven application, Tavily can accommodate your needs.

Learn more about scalability: Tavily Enterprise Solutions.

- Wide Integration Support: Tavily offers integration with popular development environments such as Python, Laravel, and Node.js, ensuring that developers can easily incorporate its capabilities into their existing systems.

Find more SDKs and tools here: Tavily SDKs.

10.2 Final Thoughts and Recommendations

After a thorough review of Tavily’s features, strengths, and limitations, it is clear that Tavily is an excellent choice for developers and businesses looking for a real-time web search API. Tavily shines in scenarios where you need to gather live data from the web for AI-driven applications, research, or content aggregation.

Use Tavily if:

- You need real-time access to web data for applications like chatbots, recommendation engines, news aggregators, or market research tools.

- You’re working on a dynamic project that needs to stay up-to-date with current information, such as e-commerce platforms or financial services.

- You want an affordable and flexible solution with the option to scale as your project grows, with free credits to get started.

Consider alternatives if:

- Your project requires access to niche data sources that Tavily may not cover, such as specialized academic papers or highly specific industry data.

- You are working with high-volume data and need unlimited API access, as Tavily’s free and lower-tier plans may have rate limits that could be restrictive for large-scale applications.

Overall, Tavily is a powerful, cost-effective solution that can enhance the functionality of AI applications by enabling them to pull in real-time, accurate data from the web. It’s especially valuable for developers working with Python, Laravel, and Node.js, and it is flexible enough to accommodate a wide range of use cases, from e-commerce to customer service and research applications.

Final Recommendation:

If your project requires dynamic, real-time data and you need an API that offers both flexibility and affordability, Tavily is highly recommended. Its comprehensive API, ease of integration, and scalable pricing make it a strong contender for developers and businesses looking to build smarter, more responsive AI-powered applications.

For more information and to start integrating Tavily into your project, visit their official website.
